Speed optimization

Most TCP implementations perform poorly over WAN links. To name just two problems, the standard TCP retransmission algorithms (Selective Acknowledgments and TCP Fast Recovery) are inadequate for links with high loss rates and do not consider the needs of short-lived transactional connections.

Citrix SD-WAN WANOP implements a broad spectrum of WAN optimizations to keep the data flowing under all kinds of adverse conditions. These optimizations work transparently to ensure that the data arrives at its destination as quickly as possible.

WAN optimization operates transparently and requires no configuration.

WAN optimization is a standard feature on all Citrix SD-WAN WANOP appliances.

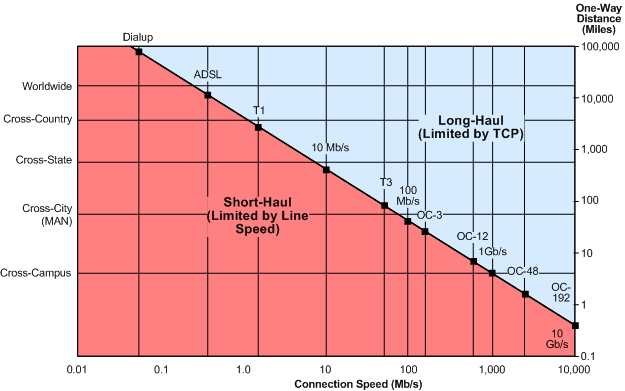

Figure 1 shows the transfer speeds possible at various distances without acceleration, when the endpoints use standard TCP (TCP Reno). For example, without acceleration, gigabit throughput is possible within a radius of a few miles, 100 Mbps is attainable at distances of less than 100 miles, and throughput on a worldwide connection is limited to less than 1 Mbps, regardless of the actual speed of the link. With acceleration, however, the speeds above the diagonal line become available to applications, and distance is no longer a limiting factor.

Figure 1. Non-accelerated TCP Performance Plummets With Distance

Note

Without Citrix acceleration, TCP throughput is inversely proportional to round-trip time, and therefore to distance, making it impossible to use the full bandwidth of long-distance, high-speed links. With acceleration, the distance factor disappears, and the full speed of a link can be used at any distance. (Chart based on the model by Mathis et al., Pittsburgh Supercomputing Center.)
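
The Mathis model bounds steady-state TCP Reno throughput at roughly (MSS / RTT) × C / √p, where p is the packet loss rate and C is a constant near 1.22. The following Python sketch evaluates that bound for a few distances. It assumes a signal speed of about 200,000 km/s in fiber, a straight path with no routing detours or queuing delay, a 1460-byte MSS, and 0.1% loss. These parameters are illustrative only and are not the values used to draw Figure 1.

```python
import math

# Illustrative assumptions, not values taken from Figure 1.
SPEED_IN_FIBER_M_PER_S = 2.0e8      # roughly two-thirds of the speed of light
MATHIS_C = math.sqrt(3.0 / 2.0)     # ~1.22, the constant from Mathis et al.


def rtt_from_distance(distance_km: float) -> float:
    """Round-trip propagation delay in seconds, ignoring detours and queuing."""
    return 2.0 * distance_km * 1000.0 / SPEED_IN_FIBER_M_PER_S


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate upper bound on TCP Reno throughput: (MSS / RTT) * C / sqrt(p)."""
    if rtt_s <= 0 or not 0 < loss_rate < 1:
        raise ValueError("rtt_s must be positive and loss_rate must be in (0, 1)")
    return (mss_bytes * 8 / rtt_s) * MATHIS_C / math.sqrt(loss_rate)


if __name__ == "__main__":
    # Hypothetical path: 1460-byte MSS and 0.1% packet loss.
    for km in (10, 160, 1600, 16000):
        rtt = rtt_from_distance(km)
        bps = mathis_throughput_bps(1460, rtt, 0.001)
        print(f"{km:>6} km  RTT {rtt * 1000:6.1f} ms  ~{bps / 1e6:9.2f} Mbps")
```

Because RTT is in the denominator, doubling the distance halves the bound, which is the inverse relationship described in the note.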

Accelerated transfer performance is approximately equal to the link bandwidth. The transfer speed is not only higher than with unaccelerated TCP but also much more consistent as network conditions change. The effect is to make distant connections behave as if they were local. User-perceived responsiveness remains constant regardless of link utilization: with normal TCP, a WAN operating at 90% utilization is useless for interactive tasks, but an accelerated link is as responsive at 90% utilization as at 10%.

With short-haul connections (those that fall below the diagonal line in Figure 1), little or no acceleration takes place under good network conditions, but if the network becomes degraded, performance drops off much more slowly than with ordinary TCP.

Non-TCP traffic, such as UDP, is not accelerated. However, it is still managed by the traffic shaper.

Example

One example of advanced TCP optimization is a retransmission optimization called transactional mode. A peculiarity of TCP is that, if the last packet in a transaction is dropped, the sender does not notice the loss until a retransmission timeout (RTO) period has elapsed, because no later packets arrive to trigger the duplicate acknowledgments that fast retransmit relies on. This delay, which is typically at least one second long and often longer, causes the multiple-second stalls seen on lossy links, and these stalls make interactive sessions unpleasant or impossible.
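
The one-second floor comes from the standard TCP retransmission timer. The Python sketch below follows the RTO computation in RFC 6298: smoothed RTT (SRTT) and RTT variation (RTTVAR) estimates, RTO = SRTT + max(G, 4 × RTTVAR), rounded up to one second, and doubled on each expiry. It models an endpoint's standard TCP timer, not the appliance's internal logic. The clock granularity G and the 60-second cap are assumed values, and some operating systems use a minimum RTO below one second.

```python
from dataclasses import dataclass
from typing import Optional

# Constants from RFC 6298; G_S and MAX_RTO_S are assumed choices.
ALPHA = 1 / 8        # gain for the smoothed RTT
BETA = 1 / 4         # gain for the RTT variation
K = 4                # variance multiplier
G_S = 0.001          # assumed clock granularity (1 ms)
MIN_RTO_S = 1.0      # RFC 6298: round RTO up to 1 second
MAX_RTO_S = 60.0     # assumed cap; RFC 6298 allows any cap of at least 60 s


@dataclass
class RtoEstimator:
    srtt: Optional[float] = None
    rttvar: Optional[float] = None
    rto: float = 1.0  # initial RTO before any RTT sample

    def on_rtt_sample(self, r: float) -> float:
        """Update SRTT and RTTVAR with an RTT measurement r (seconds); return the new RTO."""
        if self.srtt is None or self.rttvar is None:
            self.srtt = r
            self.rttvar = r / 2
        else:
            # RTTVAR is updated first, using the previous SRTT.
            self.rttvar = (1 - BETA) * self.rttvar + BETA * abs(self.srtt - r)
            self.srtt = (1 - ALPHA) * self.srtt + ALPHA * r
        raw = self.srtt + max(G_S, K * self.rttvar)
        self.rto = min(max(raw, MIN_RTO_S), MAX_RTO_S)
        return self.rto

    def on_timeout(self) -> float:
        """Back off after the timer expires by doubling the RTO, up to the cap."""
        self.rto = min(self.rto * 2, MAX_RTO_S)
        return self.rto
```

Even on a path with a 50 ms RTT, the first sample gives 0.05 + 4 × 0.025 = 0.15 s, which is rounded up to 1 s, so a lost final packet stalls the transaction for at least that long.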

Transactional mode solves this problem by automatically retransmitting the final packet of a transaction after a brief delay. Therefore, an RTO does not happen unless both copies are dropped, which is unlikely.
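
To see why a delayed duplicate of the final packet helps, consider a simplified model in which each packet is lost independently with probability p. Without transactional mode, losing the last packet forces an RTO with probability p; with a duplicate, an RTO requires both copies to be lost, with probability p². The Python sketch below computes both cases and the resulting expected end-of-transaction delay. The `resend_delay_s` parameter is hypothetical, because the actual delay the appliance uses is not specified here, and the model ignores ACK loss and bursty (correlated) loss.

```python
def tail_rto_probability(loss_rate: float, duplicate_tail: bool) -> float:
    """Chance that recovering the final packet of a transaction requires an RTO.

    Simplified model: losses are independent and ACK loss is ignored.
    """
    if not 0.0 <= loss_rate <= 1.0:
        raise ValueError("loss_rate must be between 0 and 1")
    # Without a duplicate, losing the single copy forces an RTO.
    # With a duplicate, both copies must be lost.
    return loss_rate ** 2 if duplicate_tail else loss_rate


def expected_tail_delay_s(loss_rate: float, rto_s: float,
                          resend_delay_s: float, duplicate_tail: bool) -> float:
    """Expected extra wait at the end of a transaction, in seconds."""
    p_rto = tail_rto_probability(loss_rate, duplicate_tail)
    if not duplicate_tail:
        return p_rto * rto_s
    # Original lost but duplicate delivered: only the brief resend delay is added.
    recovered_by_duplicate = loss_rate * (1 - loss_rate)
    return recovered_by_duplicate * resend_delay_s + p_rto * rto_s
```

With 2% loss, a 1-second RTO, and a hypothetical 50 ms resend delay, the expected stall in this model drops from 20 ms to about 1.4 ms per transaction.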

A bulk transfer is essentially a single enormous transaction, so the extra bandwidth that transactional mode uses for a bulk transfer can be as little as one packet per file. Interactive traffic, such as key presses or mouse movements, consists of small transactions, and a transaction might be a single undersized packet. Sending such small packets twice requires little bandwidth. In effect, transactional mode provides forward error correction (FEC) for interactive traffic and end-of-transaction RTO protection for other traffic.
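
The bandwidth cost described above can be estimated with simple arithmetic. The Python sketch below computes the extra bytes that result from sending the final segment of a transaction twice, both in absolute terms and as a fraction of the transaction's bytes on the wire. The 1460-byte MSS and the 40-byte IPv4 + TCP header (no options) are assumptions for illustration.

```python
from typing import Tuple


def duplicate_tail_overhead(transaction_bytes: int, mss: int = 1460,
                            header_bytes: int = 40) -> Tuple[int, float]:
    """Return (extra bytes, extra fraction of wire bytes) from resending the last segment.

    Assumes each segment carries up to `mss` payload bytes plus a fixed
    `header_bytes` of IPv4 and TCP headers.
    """
    if transaction_bytes <= 0 or mss <= 0:
        raise ValueError("transaction_bytes and mss must be positive")
    full, rem = divmod(transaction_bytes, mss)
    segments = full + (1 if rem else 0)
    last_payload = rem if rem else mss          # size of the final segment's payload
    wire_bytes = transaction_bytes + segments * header_bytes
    extra_bytes = last_payload + header_bytes   # one more copy of the final segment
    return extra_bytes, extra_bytes / wire_bytes
```

A single 1-byte keystroke doubles its on-wire cost (41 extra bytes, 100%), while a 10,000,000-byte file transfer gains only one 500-byte segment, under 0.01% of the bytes sent.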
