Dynamic Routing support in NetScaler CPX

NetScaler CPX supports the BGP dynamic routing protocol. The key objective of the dynamic routing protocol is to advertise the virtual server’s IP address based on the health of the services bound to the virtual server. This helps an upstream router choose the best among multiple routes to a topographically distributed virtual server.

For information about the non-default password in NetScaler CPX, see the Support for using a non-default password in NetScaler CPX section in the Configuring NetScaler CPX document.

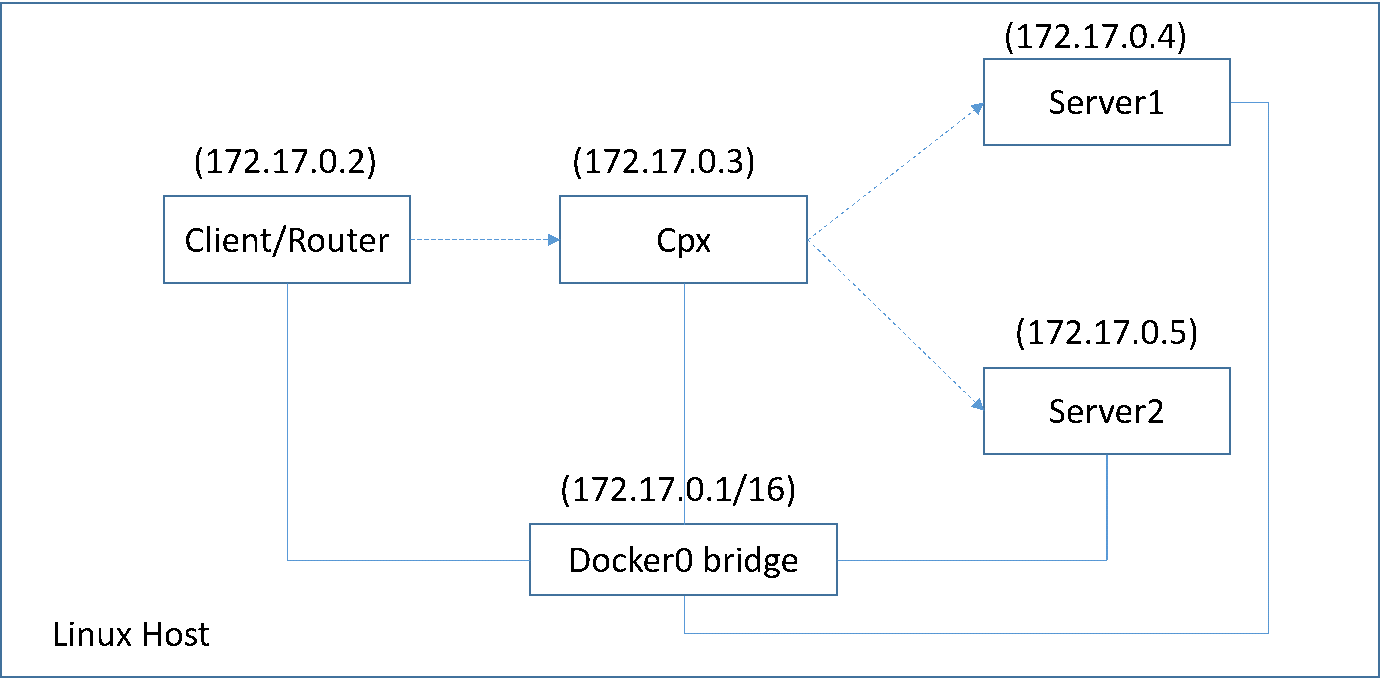

In a single host network, the client, the servers, and the NetScaler CPX instance are deployed as containers on the same Docker host. All the containers are connected through the docker0 bridge. In this environment, the NetScaler CPX instance acts as a proxy for the applications provisioned as containers on the same Docker host. For information about NetScaler CPX host networking mode deployment, see Host networking mode.

The following figure illustrates the single host topology.

In this topology, virtual servers are configured and advertised (based on the health of services) to the upstream network or router using BGP.

Perform the following steps to configure BGP on NetScaler CPX on a single Docker host in bridge networking mode.

Configure BGP-based Route Health Injection using the REST API on NetScaler CPX

- Create a container from the NetScaler CPX image using the following command:

  docker run -dt --privileged=true -p 22 -p 80 -p 161 -e EULA=yes --ulimit core=-1 cpx:<tag>

  For example:

  docker run -dt --privileged=true -p 22 -p 80 -p 161 -e EULA=yes --ulimit core=-1 cpx:12.1-50.16

- Log in to the container using the following command:

  docker exec -it <container id> bash

- Enable the BGP feature using the following command:

  cli_script.sh "enable ns feature bgp"

- Obtain the NSIP using the show ns ip command:

  cli_script.sh "show ns ip"

- Add the virtual server using the following command:

  cli_script.sh "add lb vserver <vserver_name> http <VIP> <PORT>"

- Add services and bind them to the virtual server.

- Enable hostroute for the VIP using the following command:

  cli_script.sh "set ns ip <VIP> -hostroute enabled"

  Log out from the container and send BGP NITRO commands from the host to the NSIP on port 9080.

- Configure the BGP router.

  For example, to apply the following configuration:

  router bgp 100
  neighbor 172.17.0.2 remote-as 101
  redistribute kernel

  specify the command as follows:

  curl -u username:password http://<NSIP>:9080/nitro/v1/config/ -X POST --data 'object={"routerDynamicRouting": {"bgpRouter" : {"localAS":100, "neighbor": [{ "address": "172.17.0.2", "remoteAS": 101}], "afParams":{"addressFamily": "ipv4", "redistribute": {"protocol": "kernel"}}}}}'

- Install the learnt BGP routes into the packet engine (PE) using the following NITRO command:

  curl -u username:password http://<NSIP>:9080/nitro/v1/config/ --data 'object={"params":{"action":"apply"},"routerDynamicRouting": {"commandstring" : "ns route-install bgp"}}'

- Verify the BGP adjacency state using the following NITRO command:

  curl -u username:password http://<NSIP>:9080/nitro/v1/config/routerDynamicRouting/bgpRouter

  Sample output:

  root@ubuntu:~# curl -u username:password http://172.17.0.3:9080/nitro/v1/config/routerDynamicRouting/bgpRouter
  { "errorcode": 0, "message": "Done", "severity": "NONE", "routerDynamicRouting":{"bgpRouter":[{
    "localAS": 100,
    "routerId": "172.17.0.3",
    "afParams": [
      { "addressFamily": "ipv4" },
      { "addressFamily": "ipv6" }
    ],
    "neighbor": [
      {
        "address": "172.17.0.2",
        "remoteAS": 101,
        "ASOriginationInterval": 15,
        "advertisementInterval": 30,
        "holdTimer": 90,
        "keepaliveTimer": 30,
        "state": "Connect",
        "singlehopBfd": false,
        "multihopBfd": false,
        "afParams": [
          { "addressFamily": "ipv4", "activate": true },
          { "addressFamily": "ipv6", "activate": false }
        ]

- Verify that the routes learnt through BGP are installed in the packet engine using the following command:

  cli_script.sh "show route"

- Save the configuration using the following command:

  cli_script.sh "save config"

The dynamic routing configuration is saved in the /nsconfig/ZebOS.conf file.
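The two NITRO steps above (configuring the BGP router and verifying the adjacency state) can be sketched as small shell helpers. This is a minimal sketch and not part of NetScaler CPX: the function names bgp_router_payload and bgp_neighbor_states are hypothetical, the payload mirrors only the single-neighbor, kernel-redistribution example in this section, and the state extraction relies on grep and sed matching the field layout of the sample output rather than on a real JSON parser.

```shell
# Hypothetical helpers (not shipped with NetScaler CPX); a sketch under the
# assumptions stated above.

# Build the form-encoded NITRO body that configures a BGP router with one
# neighbor and kernel-route redistribution for IPv4.
# Arguments: <local AS> <neighbor IP address> <remote AS>
bgp_router_payload() {
    local local_as="$1" neighbor_ip="$2" remote_as="$3"
    printf 'object={"routerDynamicRouting": {"bgpRouter": {"localAS": %s, "neighbor": [{"address": "%s", "remoteAS": %s}], "afParams": {"addressFamily": "ipv4", "redistribute": {"protocol": "kernel"}}}}}' \
        "$local_as" "$neighbor_ip" "$remote_as"
}

# Read a bgpRouter GET response on stdin and print each neighbor "state"
# value on its own line. Assumes the "state": "<value>" layout of the
# sample output; prints nothing if no state field is present.
bgp_neighbor_states() {
    grep -o '"state": *"[^"]*"' | sed 's/.*: *"\(.*\)"/\1/'
}

# Example use from the Docker host (replace <NSIP> and the credentials):
#   curl -u username:password "http://<NSIP>:9080/nitro/v1/config/" \
#        -X POST --data "$(bgp_router_payload 100 172.17.0.2 101)"
#   curl -s -u username:password \
#        "http://<NSIP>:9080/nitro/v1/config/routerDynamicRouting/bgpRouter" \
#        | bgp_neighbor_states
```

In the sample output the neighbor is in the Connect state, which in the BGP state machine means the session has not come up yet. A session that is fully up would normally be reported as Established, so a script polling bgp_neighbor_states could wait for that value before checking the installed routes.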