NetScaler Observability Exporter with Kafka as endpoint

NetScaler Observability Exporter is a container that collects metrics and transactions from NetScaler. It also transforms the data into the formats (such as AVRO) that are supported in Kafka and exports the data to the endpoint. Kafka is an open-source and distributed event streaming platform for high-performance data pipelines and streaming analytics.

Note:

NetScaler uses the v0 version of the Kafka APIs, which are supported up to Kafka version 3.X. Kafka version 4.X is not supported.

Deploy NetScaler Observability Exporter

You can deploy NetScaler Observability Exporter using the YAML file. Based on the NetScaler deployment, you can use NetScaler Observability Exporter to export metrics and transaction data from NetScaler. You can deploy NetScaler either as a CPX pod inside the Kubernetes cluster or in the MPX or VPX form factor outside the cluster.

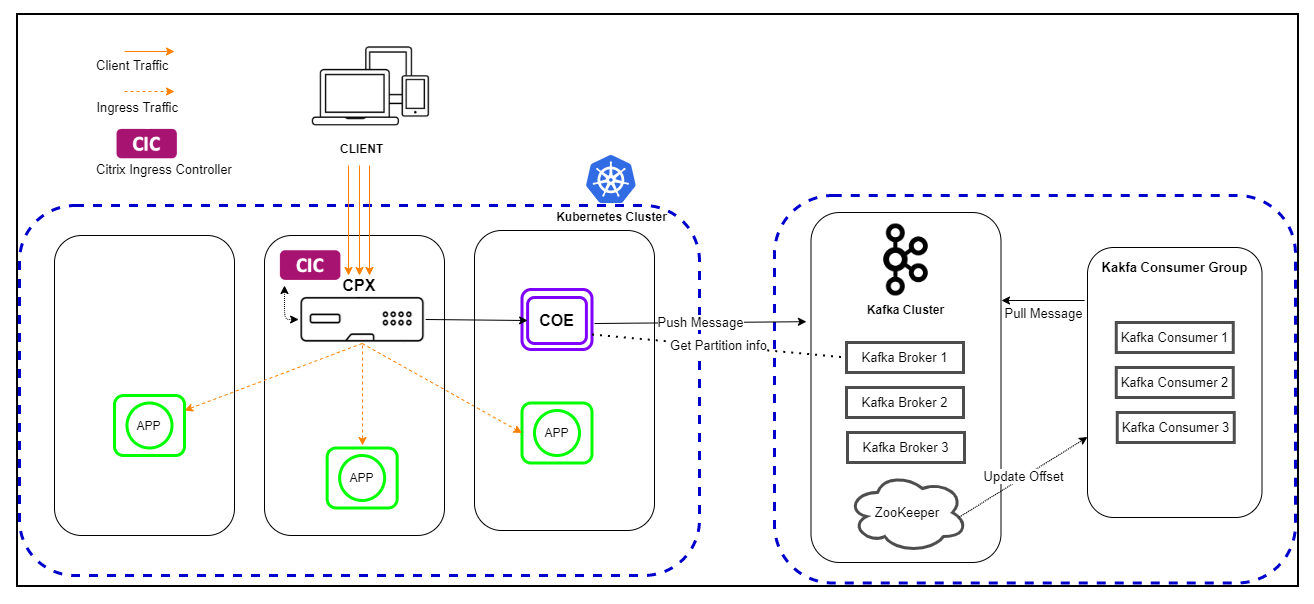

The following diagram illustrates a NetScaler as an Ingress Gateway with NetScaler Observability Exporter as a sidecar. It sends NetScaler application transaction data to Kafka.

Prerequisites

- Ensure that you have a Kubernetes cluster with kube-dns or CoreDNS addon enabled.

- Ensure that the Kafka server is installed and configured.

- You must have a Kafka broker IP or FQDN address.

- You must have defined a Kafka topic HTTP.

- Ensure that you have Kafka Consumer to verify the data.

Note:

In this example scenario, the YAML file is used to deploy NetScaler Observability Exporter in the Kubernetes default namespace. If you want to deploy in a private Kubernetes namespace other than default, edit the YAML file to specify the namespace.

The following is a sample application deployment procedure.

Note:

If you have a pre-deployed web application, skip steps 1 and 2.

- Create a Kubernetes TLS secret from your own certificate (ingress.crt) and key (ingress.key).

  In this example, a secret called ing is created in the default namespace.

      kubectl create secret tls ing --cert=ingress.crt --key=ingress.key

- Access the YAML file from webserver-kafka.yaml to deploy a sample application.

      kubectl create -f webserver-kafka.yaml

- Define the specific parameters that you must import by specifying them in the ingress annotations of the application's YAML file, using the smart annotations in ingress.

      ingress.citrix.com/analyticsprofile: '{"webinsight": {"httpurl":"ENABLED", "httpuseragent":"ENABLED", "httpHost":"ENABLED","httpMethod":"ENABLED","httpContentType":"ENABLED"}}'

  Note:

  The parameters are predefined in the webserver-kafka.yaml file.

  For more information about annotations, see Ingress annotations documentation.
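The annotation value is a JSON string embedded in YAML, so quoting mistakes are easy to make when you edit it by hand. The following is a minimal sketch, assuming you want to generate the value programmatically. The helper name `analytics_profile_annotation` is hypothetical and not part of any NetScaler tooling; the field names are copied from the sample annotation above.

```python
import json

# Field names taken from the sample annotation in this article.
WEBINSIGHT_FIELDS = [
    "httpurl",
    "httpuseragent",
    "httpHost",
    "httpMethod",
    "httpContentType",
]


def analytics_profile_annotation(fields=WEBINSIGHT_FIELDS):
    """Return the analyticsprofile annotation value as a JSON string.

    Hypothetical helper: every listed webinsight field is set to ENABLED.
    """
    profile = {"webinsight": {name: "ENABLED" for name in fields}}
    return json.dumps(profile)


# Render the line as it would appear under metadata.annotations in the ingress YAML.
annotation_line = "ingress.citrix.com/analyticsprofile: '%s'" % analytics_profile_annotation()
print(annotation_line)
```

Wrapping the generated JSON in single quotes keeps the inner double quotes intact when the line is pasted into the YAML file.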

Deploy NetScaler CPX with the NetScaler Observability Exporter support

You can deploy NetScaler CPX as a sidecar with the NetScaler Observability Exporter support. You can edit the NetScaler CPX YAML file, cpx-ingress-kafka.yaml, to include the configuration information that is required for NetScaler Observability Exporter support.

Perform the following steps to deploy a NetScaler CPX instance with the NetScaler Observability Exporter support:

- Download the cpx-ingress-kafka.yaml and the cic-configmap.yaml files.

- Create a ConfigMap with the required key-value pairs and deploy the ConfigMap. You can use the cic-configmap.yaml file that is available, for the specific endpoint, in the directory.

- Modify NetScaler CPX related parameters, as required.

- Edit the cic-configmap.yaml file and specify the following variables for NetScaler Observability Exporter in the NS_ANALYTICS_CONFIG endpoint configuration.

      server: 'coe-kafka.default.svc.cluster.local' # COE service FQDN

- Deploy NetScaler CPX with the NetScaler Observability Exporter support using the following commands:

      kubectl create -f cpx-ingress-kafka.yaml
      kubectl create -f cic-configmap.yaml

Note:

If you have used a different namespace, other than default, then you must change from coe-kafka.default.svc.cluster.local to coe-kafka.<desired-namespace>.svc.cluster.local.
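The service FQDN follows the usual Kubernetes pattern of service name, namespace, and the svc.cluster.local suffix. As a small illustration, the following sketch derives the value for a given namespace. The function name `coe_service_fqdn` is hypothetical, and the sketch assumes the cluster uses the default cluster.local domain, as in the examples in this article.

```python
def coe_service_fqdn(namespace="default", service="coe-kafka"):
    """Build the in-cluster FQDN of the NetScaler Observability Exporter service.

    Assumes the default cluster domain (cluster.local), as used in this article.
    """
    if not namespace or not service:
        raise ValueError("namespace and service must be non-empty")
    return f"{service}.{namespace}.svc.cluster.local"


# The default namespace reproduces the value shown in cic-configmap.yaml.
print(coe_service_fqdn())
# A hypothetical namespace named "monitoring".
print(coe_service_fqdn("monitoring"))
```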

Deploy NetScaler Observability Exporter using YAML

You can deploy NetScaler Observability Exporter using the YAML file. Download the coe-kafka.yaml file that you can use for the NetScaler Observability Exporter deployment.

To deploy NetScaler Observability Exporter using the Kubernetes YAML, run the following command in the Kafka endpoint:

kubectl create -f coe-kafka.yaml

To edit the YAML file for the required changes, perform the following steps:

- Edit the ConfigMap using the following YAML definition:

  Note:

  Ensure that you specify the bootstrap Kafka broker addresses (separated by comma) and the desired Kafka topic.

      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: coe-config-kafka
      data:
        lstreamd_default.conf: |
          {
              "Endpoints": {
                  "KAFKA": {
                      "ServerUrl": "X.X.X.X:9092,Y.Y.Y.Y:9092", #Specify the bootstrap Kafka broker addresses (separated by comma in case multiple bootstrap brokers are to be configured)
                      "KafkaTopic": "HTTP", #Specify the desired kafka topic
                      "RecordType": {
                          "HTTP": "all",
                          "TCP": "all",
                          "SWG": "all",
                          "VPN": "all",
                          "NGS": "all",
                          "ICA": "all",
                          "APPFW": "none",
                          "BOT": "none",
                          "VIDEOOPT": "none",
                          "BURST_CQA": "none",
                          "SLA": "none",
                          "MONGO": "none"
                      },
                      "ProcessAlways": "yes",
                      "FileSizeMax": "40",
                      "ProcessYieldTimeOut": "500",
                      "FileStorageLimit": "1000",
                      "SkipAvro": "no",
                      "AvroCompress": "yes"
                  }
              }
          }

  **Note:** To export transactions in the JSON format, see [exporting transaction in JSON format to Kafka](#support-for-exporting-transactions-in-the-json-format-from-pageadc-observability-exporter-short-to-kafka).

- Specify the host name and IP or FQDN address of the Kafka nodes. Use the following YAML definition for a three node Kafka cluster:

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: coe-kafka
        labels:
          app: coe-kafka
      spec:
        replicas: 1
        selector:
          matchLabels:
            app: coe-kafka
        template:
          metadata:
            name: coe-kafka
            labels:
              app: coe-kafka
          spec:
            hostAliases:
              - ip: "X.X.X.X" # Here we specify kafka node1 Ipaddress
                hostnames:
                  - "kafka-node1"
              - ip: "Y.Y.Y.Y" # Here we specify kafka node2 Ipaddress
                hostnames:
                  - "kafka-node2"
              - ip: "Z.Z.Z.Z" # Here we specify kafka node3 Ipaddress
                hostnames:
                  - "kafka-node3"
            containers:
              - name: coe-kafka
                image: "quay.io/citrix/citrix-observability-exporter:1.3.001"
                imagePullPolicy: Always
                ports:
                  - containerPort: 5557
                    name: lstream
                volumeMounts:
                  - name: lstreamd-config-kafka
                    mountPath: /var/logproxy/lstreamd/conf/lstreamd_default.conf
                    subPath: lstreamd_default.conf
                  - name: core-data
                    mountPath: /var/crash/
            volumes:
              - name: lstreamd-config-kafka
                configMap:
                  name: coe-config-kafka
              - name: core-data
                emptyDir: {}

- If necessary, edit the service configuration for exposing the NetScaler Observability Exporter port to NetScaler using the following YAML definition:

  Citrix-observability-exporter headless service:

      apiVersion: v1
      kind: Service
      metadata:
        name: coe-kafka
        labels:
          app: coe-kafka
      spec:
        clusterIP: None
        ports:
          - port: 5557
            protocol: TCP
        selector:
          app: coe-kafka

  Citrix-observability-exporter NodePort service:

      apiVersion: v1
      kind: Service
      metadata:
        name: coe-kafka-nodeport
        labels:
          app: coe-kafka
      spec:
        type: NodePort
        ports:
          - port: 5557
            protocol: TCP
        selector:
          app: coe-kafka
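If you manage several environments, you might prefer to generate the body of lstreamd_default.conf rather than edit the broker list by hand. The following is a minimal sketch under stated assumptions: the function names `server_url` and `kafka_endpoint_config` are hypothetical, the parameter values are copied from the sample ConfigMap in this section, and the output is strict JSON without the inline `#` comments shown in the sample.

```python
import json

# Record types and values copied from the sample ConfigMap in this article.
DEFAULT_RECORD_TYPES = {
    "HTTP": "all", "TCP": "all", "SWG": "all", "VPN": "all",
    "NGS": "all", "ICA": "all", "APPFW": "none", "BOT": "none",
    "VIDEOOPT": "none", "BURST_CQA": "none", "SLA": "none", "MONGO": "none",
}


def server_url(brokers):
    """Join (host, port) pairs into the comma-separated ServerUrl string."""
    if not brokers:
        raise ValueError("at least one bootstrap broker is required")
    parts = []
    for host, port in brokers:
        port = int(port)
        if not host or not 0 < port < 65536:
            raise ValueError(f"invalid broker address: {host!r}:{port}")
        parts.append(f"{host}:{port}")
    return ",".join(parts)


def kafka_endpoint_config(brokers, topic="HTTP"):
    """Return the JSON body for lstreamd_default.conf (hypothetical helper)."""
    kafka = {
        "ServerUrl": server_url(brokers),
        "KafkaTopic": topic,
        "RecordType": dict(DEFAULT_RECORD_TYPES),
        "ProcessAlways": "yes",
        "FileSizeMax": "40",
        "ProcessYieldTimeOut": "500",
        "FileStorageLimit": "1000",
        "SkipAvro": "no",
        "AvroCompress": "yes",
    }
    return json.dumps({"Endpoints": {"KAFKA": kafka}}, indent=4)


# Example with two placeholder broker addresses from the documentation range.
print(kafka_endpoint_config([("192.0.2.10", 9092), ("192.0.2.11", 9092)]))
```

The printed JSON can be pasted under the `lstreamd_default.conf: |` key of the ConfigMap, indented to match the surrounding YAML.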

Verify the NetScaler Observability Exporter deployment

To verify the NetScaler Observability Exporter deployment, perform the following:

-

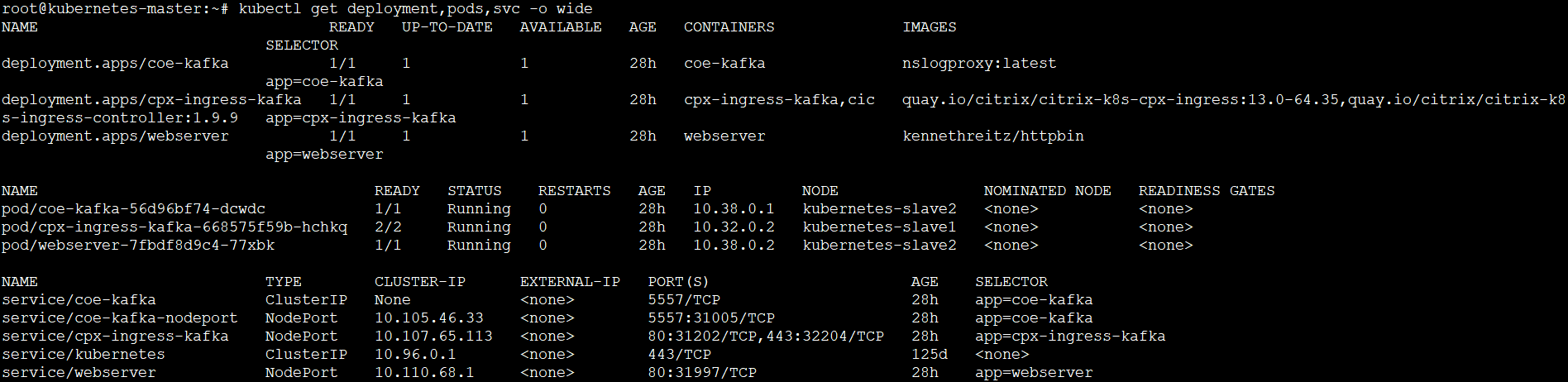

Verify the deployment using the following command:

kubectl get deployment,pods,svc -o wide

-

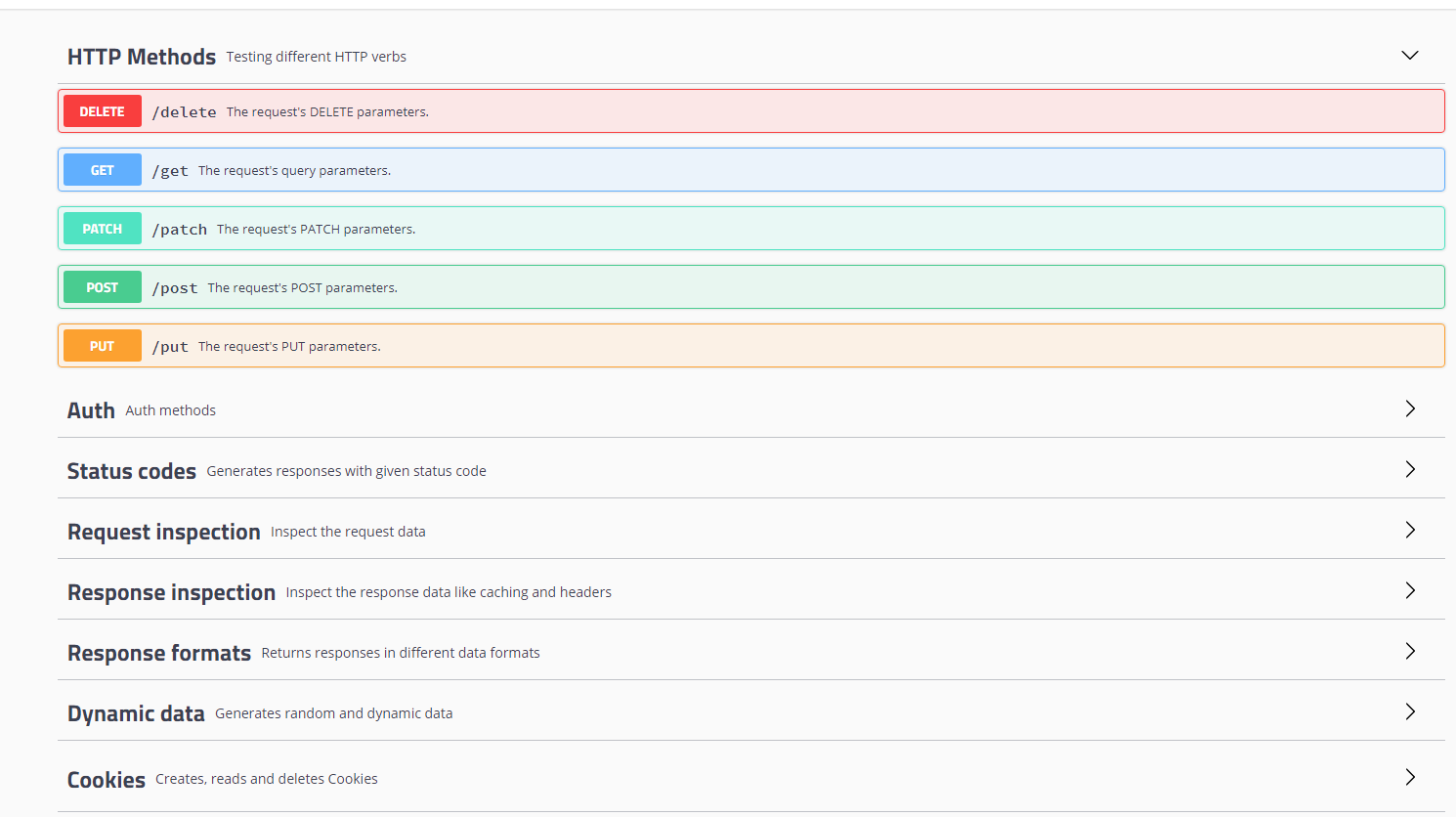

Access the application with a browser using the URL:

https://<kubernetes-node-IP>:<cpx-ingress-kafka nodeport>.For example, from step 1, access <http://10.102.61.56:31202/> in which, `10.102.61.56` is one of the Kubernetes node IPs.

-

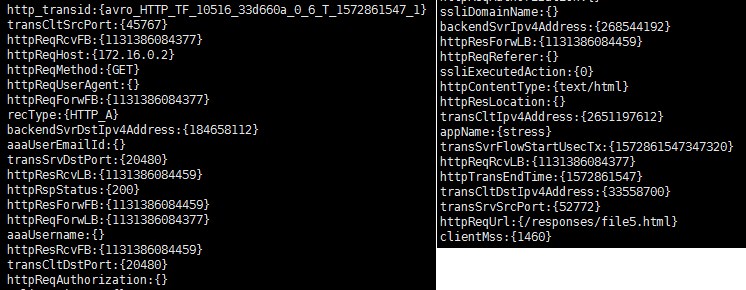

Use Kafka Consumer to view the transaction data. Access kafka Consumer from PythonKafkaConsumer.

The following image shows sample data from Kafka Consumer.
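For a quick check without the referenced consumer, a few lines of Python are enough to read the topic. The following is only a sketch and is not the PythonKafkaConsumer script mentioned above. It assumes the third-party kafka-python package is installed, that the exporter is configured with DataFormat set to JSON (records in the default AVRO format need an Avro decoder instead), and that the topic is HTTP. The function names are hypothetical.

```python
import json


def decode_record(raw_value):
    """Decode one Kafka message value (bytes) as a JSON document.

    Assumes the exporter is configured to send JSON rather than AVRO.
    """
    return json.loads(raw_value.decode("utf-8"))


def consume(bootstrap_servers, topic="HTTP"):
    """Print every record on the topic until interrupted.

    kafka-python is imported lazily so that decode_record can be used
    and tested without the package installed.
    """
    from kafka import KafkaConsumer  # third-party: pip install kafka-python

    consumer = KafkaConsumer(
        topic,
        bootstrap_servers=bootstrap_servers,
        auto_offset_reset="earliest",  # start from the oldest retained record
    )
    for message in consumer:
        print(decode_record(message.value))


# Example call (commented out because it needs a reachable broker):
# consume("X.X.X.X:9092")
```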

Integrate NetScaler with multiple NetScaler Observability Exporter instances manually

You can configure NetScaler Observability Exporter manually in NetScaler. Manual configuration is suitable for NetScaler in MPX and VPX form factors. Citrix recommends deploying NetScaler Observability Exporter in the automated way using the YAML file as described in the preceding sections.

For information about deploying NetScaler Observability Exporter (coe-kafka.yaml) and web application (webserver-kafka.yaml), see the preceding sections.

enable feature appflow

enable ns mode ULFD

add service COE_svc1 <COE IP1> LOGSTREAM <COE PORT1>

add service COE_svc2 <COE IP2> LOGSTREAM <COE PORT2>

add service COE_svc3 <COE IP3> LOGSTREAM <COE PORT3>

add lb vserver COE LOGSTREAM 0.0.0.0 0

bind lb vserver COE COE_svc1

bind lb vserver COE COE_svc2

bind lb vserver COE COE_svc3

add analytics profile web_profile -collectors COE -type webinsight -httpURL ENABLED -httpHost ENABLED -httpMethod ENABLED -httpUserAgent ENABLED -httpContentType ENABLED

add analytics profile tcp_profile -collectors COE -type tcpinsight

bind lb/cs vserver <WEB-PROXY> -analyticsProfile web_profile

bind lb/cs vserver <WEB-PROXY> -analyticsProfile tcp_profile

# To enable metrics push to prometheus

add service metrichost_SVC <IP> HTTP <PORT>

set analytics profile ns_analytics_time_series_profile -collectors metrichost_SVC -metrics ENABLED -outputMode prometheus

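The per-instance commands above follow a regular pattern: one LOGSTREAM service per exporter instance, one LOGSTREAM load balancing virtual server, and one bind per service. The following sketch generates that command list for any number of instances. The function name `collector_commands` and the sample addresses are hypothetical; the command strings mirror the ones shown in this section.

```python
def collector_commands(instances, vserver="COE"):
    """Build the NetScaler CLI commands that register exporter instances.

    instances is a list of (ip, port) pairs, one per exporter instance.
    The commands mirror the manual configuration shown in this article.
    """
    if not instances:
        raise ValueError("at least one exporter instance is required")
    commands = ["enable feature appflow", "enable ns mode ULFD"]
    service_names = []
    for index, (ip, port) in enumerate(instances, start=1):
        name = f"COE_svc{index}"
        commands.append(f"add service {name} {ip} LOGSTREAM {port}")
        service_names.append(name)
    commands.append(f"add lb vserver {vserver} LOGSTREAM 0.0.0.0 0")
    for name in service_names:
        commands.append(f"bind lb vserver {vserver} {name}")
    return commands


# Two placeholder exporter instances listening on the lstream port 5557.
for line in collector_commands([("192.0.2.10", 5557), ("192.0.2.11", 5557)]):
    print(line)
```

The analytics profile and virtual server bindings are left out of the sketch because they do not depend on the number of exporter instances.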

Add NetScaler Observability Exporter using FQDN

enable feature appflow

enable ns mode ULFD

add dns nameserver <KUBE-CoreDNS>

add server COEsvr <FQDN>

add servicegroup COEsvcgrp LOGSTREAM -autoScale DNS

bind servicegroup COEsvcgrp COEsvr <PORT>

add lb vserver COE LOGSTREAM 0.0.0.0 0

bind lb vserver COE COEsvcgrp

add analytics profile web_profile -collectors COE -type webinsight -httpURL ENABLED -httpHost ENABLED -httpMethod ENABLED -httpUserAgent ENABLED -httpContentType ENABLED

add analytics profile tcp_profile -collectors COE -type tcpinsight

bind lb vserver <WEB-VSERVER> -analyticsProfile web_profile

bind lb vserver <WEB-VSERVER> -analyticsProfile tcp_profile

# To enable metrics push to prometheus

add service metrichost_SVC <IP> HTTP <PORT>

set analytics profile ns_analytics_time_series_profile -collectors metrichost_SVC -metrics ENABLED -outputMode prometheus


For information on troubleshooting related to NetScaler Observability Exporter, see NetScaler CPX troubleshooting.

Support for exporting transactions in the JSON format from NetScaler Observability Exporter to Kafka

You can now export transactions from NetScaler Observability Exporter to Kafka in the JSON format, in addition to the AVRO format.

A new parameter, DataFormat, is introduced in the Kafka deployment ConfigMap to support transactions in the JSON format.

This parameter accepts the values AVRO and JSON. To export JSON-based transactions, set the value of DataFormat to JSON in the coe-kafka.yaml file. The default value is AVRO.

The following example shows the YAML file with the data format configured as JSON.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coe-config-kafka
    data:
      lstreamd_default.conf: |
        {
            "Endpoints": {
                "KAFKA": {
                    "DataFormat": "JSON",
                    "ServerUrl": "X.X.X.X:9092,Y.Y.Y.Y:9092", #Specify the bootstrap Kafka broker addresses (separated by comma in case multiple bootstrap brokers are to be configured)
                    "KafkaTopic": "HTTP", #Specify the desired kafka topic
                    "RecordType": {
                        "HTTP": "all",
                        "TCP": "all",
                        "SWG": "all",
                        "VPN": "all",
                        "NGS": "all",
                        "ICA": "all",
                        "APPFW": "none",
                        "BOT": "none",
                        "VIDEOOPT": "none",
                        "BURST_CQA": "none",
                        "SLA": "none",
                        "MONGO": "none"
                    },
                    "TimeSeries": {
                        "EVENTS": "yes",
                        "AUDITLOGS": "yes"
                    }
                }
            }
        }

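Because DataFormat accepts only AVRO and JSON, it can help to validate the value before the ConfigMap is applied. The following sketch sets the parameter in an existing configuration body and rejects any other value. The function name `set_data_format` is hypothetical, and the sketch assumes the configuration text is strict JSON, so the inline `#` comments shown in the sample must be removed before it is parsed.

```python
import json

# Values accepted by the DataFormat parameter, as stated in this article.
ALLOWED_DATA_FORMATS = ("AVRO", "JSON")


def set_data_format(config_text, data_format="AVRO"):
    """Return the configuration body with Endpoints.KAFKA.DataFormat set.

    Hypothetical helper: config_text must be strict JSON (no # comments).
    """
    if data_format not in ALLOWED_DATA_FORMATS:
        raise ValueError(
            f"DataFormat must be one of {ALLOWED_DATA_FORMATS}, got {data_format!r}"
        )
    config = json.loads(config_text)
    config["Endpoints"]["KAFKA"]["DataFormat"] = data_format
    return json.dumps(config, indent=4)


# Minimal example body; a real file also carries ServerUrl, RecordType, and so on.
sample = '{"Endpoints": {"KAFKA": {"KafkaTopic": "HTTP"}}}'
print(set_data_format(sample, "JSON"))
```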
