Weighted fair queuing

On any link, the queuing discipline that matters is the one at the bottleneck gateway, because data does not back up in the non-bottleneck gateways. When a queue holds no pending data, its queuing discipline is irrelevant.

Most IP networks use deep FIFO queues. If traffic arrives faster than the bottleneck speed, the queues fill up and all packets suffer increased queuing times. Sometimes the traffic is divided into a few different classes with separate FIFOs, but the problem remains. A single connection sending too much data can cause large delays, packet losses, or both for all the other connections in its class.

A Citrix SD-WAN WANOP appliance uses weighted fair queuing, which provides a separate queue for each connection. With fair queuing, a connection that sends too fast can overflow only its own queue and has no effect on other connections. And because of lossless flow control, no connection sends too fast in the first place, so queues do not overflow.

The result is that each connection has its traffic metered into the link in a fair manner, and the link as a whole has an optimal bandwidth and latency profile.
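The contrast between a shared FIFO and per-connection queues can be illustrated with a minimal sketch. It assumes equal-size packets and simple round-robin service between connections, which is a simplification for illustration and not a description of the appliance's actual scheduler. The connection names and the `fifo_order` and `fair_order` helpers are hypothetical.

```python
from collections import deque


def fifo_order(arrivals):
    """Serve packets strictly in arrival order from one shared queue."""
    return list(arrivals)


def fair_order(arrivals):
    """Serve one packet per connection in turn (equal-size packets assumed)."""
    queues = {}  # one queue per connection, created on first arrival
    for conn in arrivals:
        queues.setdefault(conn, deque()).append(conn)

    order = []
    while queues:
        # Visit every connection that still has packets, one packet each.
        for conn in list(queues):
            order.append(queues[conn].popleft())
            if not queues[conn]:
                del queues[conn]
    return order


# A bulk connection queues 8 packets just ahead of one interactive packet.
arrivals = ["bulk"] * 8 + ["interactive"]

# Number of packets transmitted before the interactive packet goes out.
fifo_wait = fifo_order(arrivals).index("interactive")
fair_wait = fair_order(arrivals).index("interactive")
print(f"FIFO: {fifo_wait} packets ahead, fair queuing: {fair_wait} packet ahead")
```

In this sketch the interactive packet waits behind all eight bulk packets in the shared FIFO, but behind only one bulk packet when each connection has its own queue.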

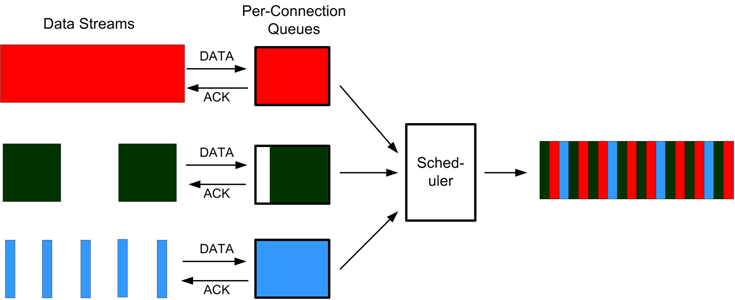

Figure 1 shows the effect of fair queuing. A connection that requires less than its fair share of bandwidth (the bottom connection) gets as much bandwidth as it attempts to use. In addition, it has very little queuing latency. Connections that attempt to use more than their fair share get their fair share, plus any bandwidth left over from connections that use less than their fair share.

Figure 1. Fair Queuing in Action
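The allocation behavior described above corresponds to what is commonly called max-min fairness. The following is a minimal sketch of that calculation, assuming each connection has a fixed bandwidth demand. The `fair_shares` function, the connection names, and the numbers are hypothetical and chosen only for illustration.

```python
def fair_shares(capacity, demands):
    """Max-min fair allocation: small demands are met in full,
    and the leftover bandwidth is split evenly among the rest."""
    alloc = {conn: 0.0 for conn in demands}
    active = set(demands)
    remaining = float(capacity)

    while active and remaining > 1e-9:
        share = remaining / len(active)
        # Connections whose unmet demand fits within an equal share.
        satisfied = {c for c in active if demands[c] - alloc[c] <= share}
        if not satisfied:
            # Everyone wants more than an equal share: split evenly and stop.
            for c in active:
                alloc[c] += share
            remaining = 0.0
            break
        for c in satisfied:
            remaining -= demands[c] - alloc[c]
            alloc[c] = float(demands[c])
        active -= satisfied
    return alloc


# A 10 Mbps link shared by one light connection and two bulk transfers.
shares = fair_shares(10, {"interactive": 1, "bulk_a": 6, "bulk_b": 8})
print(shares)
```

Here the light connection receives the 1 Mbps it asks for, and the two bulk transfers split the remaining 9 Mbps evenly, which is more than the one-third share each would get if all three connections were saturating the link.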

The optimal latency profile provides users of interactive and transactional applications with ideal performance, even when they are sharing the link with multiple bulk transfers. The combination of lossless, transparent flow control and fair queuing enables you to combine all kinds of traffic over the same link safely and transparently.

The difference between weighted fair queuing and unweighted fair queuing is that weighted fair queuing includes the option of giving some traffic a higher priority (weight) than other traffic. Traffic with a weight of two receives twice the bandwidth of traffic with a weight of one. In a Citrix SD-WAN WANOP configuration, the weights are assigned in traffic-shaping policies.
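The effect of weights can be sketched as a proportional split. This example assumes that every connection has enough data pending to use its full share. The `weighted_shares` function and the traffic names are hypothetical and do not reflect the syntax of an actual traffic-shaping policy.

```python
def weighted_shares(capacity, weights):
    """Split link capacity in proportion to each connection's weight,
    assuming every connection has data waiting to send."""
    total_weight = sum(weights.values())
    return {conn: capacity * w / total_weight for conn, w in weights.items()}


# A 9 Mbps link: one traffic type with weight 2, another with weight 1.
result = weighted_shares(9, {"weight_two": 2, "weight_one": 1})
print(result)
```

With these values the weight-two traffic receives 6 Mbps and the weight-one traffic receives 3 Mbps, matching the two-to-one ratio described above.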
