Provision the NetScaler VPX instance with SR-IOV on OpenStack

You can deploy high-performance NetScaler VPX instances that use single-root I/O virtualization (SR-IOV) technology on OpenStack.

Deploying a NetScaler VPX instance that uses SR-IOV on OpenStack takes three steps:

- Enable SR-IOV Virtual Functions (VFs) on the host.

- Configure and make the VFs available to OpenStack.

- Provision the NetScaler VPX on OpenStack.

Prerequisites

Ensure that you:

- Add the Intel 82599 network interface card (NIC) to the host.

- Download and install the latest ixgbe driver from Intel.

- Block list the ixgbevf driver on the host by adding the following entry to the /etc/modprobe.d/blacklist.conf file:

blacklist ixgbevf

Note:

The ixgbe driver version must be 5.0.4 or later.
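The prerequisite checks can be scripted. The following is a minimal sketch, assuming a Linux host with bash, GNU sort (for sort -V), and modinfo. BLACKLIST_FILE, version_ge, blacklist_ixgbevf, and check_ixgbe_version are hypothetical names introduced for illustration; the script only defines functions, so nothing changes on the host until you call them.

```shell
#!/usr/bin/env bash
# Sketch: prerequisite checks for SR-IOV with the Intel ixgbe driver.
# BLACKLIST_FILE is a hypothetical override; it defaults to the path named above.
BLACKLIST_FILE="${BLACKLIST_FILE:-/etc/modprobe.d/blacklist.conf}"
MIN_IXGBE_VERSION="5.0.4"

# Succeed if version $1 is greater than or equal to version $2.
# sort -V orders version strings numerically, so 5.0.10 sorts after 5.0.4.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Append "blacklist ixgbevf" only if it is not already present.
blacklist_ixgbevf() {
    if ! grep -qx 'blacklist ixgbevf' "$BLACKLIST_FILE" 2>/dev/null; then
        echo 'blacklist ixgbevf' >> "$BLACKLIST_FILE"
    fi
}

# Compare the installed ixgbe driver version with the required minimum.
check_ixgbe_version() {
    local installed
    installed="$(modinfo -F version ixgbe 2>/dev/null)"
    if [ -z "$installed" ]; then
        echo "ixgbe driver not found" >&2
        return 1
    fi
    if version_ge "$installed" "$MIN_IXGBE_VERSION"; then
        echo "ixgbe $installed meets the minimum of $MIN_IXGBE_VERSION"
    else
        echo "ixgbe $installed is older than $MIN_IXGBE_VERSION" >&2
        return 1
    fi
}
```

For example, run blacklist_ixgbevf and then check_ixgbe_version as root. A plain string comparison would wrongly rank 5.0.10 below 5.0.4, which is why the comparison goes through sort -V.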

Enable SR-IOV VFs on the host

Enable SR-IOV VFs in one of the following ways, depending on your kernel version:

- If you are using a kernel version earlier than 3.8, add the following entry to the /etc/modprobe.d/ixgbe file and restart the host:

options ixgbe max_vfs=<number_of_VFs>

- If you are using kernel version 3.8 or later, create VFs by using the following command:

echo <number_of_VFs> > /sys/class/net/<device_name>/device/sriov_numvfs

Where:

- number_of_VFs is the number of Virtual Functions that you want to create.

- device_name is the name of the physical function interface, for example enp8s0f0.

Important:

When you create the SR-IOV VFs, do not assign MAC addresses to them.
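The two methods can be wrapped in one helper. The following is a sketch, assuming bash on the host; SYSFS_NET, kernel_supports_sriov_numvfs, legacy_vf_option, and create_vfs are hypothetical names, and SYSFS_NET exists only so that the function can be tried against a scratch directory. The helper writes 0 before the new count because the kernel refuses to change one nonzero VF count directly to another. It does not assign MAC addresses to the VFs.

```shell
#!/usr/bin/env bash
# Sketch: enable SR-IOV VFs with the method that matches the running kernel.
# SYSFS_NET is a hypothetical override; it defaults to the real sysfs tree.
SYSFS_NET="${SYSFS_NET:-/sys/class/net}"

# Succeed if kernel release $1 (for example "3.10.0-957.el7.x86_64") is 3.8 or later.
kernel_supports_sriov_numvfs() {
    local release="${1%%-*}"        # drop the distribution suffix
    local major="${release%%.*}"
    local rest="${release#*.}"
    local minor="${rest%%.*}"
    [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "$minor" -ge 8 ]; }
}

# Kernels earlier than 3.8: print the option line for /etc/modprobe.d/ixgbe.
legacy_vf_option() {
    echo "options ixgbe max_vfs=$1"
}

# Kernels 3.8 or later: create $2 VFs on physical function $1 through sysfs.
create_vfs() {
    local dev="$1" count="$2"
    local numvfs="$SYSFS_NET/$dev/device/sriov_numvfs"
    local totalvfs="$SYSFS_NET/$dev/device/sriov_totalvfs"
    if [ ! -e "$numvfs" ]; then
        echo "$dev does not expose sriov_numvfs" >&2
        return 1
    fi
    # Refuse counts above the maximum that the device reports.
    if [ -r "$totalvfs" ] && [ "$count" -gt "$(cat "$totalvfs")" ]; then
        echo "$dev supports at most $(cat "$totalvfs") VFs" >&2
        return 1
    fi
    # A nonzero VF count cannot be changed to another nonzero value directly,
    # so reset it to 0 first.
    echo 0 > "$numvfs" || return 1
    echo "$count" > "$numvfs"
}
```

For example, if kernel_supports_sriov_numvfs "$(uname -r)" succeeds, create_vfs enp8s0f0 4 creates four VFs on enp8s0f0; otherwise, add the output of legacy_vf_option 4 to /etc/modprobe.d/ixgbe and restart the host.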

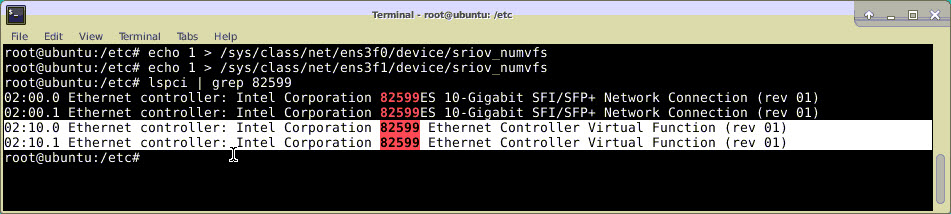

Here is an example of four VFs being created.

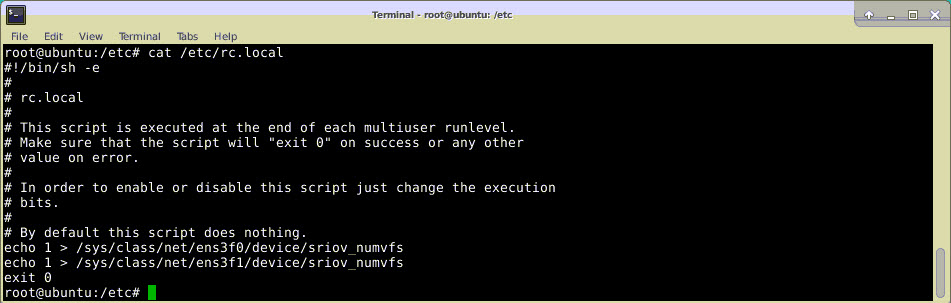

To make the VFs persistent across reboots, add the commands that you used to create the VFs to the rc.local file. Here is an example showing the content of an rc.local file.

For more information, see the Intel SR-IOV Configuration Guide.
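A minimal sketch of the persistence step, assuming a Linux host that runs /etc/rc.local at boot (on some distributions the file must be executable for that to happen). RC_LOCAL and persist_vfs are hypothetical names. The function adds the sysfs command once and keeps a trailing exit 0, if present, as the last line, because commands placed after exit 0 never run.

```shell
#!/usr/bin/env bash
# Sketch: persist VF creation by adding the sysfs command to rc.local.
# RC_LOCAL is a hypothetical override; it defaults to /etc/rc.local.
RC_LOCAL="${RC_LOCAL:-/etc/rc.local}"

# Add "echo <count> > /sys/class/net/<dev>/device/sriov_numvfs" once.
persist_vfs() {
    local dev="$1" count="$2"
    local line="echo $count > /sys/class/net/$dev/device/sriov_numvfs"
    # Create a minimal shell script if rc.local does not exist yet.
    [ -f "$RC_LOCAL" ] || printf '#!/bin/sh\n' > "$RC_LOCAL"
    if grep -qxF "$line" "$RC_LOCAL"; then
        return 0
    fi
    if [ "$(tail -n 1 "$RC_LOCAL")" = "exit 0" ]; then
        # Insert the command before the final "exit 0".
        local body
        body="$(head -n -1 "$RC_LOCAL")"
        printf '%s\n%s\nexit 0\n' "$body" "$line" > "$RC_LOCAL"
    else
        printf '%s\n' "$line" >> "$RC_LOCAL"
    fi
    chmod +x "$RC_LOCAL"
}
```

For example, persist_vfs enp8s0f0 4 adds the command that creates four VFs on enp8s0f0. The sketch does not remove an older line with a different VF count; edit the file by hand if you change the count.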

Configure and make the VFs available to OpenStack

To configure SR-IOV on OpenStack, follow the steps at https://wiki.openstack.org/wiki/SR-IOV-Passthrough-For-Networking

Provision the NetScaler VPX instance on OpenStack

You can provision a NetScaler VPX instance in an OpenStack environment by using the OpenStack CLI.

Provisioning a VPX instance can optionally use data from the config drive. The config drive is a special configuration drive that attaches to the instance when it boots. You can use it to pass networking configuration, such as the management IP address, network mask, and default gateway, to the instance before you configure the network settings for the instance.

When OpenStack provisions a VPX instance, the instance first detects that it is booting in an OpenStack environment by reading a specific BIOS string (OpenStack Foundation). For Red Hat Linux distributions, the string is stored in /etc/nova/release. This is a standard mechanism that is available in all OpenStack implementations based on the KVM hypervisor. The config drive must have a specific OpenStack label. If the config drive is detected, the instance attempts to read the following information from the file specified in the nova boot command. In the procedures below, the file is called userdata.txt.

- Management IP address

- Network mask

- Default gateway

After the parameters are read successfully, they are populated in the NetScaler stack, which lets you manage the instance remotely. If the parameters cannot be read or the config drive is not available, the instance falls back to the following default behavior:

- The instance attempts to retrieve the IP address information from DHCP.

- If DHCP fails or times out, the instance comes up with the default network configuration (192.168.100.1/16).

Provision the NetScaler VPX instance on OpenStack through CLI

You can provision a VPX instance in an OpenStack environment by using the OpenStack CLI. Here is a summary of the steps to provision a NetScaler VPX instance on OpenStack:

- Extracting the .qcow2 file from the .tgz file

- Building an OpenStack image from the .qcow2 image

- Provisioning a VPX instance

To provision a VPX instance in an OpenStack environment, do the following steps.

-

Extract the.

qcow2file from the.tqzfile by typing the command:tar xvzf <TAR file> tar xvzf NSVPX-KVM-12.0-26.2_nc.tgz NSVPX-KVM.xml NSVPX-KVM-12.0-26.2_nc.qcow2 -

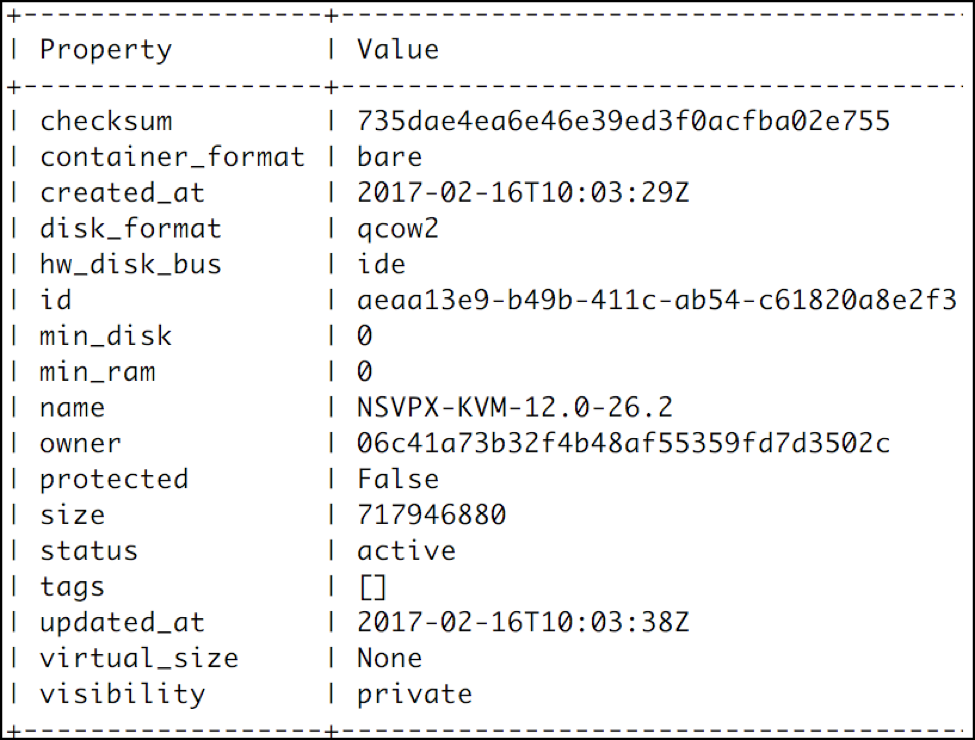

Build an OpenStack image using the

.qcoz2file extracted in step 1 by typing the following command:glance image-create --name="<name of the OpenStack image>" --property hw_disk_bus=ide --is-public=true --container-format=bare --disk-format=qcow2< <name of the qcow2 file> glance image-create --name="NS-VPX-12-0-26-2" --property hw_disk_bus=ide --is-public= true --container-format=bare --disk-format=qcow2< NSVPX-KVM-12.0-26.2_nc.qcow2The following illustration provides a sample output for the glance image-create command.

-

After an OpenStack image is created, provision the NetScaler VPX instance.

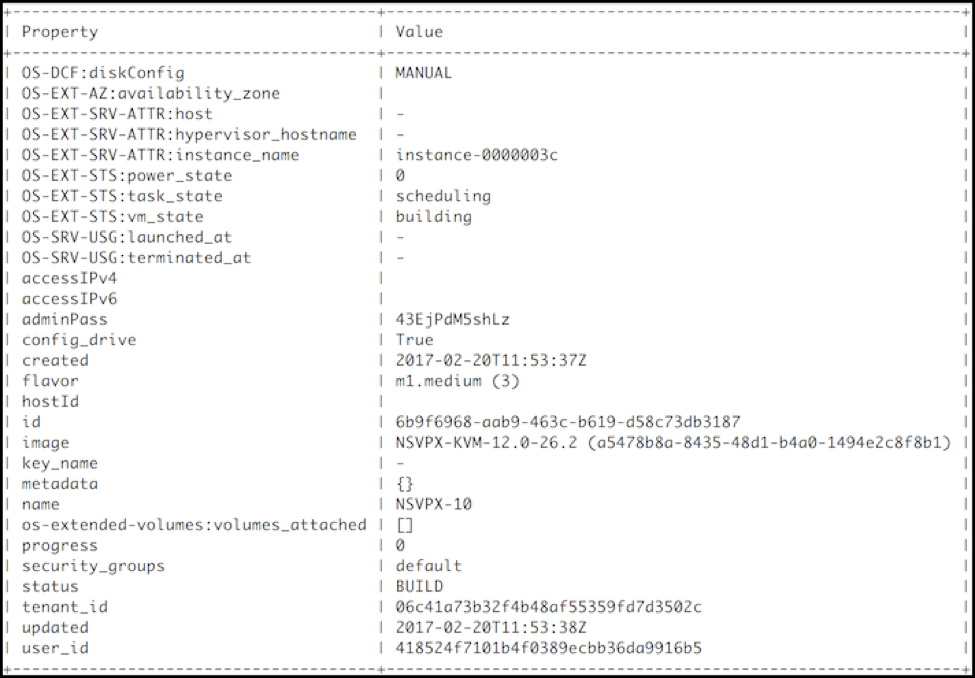

nova boot --image NSVPX-KVM-12.0-26.2 --config-drive=true --user-data ./userdata.txt --flavor m1.medium --nic net-id=3b258725-eaae-455e-a5de-371d6d1f349f --nic port-id=218ba819-9f55-4991-adb6-02086a6bdee2 NSVPX-10

In the preceding command:

- userdata.txt is the file that contains details such as the IP address, netmask, and default gateway for the VPX instance. You can customize the user data file.

- NSVPX-KVM-12.0-26.2 is the name of the OpenStack image of the virtual appliance that you want to provision.

- --nic port-id=218ba819-9f55-4991-adb6-02086a6bdee2 specifies the OpenStack port that represents the SR-IOV VF.

- NSVPX-10 is the name of the new instance.

The following illustration gives a sample output of the nova boot command.
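The provisioning steps can also be scripted. The following sketch assumes the unified openstack command-line client (the procedure above uses the older glance and nova clients) and bash with GNU tar on the host. The function names and the DRY_RUN switch are hypothetical, and creating the SR-IOV port with --vnic-type direct before booting is an assumption taken from the OpenStack SR-IOV networking guide rather than from this procedure. With DRY_RUN=1, the OpenStack commands are printed instead of executed.

```shell
#!/usr/bin/env bash
# Sketch: extract, register, and boot a VPX image with the unified "openstack" client.
# DRY_RUN=1 (hypothetical switch) prints OpenStack commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Extract only the .qcow2 disk from the VPX bundle $1 into directory $2; print its path.
extract_qcow2() {
    local bundle="$1" dest="${2:-.}" image
    image="$(tar tzf "$bundle" | grep '\.qcow2$' | head -n 1)"
    if [ -z "$image" ]; then
        echo "no .qcow2 file found in $bundle" >&2
        return 1
    fi
    tar xzf "$bundle" -C "$dest" "$image" || return 1
    printf '%s\n' "$dest/$image"
}

# Register the disk as a public qcow2 image that uses an IDE disk bus.
create_vpx_image() {
    local name="$1" qcow2="$2"
    run openstack image create --disk-format qcow2 --container-format bare \
        --public --property hw_disk_bus=ide --file "$qcow2" "$name"
}

# Create a port with vnic-type "direct" (backed by a VF) on network $1; print its ID.
create_sriov_port() {
    local network="$1" name="$2"
    run openstack port create --network "$network" --vnic-type direct \
        -f value -c id "$name"
}

# Boot the VPX with a config drive, a management NIC, and the SR-IOV port.
boot_vpx() {
    local image="$1" flavor="$2" mgmt_net="$3" vf_port="$4" name="$5"
    local userdata="${6:-./userdata.txt}"
    run openstack server create --image "$image" --flavor "$flavor" \
        --config-drive True --user-data "$userdata" \
        --nic net-id="$mgmt_net" --nic port-id="$vf_port" "$name"
}
```

For example, qcow2="$(extract_qcow2 NSVPX-KVM-12.0-26.2_nc.tgz)", then create_vpx_image NS-VPX-12-0-26-2 "$qcow2", then port_id="$(create_sriov_port <SR-IOV network> vpx-vf-port)", and finally boot_vpx NS-VPX-12-0-26-2 m1.medium <management net-id> "$port_id" NSVPX-10. The port name vpx-vf-port is illustrative only. Extracting just the .qcow2 member keeps the .xml file, which OpenStack does not use, out of the working directory.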

The following example shows a sample userdata.txt file. The values within the <PropertySection></PropertySection> tags are user configurable and hold information such as the IP address, netmask, and default gateway.

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<Environment xmlns:oe="http://schemas.dmtf.org/ovf/environment/1" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" oe:id="" xmlns="http://schemas.dmtf.org/ovf/environment/1">
  <PlatformSection>
    <Kind>NOVA</Kind>
    <Version>2013.1</Version>
    <Vendor>Openstack</Vendor>
    <Locale>en</Locale>
  </PlatformSection>
  <PropertySection>
    <Property oe:key="com.citrix.netscaler.ovf.version" oe:value="1.0"/>
    <Property oe:key="com.citrix.netscaler.platform" oe:value="vpx"/>
    <Property oe:key="com.citrix.netscaler.orch_env" oe:value="openstack-orch-env"/>
    <Property oe:key="com.citrix.netscaler.mgmt.ip" oe:value="10.1.0.100"/>
    <Property oe:key="com.citrix.netscaler.mgmt.netmask" oe:value="255.255.0.0"/>
    <Property oe:key="com.citrix.netscaler.mgmt.gateway" oe:value="10.1.0.1"/>
  </PropertySection>
</Environment>
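The user data file can be generated from the three management values. The following sketch, assuming bash on the host, writes the same document as the sample userdata.txt and first checks that the default gateway lies in the management subnet, because a gateway outside that subnet is unreachable from the management interface. ip_to_int, same_subnet, and write_userdata are hypothetical names, and addresses are assumed to have no leading zeros (bash arithmetic would read 010 as octal).

```shell
#!/usr/bin/env bash
# Sketch: generate userdata.txt for the VPX config drive.

# Convert a dotted-quad IPv4 address (no leading zeros) to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if addresses $1 and $3 fall in the same subnet under netmask $2.
same_subnet() {
    local ip mask gw
    ip="$(ip_to_int "$1")"; mask="$(ip_to_int "$2")"; gw="$(ip_to_int "$3")"
    [ $(( ip & mask )) -eq $(( gw & mask )) ]
}

# Write the OVF environment document with the management IP, netmask, and gateway.
write_userdata() {
    local ip="$1" mask="$2" gw="$3" out="${4:-userdata.txt}"
    if ! same_subnet "$ip" "$mask" "$gw"; then
        echo "gateway $gw is not in the subnet of $ip/$mask" >&2
        return 1
    fi
    cat > "$out" <<EOF
<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<Environment xmlns:oe="http://schemas.dmtf.org/ovf/environment/1"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    oe:id="" xmlns="http://schemas.dmtf.org/ovf/environment/1">
  <PlatformSection>
    <Kind>NOVA</Kind>
    <Version>2013.1</Version>
    <Vendor>Openstack</Vendor>
    <Locale>en</Locale>
  </PlatformSection>
  <PropertySection>
    <Property oe:key="com.citrix.netscaler.ovf.version" oe:value="1.0"/>
    <Property oe:key="com.citrix.netscaler.platform" oe:value="vpx"/>
    <Property oe:key="com.citrix.netscaler.orch_env" oe:value="openstack-orch-env"/>
    <Property oe:key="com.citrix.netscaler.mgmt.ip" oe:value="$ip"/>
    <Property oe:key="com.citrix.netscaler.mgmt.netmask" oe:value="$mask"/>
    <Property oe:key="com.citrix.netscaler.mgmt.gateway" oe:value="$gw"/>
  </PropertySection>
</Environment>
EOF
}
```

For example, write_userdata 10.1.0.100 255.255.0.0 10.1.0.1 reproduces the values in the sample file, while a gateway of 10.2.0.1 with the same netmask is rejected.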

Additional supported configurations: Creating and deleting VLANs on SR-IOV VFs from the host

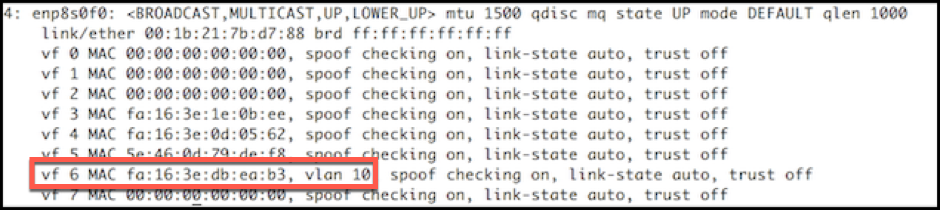

Type the following command to create a VLAN on the SR-IOV VF:

ip link set enp8s0f0 vf 6 vlan 10

In the preceding command, enp8s0f0 is the name of the physical function, 6 is the index of the VF, and 10 is the VLAN ID.

Example: VLAN 10, created on vf 6

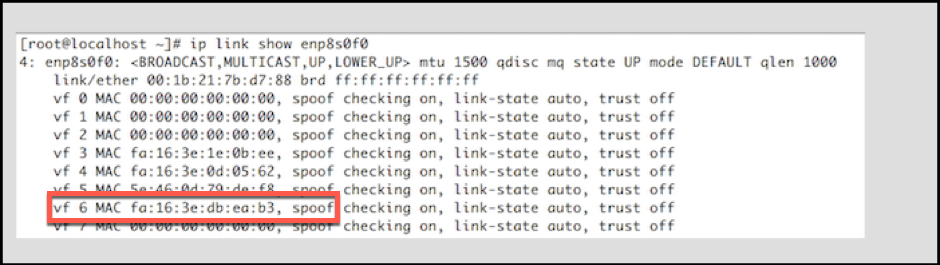

Type the following command to delete a VLAN from the SR-IOV VF. Setting the VLAN ID to 0 removes the VLAN tag:

ip link set enp8s0f0 vf 6 vlan 0

Example: VLAN 10, removed from vf 6
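The create and delete operations can be wrapped with the ip link set subcommand. The following sketch assumes the iproute2 ip tool on the host; set_vf_vlan, clear_vf_vlan, show_vfs, and the DRY_RUN switch are hypothetical names, and DRY_RUN=1 prints each command instead of running it. VLAN IDs outside 0 to 4095 are rejected before ip is called.

```shell
#!/usr/bin/env bash
# Sketch: set or clear the VLAN tag of an SR-IOV VF from the host.
# DRY_RUN=1 (hypothetical switch) prints the ip commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Tag VF $2 of physical function $1 with VLAN ID $3; an ID of 0 removes the tag.
set_vf_vlan() {
    local pf="$1" vf="$2" vlan="$3"
    if ! [[ "$vlan" =~ ^[0-9]+$ ]] || [ "$vlan" -gt 4095 ]; then
        echo "invalid VLAN ID: $vlan (expected 0-4095)" >&2
        return 1
    fi
    run ip link set "$pf" vf "$vf" vlan "$vlan"
}

# Remove the VLAN tag from VF $2 of physical function $1.
clear_vf_vlan() {
    set_vf_vlan "$1" "$2" 0
}

# Show the physical function; the output includes one line per VF.
show_vfs() {
    run ip link show "$1"
}
```

For example, set_vf_vlan enp8s0f0 6 10 followed by show_vfs enp8s0f0 lets you confirm the tag on the vf 6 line, and clear_vf_vlan enp8s0f0 6 removes it again.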

These steps complete the procedure for deploying a NetScaler VPX instance that uses SR-IOV technology on OpenStack.