Configure a NetScaler VPX on the KVM hypervisor to use Intel QAT for SSL acceleration in SR-IOV mode

The NetScaler VPX instance on the Linux KVM hypervisor can use Intel QuickAssist Technology (QAT) to accelerate NetScaler SSL performance. With Intel QAT, all latency-heavy crypto processing can be offloaded to the chip, freeing up one or more host CPUs for other tasks.

Previously, all NetScaler® data path crypto processing was done in software using host vCPUs.

Note:

Currently, NetScaler VPX supports only the C62x chip model under Intel QAT family. This feature is supported starting from NetScaler release 14.1 build 8.50.

Prerequisites

-

The Linux host is equipped with an Intel QAT C62x chip, either integrated directly into the motherboard or added on an external PCI card.

Intel QAT C62x Series Models: C625, C626, C627, C628. Only these C62x models include Public Key Encryption (PKE) capability. Other C62x variants do not support PKE.

-

The NetScaler VPX meets the Linux KVM hardware requirements. For more information, see Install a NetScaler VPX instance on Linux KVM platform.

Limitations

There’s no provision to reserve crypto units or bandwidth for individual VMs. All the available crypto units of any Intel QAT hardware are shared across all VMs using the QAT hardware.

Set up the host environment for using Intel QAT

-

Download and install the Intel-provided driver for the C62x series (QAT) chip model in the Linux host. For more information on the Intel package downloads and installation instructions, see Intel QuickAssist Technology Driver for Linux. A readme file is available as part of the download package. This file provides instructions about compiling and installing the package in the host.

After you download and install the driver, perform the following sanity checks:

-

Note the number of C62x chips. Each C62x chip has up to 3 PCIe endpoints.

-

Make sure that all the endpoints are UP. Run the

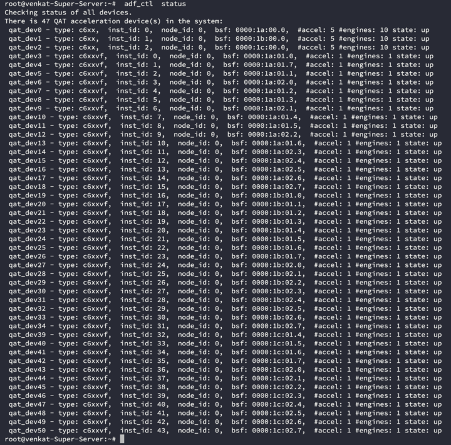

adf_ctl statuscommand to display the status of all the PF endpoints (up to 3).root@Super-Server:~# adf_ctl status Checking status of all devices. There is 51 QAT acceleration device(s) in the system qat_dev0 - type: c6xx, inst_id: 0, node_id: 0, bsf: 0000:1a:00.0, #accel: 5 #engines: 10 state: up qat_dev1 - type: c6xx, inst_id: 1, node_id: 0, bsf: 0000:1b:00.0, #accel: 5 #engines: 10 state: up qat_dev2 - type: c6xx, inst_id: 2, node_id: 0, bsf: 0000:1c:00.0, #accel: 5 #engines: 10 state: up <!--NeedCopy--> -

Enable SR-IOV (VF support) for all QAT endpoints.

    root@Super-Server:~# echo 1 > /sys/bus/pci/devices/0000\:1a\:00.0/sriov_numvfs
    root@Super-Server:~# echo 1 > /sys/bus/pci/devices/0000\:1b\:00.0/sriov_numvfs
    root@Super-Server:~# echo 1 > /sys/bus/pci/devices/0000\:1c\:00.0/sriov_numvfs

- Make sure that all VFs are displayed (16 VFs per endpoint, totaling 48 VFs).

-

Run the adf_ctl status command to verify that all the PF endpoints (up to 3) and the VFs of each Intel QAT chip are UP. In this example, the system has only one C62x chip, so adf_ctl reports 51 devices in total (3 PF endpoints + 48 VFs).
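The status check above can be scripted rather than read by eye. The following is a minimal sketch, assuming the `adf_ctl status` output keeps the line layout shown earlier (one `qat_devN` line per device, ending in `state: up` when healthy). The function name `qat_status_summary` is hypothetical and not part of the Intel package.

```shell
#!/bin/sh
# Summarize `adf_ctl status` output read from stdin.
# Prints "total=<n> up=<n> not_up=<n>" and returns non-zero when no device
# is listed or when any listed device is not in the "up" state.
# Assumption: each device line starts with "qat_devN" and ends with
# "state: <value>", as in the sample output in this article.
qat_status_summary() {
    awk '
        /^[[:space:]]*qat_dev[0-9]+/ {
            total++
            if ($0 ~ /state:[[:space:]]*up[[:space:]]*$/) up++
        }
        END {
            printf "total=%d up=%d not_up=%d\n", total, up, total - up
            exit ((total > 0 && up == total) ? 0 : 1)
        }
    '
}

# Usage on a host where the Intel driver is installed:
#   adf_ctl status | qat_status_summary
```

On the single-chip system in this example, a healthy host would be expected to report a total of 51 once all VFs are enabled.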


- Enable SR-IOV on the Linux host.

- Create virtual machines. When creating a VM, assign the appropriate number of PCI devices to meet the performance requirements.

Note:

Each C62x (QAT) chip can have up to three separate PCI endpoints. Each endpoint is a logical collection of VFs, and shares the bandwidth equally with other PCI endpoints of the chip. Each endpoint can have up to 16 VFs that show up as 16 PCI devices. Add these devices to the VM to do the crypto acceleration using the QAT chip.
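Enabling VFs on every endpoint and confirming how many VFs appeared can be wrapped in small helper functions. The sketch below assumes the standard Linux SR-IOV sysfs entries (`sriov_numvfs` and the `virtfnN` links under each PF). The function names and the `SYSFS_PCI` override are hypothetical and exist only so the logic can be tried against a scratch directory before running it as root on a real host.

```shell
#!/bin/sh
# SYSFS_PCI can be overridden for dry runs; by default it points at sysfs.
SYSFS_PCI="${SYSFS_PCI:-/sys/bus/pci/devices}"

# enable_qat_vfs BDF... : write 1 to sriov_numvfs for each PF endpoint,
# mirroring the echo commands in this article. Endpoints that already
# report a non-zero value are left untouched.
enable_qat_vfs() {
    for bdf in "$@"; do
        f="$SYSFS_PCI/$bdf/sriov_numvfs"
        if [ ! -w "$f" ]; then
            echo "skip $bdf: $f not writable" >&2
            continue
        fi
        cur=$(cat "$f")
        if [ "$cur" -eq 0 ]; then
            echo 1 > "$f"
        fi
        echo "$bdf sriov_numvfs=$(cat "$f")"
    done
}

# count_vfs BDF : count the virtfn* entries present under a PF endpoint.
count_vfs() {
    n=0
    for l in "$SYSFS_PCI/$1"/virtfn*; do
        [ -e "$l" ] || [ -L "$l" ] || continue
        n=$((n + 1))
    done
    echo "$n"
}

# Usage with the endpoint addresses from this article (run as root):
#   enable_qat_vfs 0000:1a:00.0 0000:1b:00.0 0000:1c:00.0
#   count_vfs 0000:1a:00.0
```

With the endpoints described in the note above, each `count_vfs` call would be expected to print 16 once SR-IOV is enabled.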

Points to note

- If the VM crypto requirement is to use more than one QAT PCI endpoint/chip, we recommend that you pick the corresponding PCI devices/VFs in a round-robin fashion to have a symmetric distribution.

-

We recommend that the number of PCI devices selected is equal to the number of licensed vCPUs (without including the management vCPU count). Adding more PCI devices than the available number of vCPUs does not necessarily improve the performance.

Example:

Consider a Linux host with one Intel C62x chip that has 3 endpoints. While provisioning a VM with 6 vCPUs, pick 2 VFs from each endpoint, and assign them to the VM. This assignment ensures an effective and equal distribution of crypto units for the VM. From the total available vCPUs, by default, one vCPU is reserved for the management plane, and the rest of the vCPUs are available for the data plane PEs.
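The round-robin selection in the example above can be sketched as a small filter. It reads "endpoint VF" address pairs and prints the requested number of VFs, taking one from each endpoint in turn. The function name `pick_vfs_round_robin` and the VF addresses in the usage comment are illustrative assumptions; real VF addresses should be read from the host.

```shell
#!/bin/sh
# pick_vfs_round_robin N : read "endpoint vf" pairs on stdin and print N VF
# addresses, one per line, cycling through the endpoints in first-seen order.
# Returns non-zero if fewer than N VFs are available.
pick_vfs_round_robin() {
    awk -v want="$1" '
        NF >= 2 {
            if (!($1 in cnt)) order[++neps] = $1   # remember first-seen order
            vf[$1, ++cnt[$1]] = $2
        }
        END {
            picked = 0
            for (round = 1; picked < want; round++) {
                progress = 0
                for (i = 1; i <= neps && picked < want; i++) {
                    ep = order[i]
                    if (round <= cnt[ep]) {
                        print vf[ep, round]
                        picked++
                        progress = 1
                    }
                }
                if (!progress) exit 1   # fewer VFs available than requested
            }
        }
    '
}

# One way to build the input from sysfs (PF addresses from this article):
#   for pf in 0000:1a:00.0 0000:1b:00.0 0000:1c:00.0; do
#       for l in /sys/bus/pci/devices/$pf/virtfn*; do
#           echo "$pf $(basename "$(readlink "$l")")"
#       done
#   done | pick_vfs_round_robin 6
```

Requesting 6 VFs from 3 endpoints yields 2 VFs per endpoint, which matches the distribution in the example.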

Assign QAT VFs to NetScaler VPX deployed on Linux KVM hypervisor

-

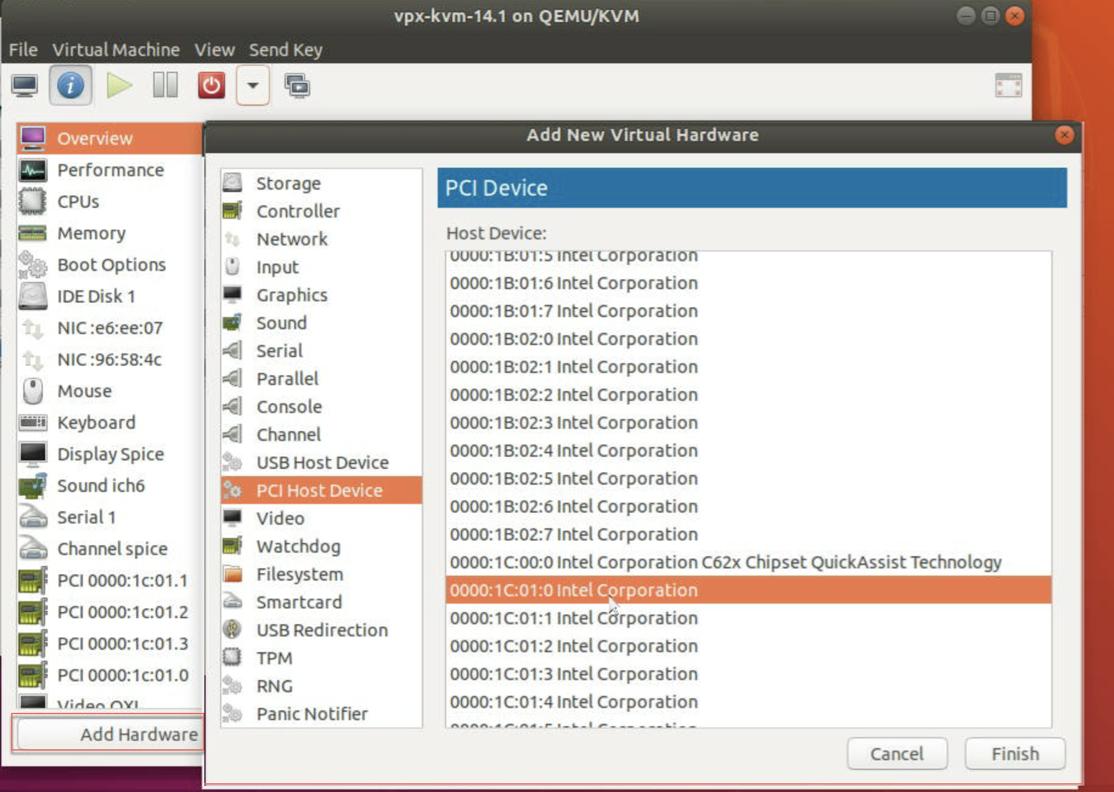

In the Linux KVM virtual machine manager, make sure the VM (NetScaler VPX) is powered off.

-

Navigate to Add hardware > PCI Host Device.

-

Assign Intel QAT VF to the PCI device.

-

Click Finish.

-

Repeat the preceding steps to assign one or more Intel QAT VFs to the NetScaler VPX instance, up to a limit of one less than the total number of vCPUs, because one vCPU is reserved for the management process.

Number of QAT VFs per VM = Number of vCPUs - 1

-

Power on the VM.

-

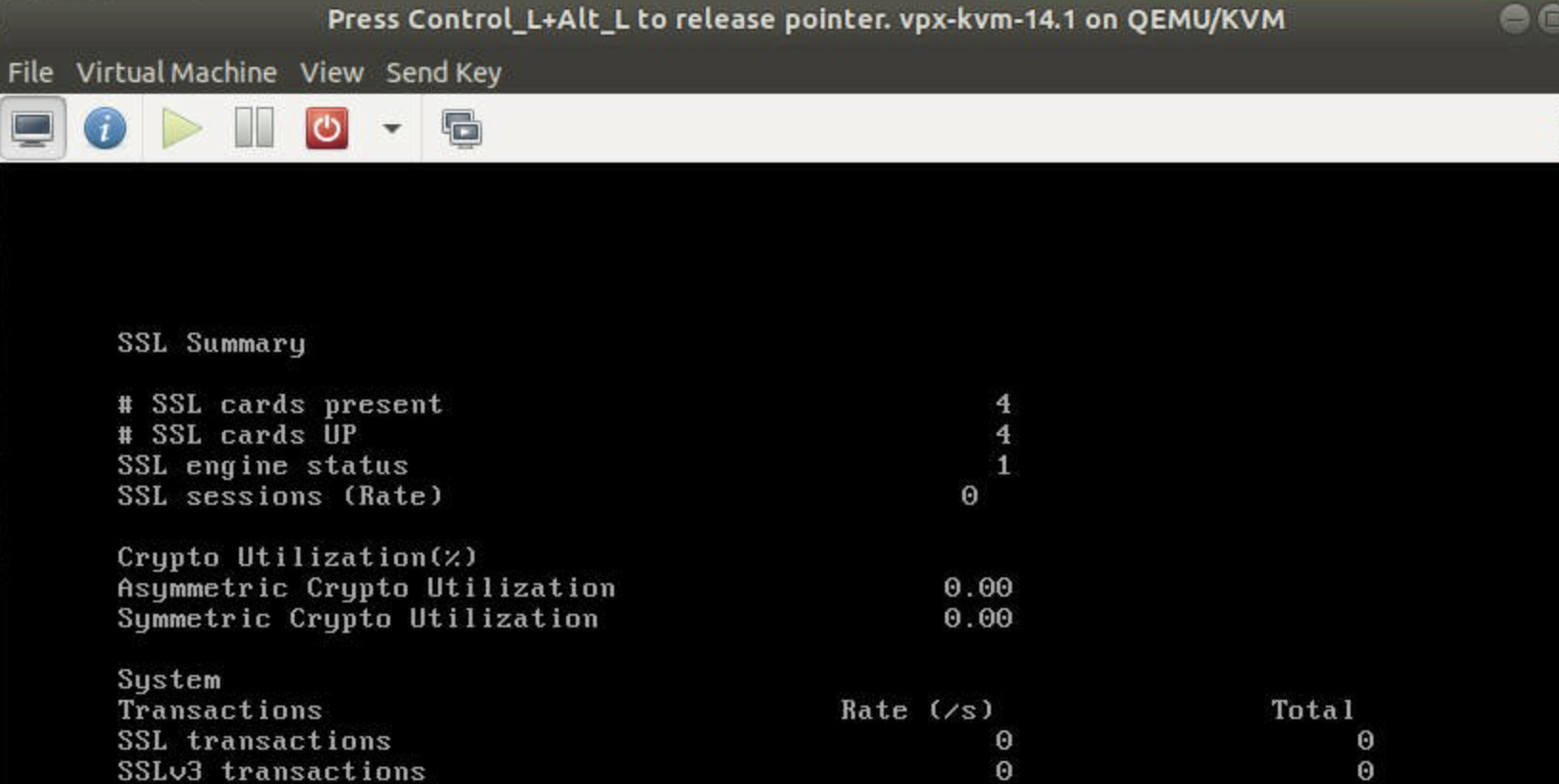

Run the stat ssl command in the NetScaler CLI to display the SSL summary, and verify the SSL cards after assigning QAT VFs to NetScaler VPX.

In this example, we have used 5 vCPUs, which implies 4 packet engines (PEs).
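The steps above use the virtual machine manager GUI. As a hedged alternative, the same assignment can be expressed as libvirt `<hostdev>` XML and attached with `virsh attach-device` while the VM is powered off. The sketch below only computes the VF limit and prints the XML. The function names are hypothetical, and the VF address and domain name in the usage comment are placeholders, not values from this deployment.

```shell
#!/bin/sh
# max_qat_vfs VCPUS : VF limit from this article (number of vCPUs - 1).
max_qat_vfs() {
    echo $(( $1 - 1 ))
}

# hostdev_xml BDF : print a libvirt <hostdev> element for a PCI address
# written as DDDD:BB:SS.F (for example, the address of a QAT VF).
hostdev_xml() {
    bdf=$1
    domain=${bdf%%:*}          # DDDD
    rest=${bdf#*:}             # BB:SS.F
    bus=${rest%%:*}            # BB
    devfn=${rest#*:}           # SS.F
    slot=${devfn%%.*}          # SS
    func=${devfn#*.}           # F
    cat <<EOF
<hostdev mode='subsystem' type='pci' managed='yes'>
  <source>
    <address domain='0x$domain' bus='0x$bus' slot='0x$slot' function='0x$func'/>
  </source>
</hostdev>
EOF
}

# Usage (placeholder VF address and domain name):
#   hostdev_xml 0000:1a:01.0 > qat-vf.xml
#   virsh attach-device <vpx-domain> qat-vf.xml --config
```

For the 5-vCPU example above, `max_qat_vfs 5` prints 4, one VF per packet engine.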

About the deployment

This deployment was tested with the following component specifications:

- NetScaler VPX Version and Build: 14.1–8.50

- Ubuntu Version: 18.04, Kernel 5.4.0-146

- Intel C62x QAT driver version for Linux: L.4.21.0-00001