Support matrix and usage guidelines

This document lists the different hypervisors and features supported on a Citrix® ADC VPX instance. It also describes their usage guidelines and limitations.

Table 1. VPX instance on Citrix Hypervisor™

| Citrix Hypervisor version | SysID | VPX models |
|---|---|---|
| 7.1 | 450000 | VPX 10, VPX 25, VPX 200, VPX 1000, VPX 3000, VPX 5000, VPX 8000, VPX 10G, VPX 15G, VPX 25G, VPX 40G |

Table 2. VPX instance on VMware ESXi server

The following VPX models with SysID 450010 support the VMware ESXi versions listed in the table.

VPX models: VPX 10, VPX 25, VPX 200, VPX 1000, VPX 3000, VPX 5000, VPX 8000, VPX 10G, VPX 15G, VPX 25G, VPX 40G, and VPX 100G.

| ESXi version | ESXi release date (YYYY/MM/DD) | ESXi build number | Citrix ADC VPX version |
|---|---|---|---|
| ESXi 7.0 update 3m | 2023/05/03 | 21686933 | 12.1-65.x and higher builds |
| ESXi 7.0 update 3f | 2022/07/12 | 20036589 | 12.1-65.x and higher builds |
| ESXi 7.0 update 3d | 2022/03/29 | 19482537 | 12.1-65.x and higher builds |
| ESXi 7.0 update 2d | 2021/09/14 | 18538813 | 12.1-63.x and higher builds |
| ESXi 7.0 update 2a | 2021/04/29 | 17867351 | 12.1-62.x and higher builds |
| ESXi 6.7 P04 | 2020/11/19 | 17167734 | 12.1-55.x and higher builds |
| ESXi 6.7 P03 | 2020/08/20 | 16713306 | 12.1-55.x and higher builds |
| ESXi 6.5 GA | 2016/11/15 | 4564106 | 12.1-55.x and higher builds |
| ESXi 6.5 U1g | 2018/03/20 | 7967591 | 12.1-55.x and higher builds |

Note:

Support for each ESXi patch is validated on the Citrix ADC VPX version specified in the preceding table and applies to all higher builds of Citrix ADC VPX version 12.1.
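As an illustration of how the preceding note can be applied, the following minimal Python sketch looks up an ESXi build number from Table 2 and checks whether a given Citrix ADC VPX 12.1 build is at or above the validated build. The function names and the `12.1-65.21` style of version string are assumptions made for this example; they are not part of any Citrix tooling.

```python
import re

# Minimum validated Citrix ADC VPX 12.1 build per ESXi build number,
# copied from Table 2.
MIN_ADC_BUILD = {
    21686933: "12.1-65.x",  # ESXi 7.0 update 3m
    20036589: "12.1-65.x",  # ESXi 7.0 update 3f
    19482537: "12.1-65.x",  # ESXi 7.0 update 3d
    18538813: "12.1-63.x",  # ESXi 7.0 update 2d
    17867351: "12.1-62.x",  # ESXi 7.0 update 2a
    17167734: "12.1-55.x",  # ESXi 6.7 P04
    16713306: "12.1-55.x",  # ESXi 6.7 P03
    4564106: "12.1-55.x",   # ESXi 6.5 GA
    7967591: "12.1-55.x",   # ESXi 6.5 U1g
}


def parse_build(version):
    """Turn '12.1-65.21' or '12.1-65.x' into (12, 1, 65).

    The part after the last dot is ignored (assumed version format).
    """
    match = re.fullmatch(r"(\d+)\.(\d+)-(\d+)(?:\.\w+)?", version)
    if match is None:
        raise ValueError("unrecognized version string: %r" % version)
    return tuple(int(group) for group in match.groups())


def is_validated(esxi_build, adc_version):
    """Return True if the ADC build is covered for the given ESXi build."""
    minimum = MIN_ADC_BUILD.get(esxi_build)
    if minimum is None:
        return False  # ESXi build is not listed in Table 2
    release, branch, build = parse_build(adc_version)
    min_release, min_branch, min_build = parse_build(minimum)
    # The note covers higher builds of the 12.1 release only.
    return (release, branch) == (min_release, min_branch) and build >= min_build
```

For example, `is_validated(18538813, "12.1-65.21")` checks a 12.1-65 build against ESXi 7.0 update 2d, which the table validates from 12.1-63.x onward.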

Table 3. VPX on Microsoft Hyper-V

| Hyper-V version | SysID | VPX models |
|---|---|---|
| 2012, 2012R2 | 450020 | VPX 10, VPX 25, VPX 200, VPX 1000, VPX 3000 |

VPX instance on Nutanix AHV

NetScaler VPX is supported on Nutanix AHV through the Citrix Ready partnership. Citrix Ready is a technology partner program that helps software and hardware vendors develop and integrate their products with NetScaler technology for digital workspace, networking, and analytics.

For more information on a step-by-step method to deploy a NetScaler VPX instance on Nutanix AHV, see Deploying a NetScaler VPX on Nutanix AHV.

Third-party support:

If you experience any issues with a particular third-party (Nutanix AHV) integration on a NetScaler® environment, open a support incident directly with the third-party partner (Nutanix).

If the partner determines that the issue appears to be with NetScaler, the partner can approach NetScaler support for further assistance. A dedicated technical resource from the partner works with NetScaler support until the issue is resolved.

Table 4. VPX instance on generic KVM

| Generic KVM version | SysID | VPX models |
|---|---|---|
| RHEL 7.4, RHEL 7.5 (from Citrix ADC version 12.1 50.x onwards), Ubuntu 16.04 | 450070 | VPX 10, VPX 25, VPX 200, VPX 1000, VPX 3000, VPX 5000, VPX 8000, VPX 10G, VPX 15G, VPX 25G, VPX 40G, VPX 100G |

Note:

The VPX instance is qualified for the hypervisor release versions listed in Tables 1–4, and not for patch releases within a version. However, the VPX instance is expected to work seamlessly with patch releases of a supported version. If it does not, log a support case for troubleshooting and debugging.

Table 5. VPX instance on AWS

| AWS version | SysID | VPX models |
|---|---|---|
| N/A | 450040 | VPX 10, VPX 200, VPX 1000, VPX 3000, VPX 5000, VPX 15G, VPX BYOL |

Table 6. VPX instance on Azure

| Azure version | SysID | VPX models |
|---|---|---|
| N/A | 450020 | VPX 10, VPX 200, VPX 1000, VPX 3000, VPX BYOL |

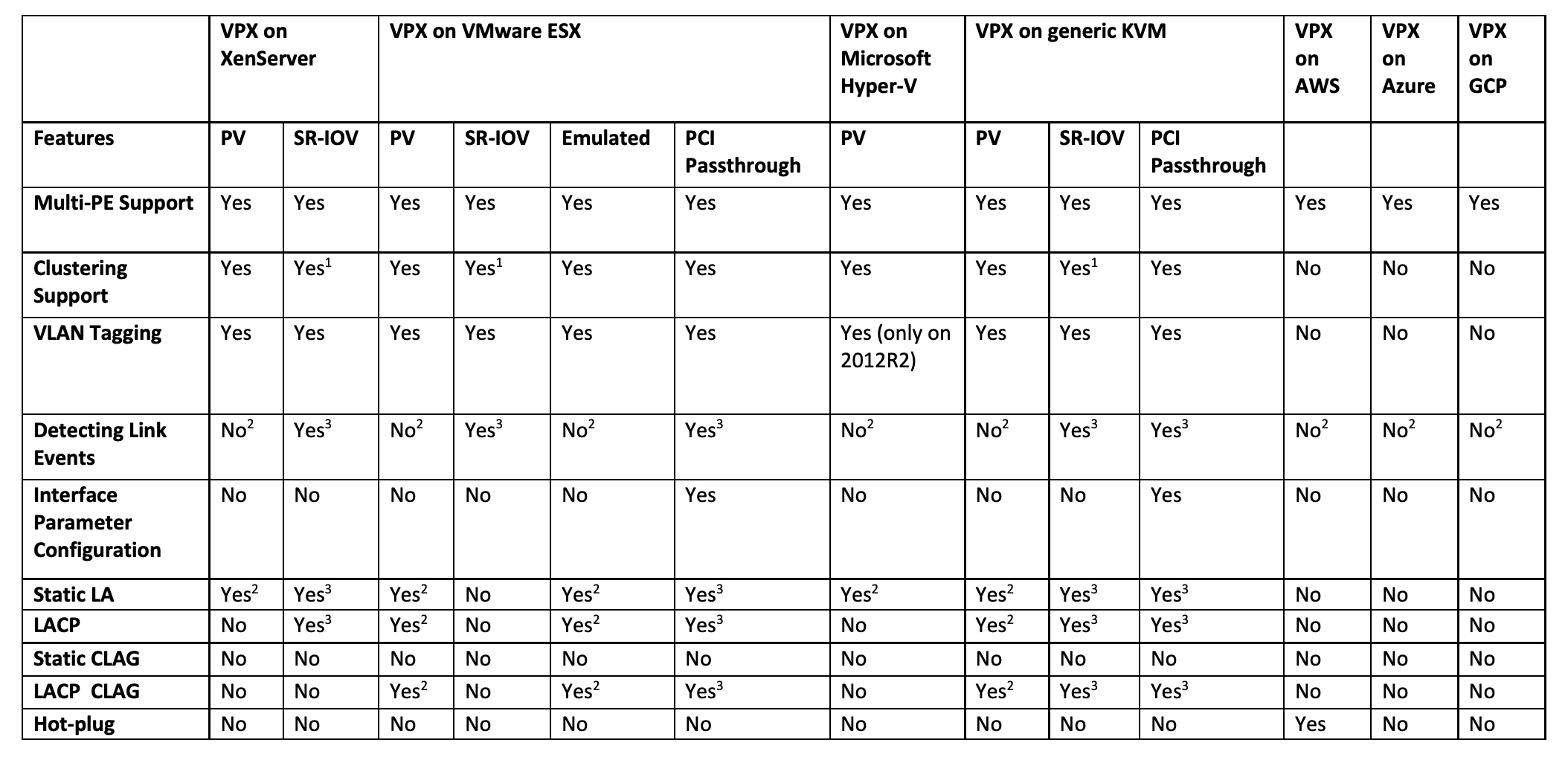

Table 7. VPX feature matrix

- Clustering support is available on SRIOV for client- and server-facing interfaces and not for the backplane.

- Interface DOWN events are not recorded in Citrix ADC VPX instances.

- For static LA, traffic might still be sent on the interface whose physical status is DOWN.

- For LACP, the peer device learns of an interface DOWN event through the LACP timeout mechanism.

  - Short timeout: 3 seconds

  - Long timeout: 90 seconds

- For LACP, interfaces must not be shared across VMs.

- For dynamic routing, convergence time depends on the routing protocol because link events are not detected.

- Monitored static route functionality fails if monitors are not bound to static routes, because the route state depends on the VLAN status, and the VLAN status depends on the link status.

- Partial failure detection does not happen in high availability if there is a link failure. A high availability split-brain condition might occur if there is a link failure.

- When any link event (disable/enable, reset) is generated from a VPX instance, the physical status of the link does not change. For static LA, any traffic initiated by the peer gets dropped on the instance.

- For the VLAN tagging feature to work, do the following:

  On the VMware ESX, set the port group’s VLAN ID to 1–4095 on the vSwitch of the VMware ESX server.
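The two LACP timeout values listed in the notes above match the standard LACP timers, where a partner is considered expired after three consecutive LACPDUs are missed. The following minimal Python sketch shows the arithmetic; the constant and function names are chosen for this example only, and the timer values are the standard IEEE 802.1AX defaults rather than anything specific to VPX.

```python
# Standard LACP timer values (IEEE 802.1AX defaults).
FAST_PERIODIC_SECONDS = 1    # LACPDU interval used with the short timeout
SLOW_PERIODIC_SECONDS = 30   # LACPDU interval used with the long timeout
MISSED_PDUS_BEFORE_TIMEOUT = 3


def lacp_timeout_seconds(short_timeout):
    """Return how long a peer waits before treating the link as DOWN."""
    if short_timeout:
        interval = FAST_PERIODIC_SECONDS
    else:
        interval = SLOW_PERIODIC_SECONDS
    return interval * MISSED_PDUS_BEFORE_TIMEOUT
```

Because interface DOWN events are not detected directly, this timeout is roughly the delay the peer needs to notice a failed link in an LACP channel.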

Table 8. Supported browsers

| Operating system | Browser and versions |
|---|---|
| Windows 7 | Internet Explorer 8, 9, 10, and 11; Mozilla Firefox 3.6.25 and above; Google Chrome 15 and above |
| Windows 64 bit | Internet Explorer 8, 9; Google Chrome 15 and above |
| MAC | Mozilla Firefox 12 and above; Safari 5.1.3; Google Chrome 15 and above |

Usage guidelines

Follow these usage guidelines:

- See the VMware ESXi CPU Considerations section in the document Performance Best Practices for VMware vSphere 6.5. Here’s an extract:

  It is not recommended that virtual machines with high CPU/Memory demand sit on a Host/Cluster that is overcommitted. In most environments ESXi allows significant levels of CPU overcommitment (that is, running more vCPUs on a host than the total number of physical processor cores in that host) without impacting virtual machine performance. If an ESXi host becomes CPU saturated (that is, the virtual machines and other loads on the host demand all the CPU resources the host has), latency-sensitive workloads might not perform well. In this case you might want to reduce the CPU load, for example by powering off some virtual machines or migrating them to a different host (or allowing DRS to migrate them automatically).

- Citrix recommends using the latest hardware compatibility version so that the virtual machine can take advantage of the latest feature sets of the ESXi hypervisor. For more information about the hardware and ESXi version compatibility, see VMware documentation.

- The Citrix ADC VPX is a latency-sensitive, high-performance virtual appliance. To deliver its expected performance, the appliance requires vCPU reservation, memory reservation, and vCPU pinning on the host. Also, hyper-threading must be disabled on the host. If the host does not meet these requirements, issues such as high-availability failover, CPU spikes within the VPX instance, sluggishness in accessing the VPX CLI, pitboss daemon crashes, packet drops, and low throughput can occur.

- A hypervisor is considered over-provisioned if one of the following two conditions is met:

  - The total number of virtual cores (vCPUs) provisioned on the host is greater than the total number of physical cores (pCPUs).

  - The provisioned VMs together consume more vCPUs than the total number of pCPUs.

  If an instance is over-provisioned, the hypervisor might not be able to guarantee the resources reserved (such as CPU and memory) for the instance because of hypervisor scheduling overheads, bugs, or limitations. This can starve Citrix ADC of CPU resources and might lead to the issues mentioned in the preceding guideline. Citrix recommends that administrators reduce the tenancy on the host so that the total number of vCPUs provisioned on the host is less than or equal to the total number of pCPUs.

  Example

  For the ESX hypervisor, if the `%RDY%` parameter of a VPX vCPU is greater than 0 in the `esxtop` command output, the ESX host has scheduling overheads, which can cause latency-related issues for the VPX instance.

  In such a situation, reduce the tenancy on the host so that `%RDY%` always returns to 0. Alternatively, contact the hypervisor vendor to triage why the resource reservation is not being honored.

- Hot adding is supported only for PV and SRIOV interfaces on Citrix ADC.

- Hot removing through either the AWS Web console or the AWS CLI is not supported for PV and SRIOV interfaces on Citrix ADC. The behavior of the instances can be unpredictable if hot removal is attempted.

- You can use two commands (`set ns vpxparam` and `show ns vpxparam`) to control the packet engine (non-management) CPU usage behavior of VPX instances in hypervisor and cloud environments:

  - `set ns vpxparam -cpuyield (YES | NO | DEFAULT)`

    Allow each VM to use CPU resources that have been allocated to another VM but are not being used.

    `set ns vpxparam` parameters:

    `-cpuyield`: Release or do not release allocated but unused CPU resources.

    - YES: Allow allocated but unused CPU resources to be used by another VM.

    - NO: Reserve all CPU resources for the VM to which they have been allocated. With this option, hypervisor and cloud environments show a higher percentage for VPX CPU usage.

    - DEFAULT: NO.

    Note:

    On all the Citrix ADC VPX platforms, the vCPU usage on the host system is 100 percent. Type the `set ns vpxparam -cpuyield YES` command to override this usage.

    If you want to set the cluster nodes to “yield”, you must perform the following additional configurations on the cluster configuration coordinator (CCO):

    - If a cluster is formed, all the nodes come up with “yield=DEFAULT”.

    - If a cluster is formed using nodes that are already set to “yield=YES”, the nodes are added to the cluster using “DEFAULT” yield.

    Note:

    If you want to set the cluster nodes to “yield=YES”, you can do so only after the cluster is formed, not before.

  - `show ns vpxparam`

    Display the current vpxparam settings.
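The over-provisioning conditions and the `%RDY%` check from the usage guidelines above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the `Host` class, the function names, and the way the `%RDY%` values are passed in (a mapping from a vCPU label to a percentage that you have already read from `esxtop`) are hypothetical and are not part of any Citrix or VMware API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Host:
    """Hypothetical description of a hypervisor host for this example."""
    physical_cores: int          # total pCPUs on the host
    vm_vcpus: Dict[str, int]     # VM name -> vCPUs provisioned to that VM


def total_vcpus(host: Host) -> int:
    """Sum the vCPUs provisioned across all VMs on the host."""
    return sum(host.vm_vcpus.values())


def is_over_provisioned(host: Host) -> bool:
    """True if more vCPUs are provisioned than there are pCPUs."""
    return total_vcpus(host) > host.physical_cores


def vcpus_to_remove(host: Host) -> int:
    """How many vCPUs must be moved off the host to stop over-provisioning."""
    return max(0, total_vcpus(host) - host.physical_cores)


def vcpus_with_ready_time(rdy_percent_by_vcpu: Dict[str, float]) -> List[str]:
    """Return the vCPU labels whose %RDY% value is greater than 0."""
    return sorted(
        label for label, rdy in rdy_percent_by_vcpu.items() if rdy > 0
    )
```

For example, a host with 16 pCPUs that runs a 4-vCPU VPX instance alongside VMs with 8 and 6 vCPUs has 18 vCPUs provisioned, so `is_over_provisioned` returns `True` and `vcpus_to_remove` returns 2.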