Configure a NetScaler VPX instance to use Azure accelerated networking

Accelerated networking provides a single root I/O virtualization (SR-IOV) virtual function (VF) NIC to a virtual machine, which improves networking performance. You can use this feature for heavy workloads that need to send or receive data at higher throughput with reliable streaming and lower CPU utilization. When a NIC is enabled with accelerated networking, Azure bundles the NIC’s existing paravirtualized (PV) interface with an SR-IOV VF interface. Support for the SR-IOV VF interface improves the throughput of the NetScaler VPX instance.

Accelerated networking provides the following benefits:

- Lower latency

- Higher packets per second (pps) performance

- Enhanced throughput

- Reduced jitter

- Decreased CPU utilization

Note:

Azure accelerated networking is supported on NetScaler VPX instances from release 13.0 build 76.29 onwards.

Prerequisites

- Ensure that your VM size matches the requirements for Azure accelerated networking.

- Stop VMs (individual or in an availability set) before enabling accelerated networking on any NIC.
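If you want to check the VM size prerequisite from the command line, the following sketch lists the VM sizes in a region whose SKU metadata reports the `AcceleratedNetworkingEnabled` capability. It assumes the Azure CLI (`az`) is installed and signed in to the correct subscription. The helper name `list_accel_sizes` and the example region and size filter are hypothetical, and you still need to compare the result with the supported instance types for NetScaler VPX.

```shell
#!/bin/sh
# Sketch (assumption): list VM sizes in a region whose SKU metadata reports
# the AcceleratedNetworkingEnabled capability as True.
# list_accel_sizes is a hypothetical helper name, not part of the Azure CLI.
set -eu

list_accel_sizes() {
  location="$1"          # Azure region, for example eastus
  size_filter="${2:-}"   # optional partial size name, for example Standard_D
  # Rebuild the positional parameters so --size is passed only when requested.
  if [ -n "$size_filter" ]; then
    set -- --size "$size_filter"
  else
    set --
  fi
  # JMESPath filter: keep SKUs whose capabilities list contains the flag,
  # then print only their names, one per line.
  az vm list-skus --location "$location" --resource-type virtualMachines "$@" \
    --query "[?capabilities[?name=='AcceleratedNetworkingEnabled' && value=='True']].name" \
    --output tsv
}
```

For example, `list_accel_sizes eastus Standard_D` prints the matching size names for the eastus region. The size filter is optional; omit it to list every qualifying size in the region.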

Limitations

Accelerated networking can be enabled only on some instance types. For more information, see Supported instance types.

NICs supported for accelerated networking

Azure provides Mellanox ConnectX3, ConnectX4, and ConnectX5 NICs in the SR-IOV mode for accelerated networking.

When accelerated networking is enabled on a NetScaler VPX interface, Azure bundles a ConnectX3, ConnectX4, or ConnectX5 interface with the existing PV interface of the NetScaler VPX appliance.

Note:

NetScaler VPX supports ConnectX5 NICs from release 13.1 build 37.x onwards.

For more information about enabling accelerated networking before attaching an interface to a VM, see Create a network interface with accelerated networking.

For more information about enabling accelerated networking on an existing interface on a VM, see Enable existing interfaces on a VM.

How to enable accelerated networking on a NetScaler VPX instance using the Azure console

You can enable accelerated networking on a specific interface using the Azure console or Azure PowerShell.

Perform the following steps to enable accelerated networking when deploying with Azure availability sets or availability zones.

-

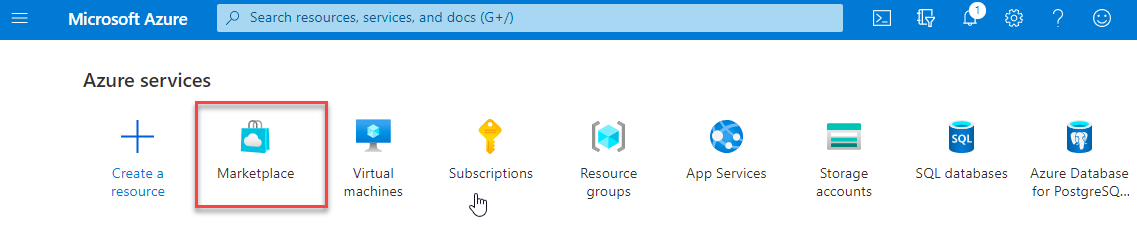

Log in to the Azure portal and navigate to Azure Marketplace.

-

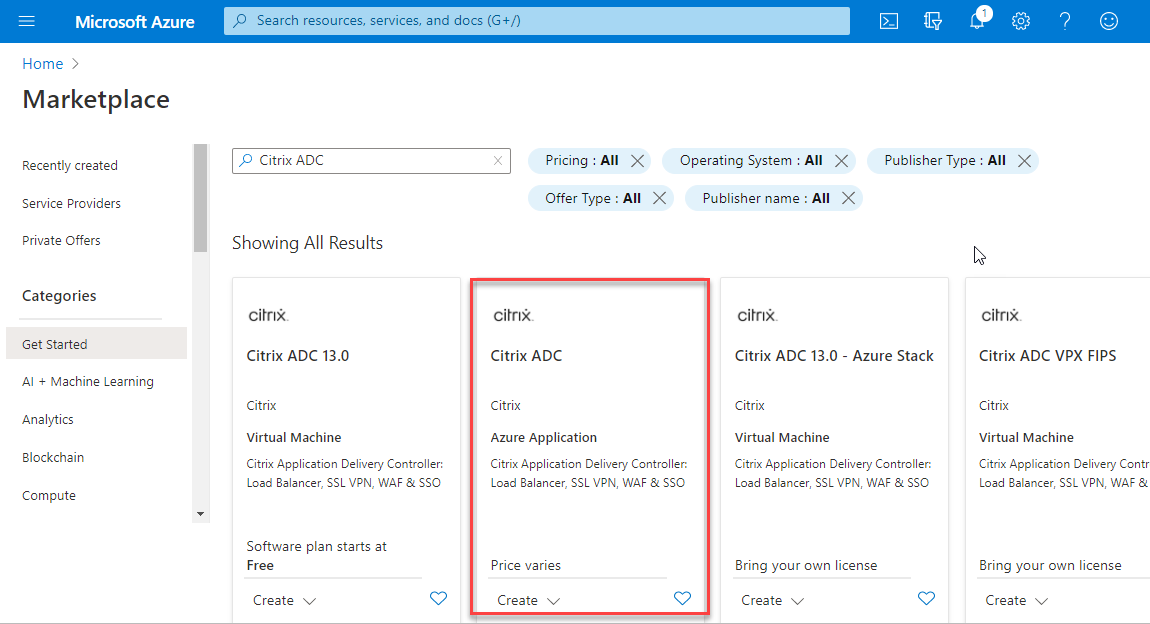

From the Azure Marketplace, search for NetScaler.

-

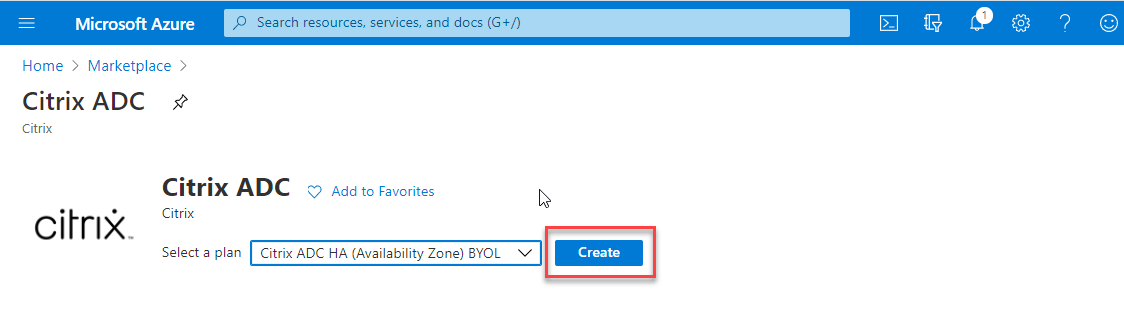

Select a non-FIPS NetScaler plan and license, and click Create.

The Create NetScaler page appears.

-

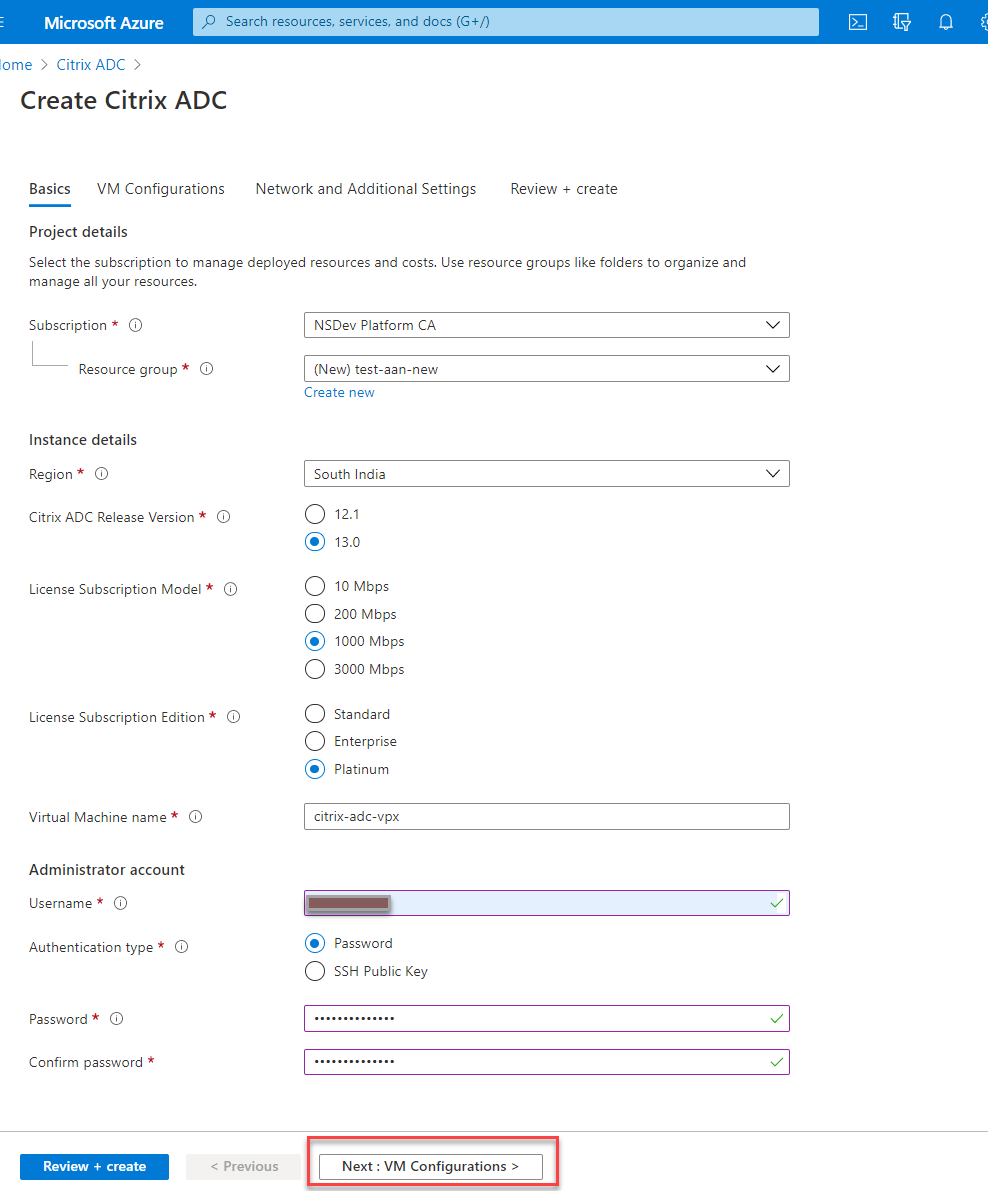

In the Basics tab, create a Resource Group. Under the Parameters tab, enter details for the Region, Admin user name, Admin Password, license type (VM SKU), and other fields.

-

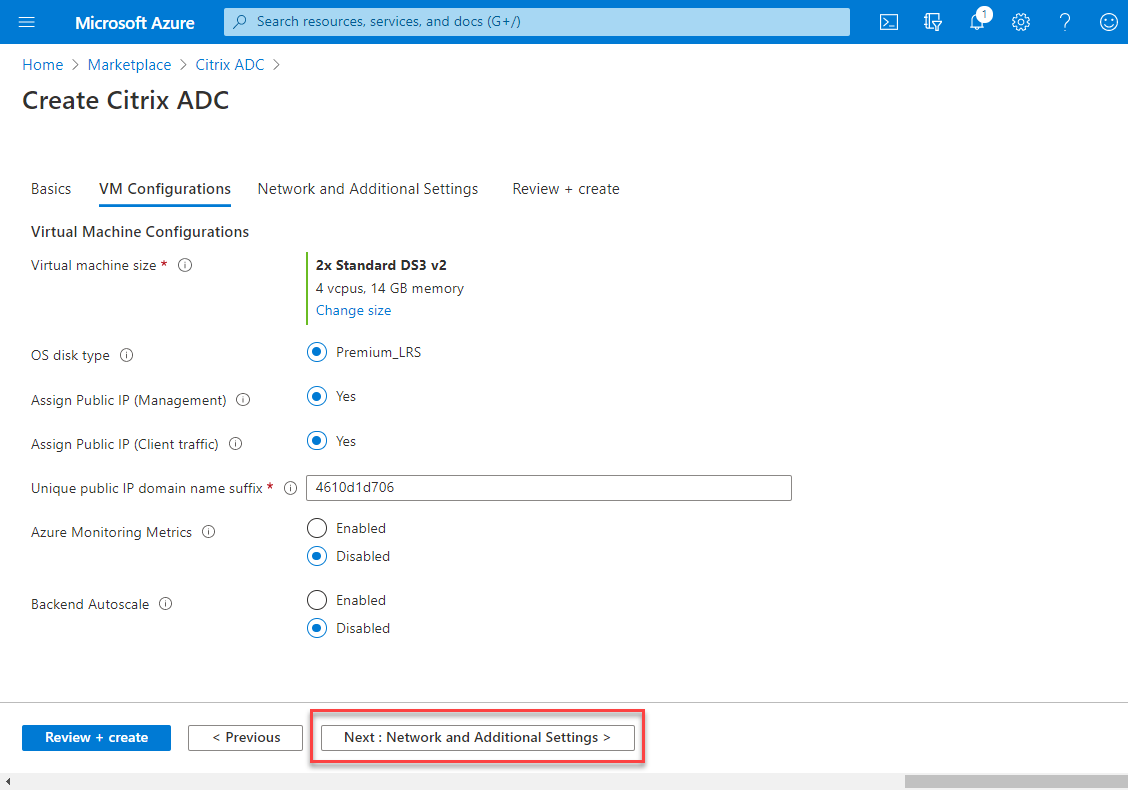

Click Next: VM Configurations >.

On the VM Configurations page, perform the following:

- Configure public IP domain name suffix.

- Enable or disable Azure Monitoring Metrics.

- Enable or disable Backend Autoscale™.

-

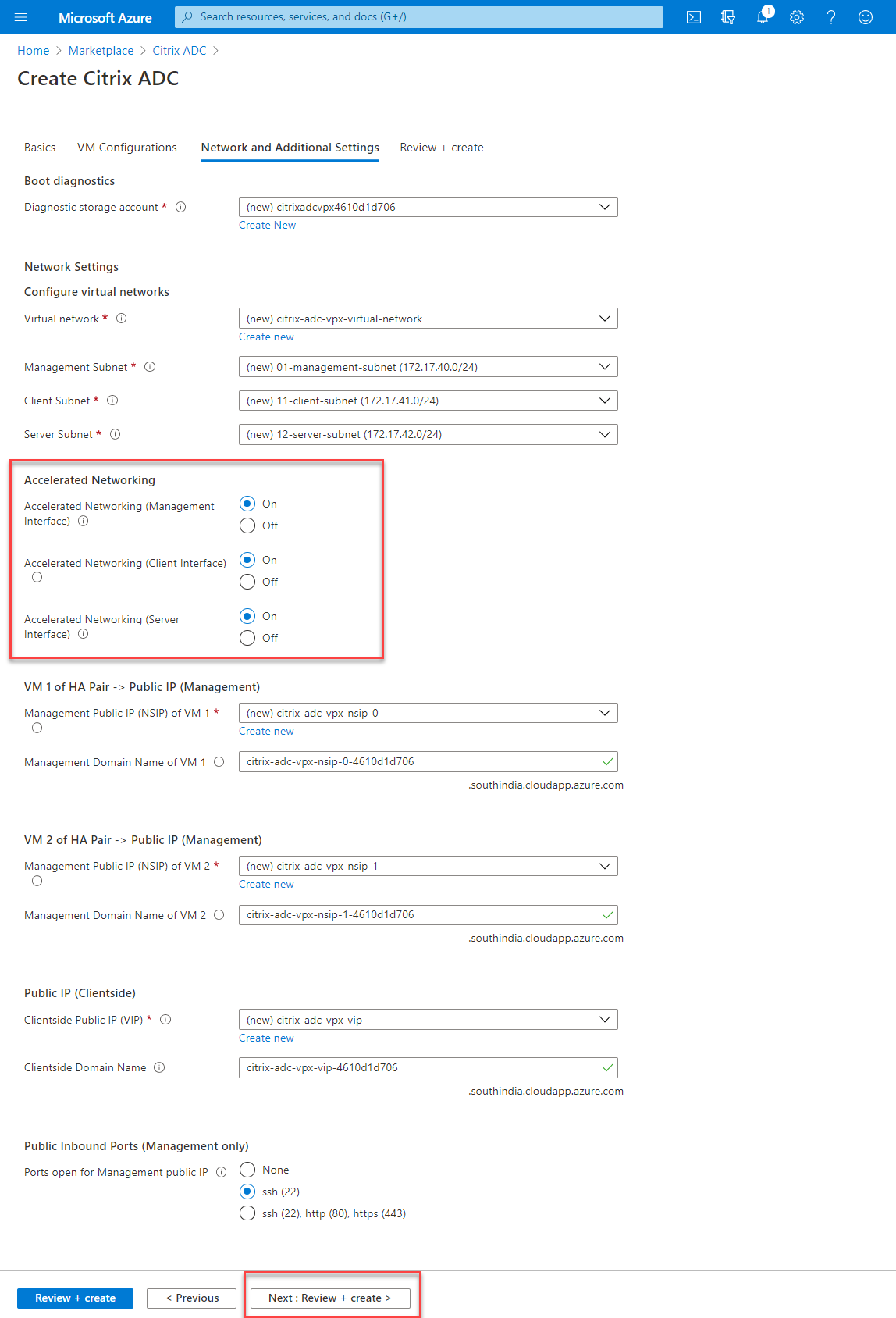

Click Next: Network and Additional settings >.

On the Network and Additional Settings page, create a Boot diagnostics account and configure the network settings.

Under the Accelerated Networking section, you can enable or disable accelerated networking separately for the Management interface, Client interface, and Server interface.

-

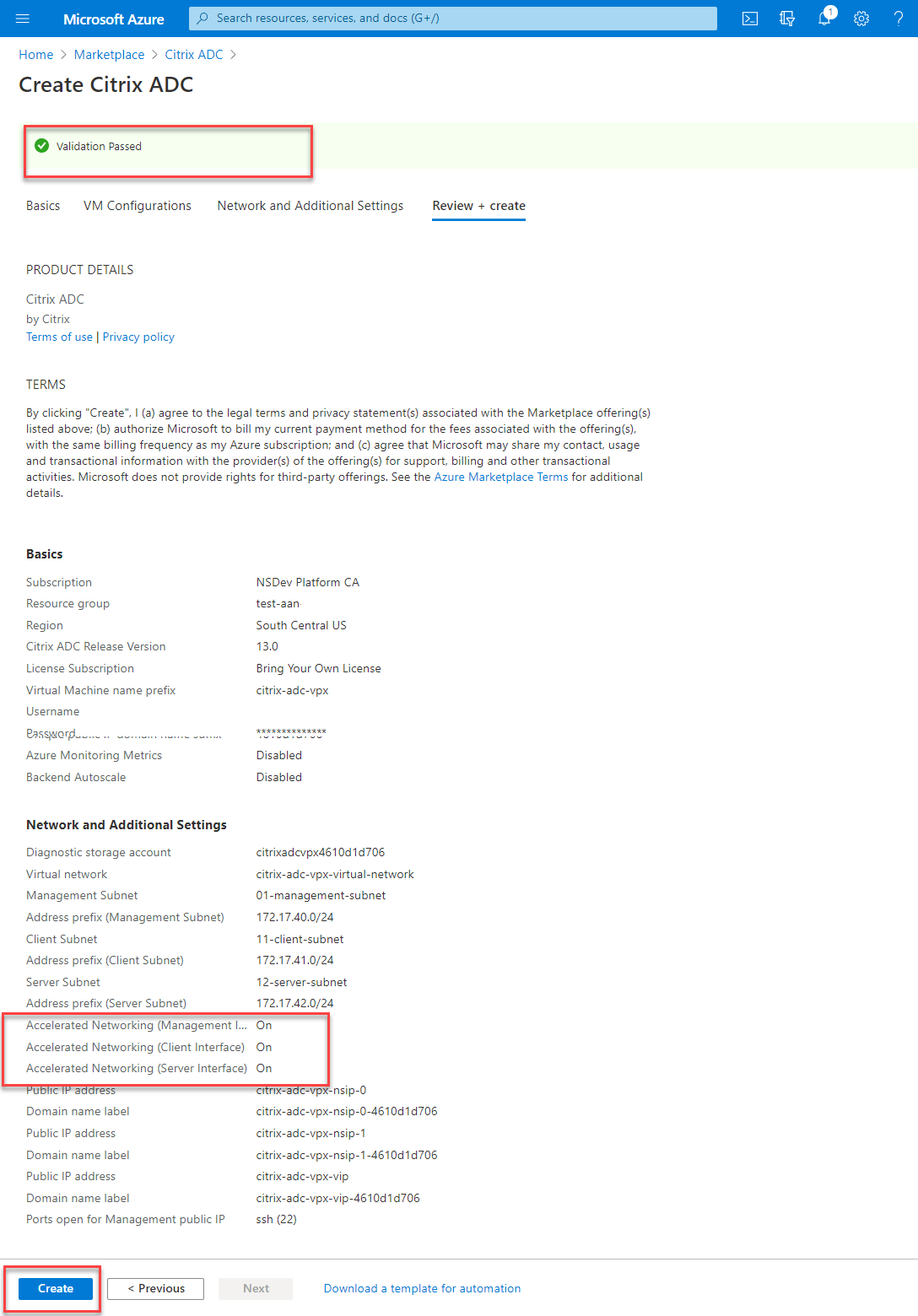

Click Next: Review + create >.

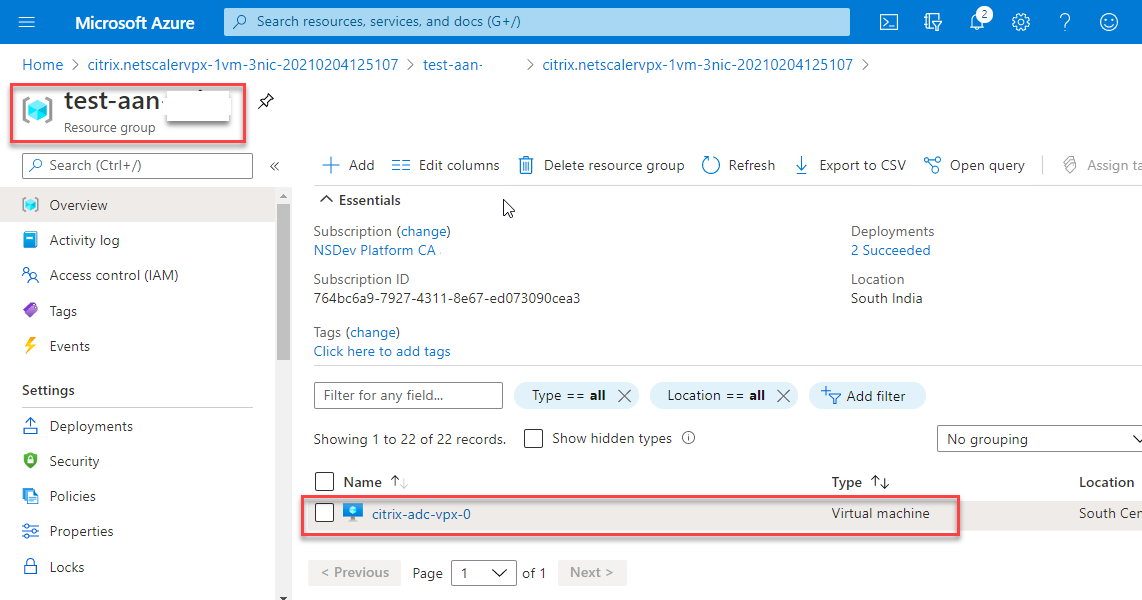

After the validation is successful, review the basic settings, VM configurations, network and additional settings, and click Create. It might take some time for the Azure Resource Group to be created with the required configurations.

-

After the deployment is complete, select the Resource Group to see the configuration details.

-

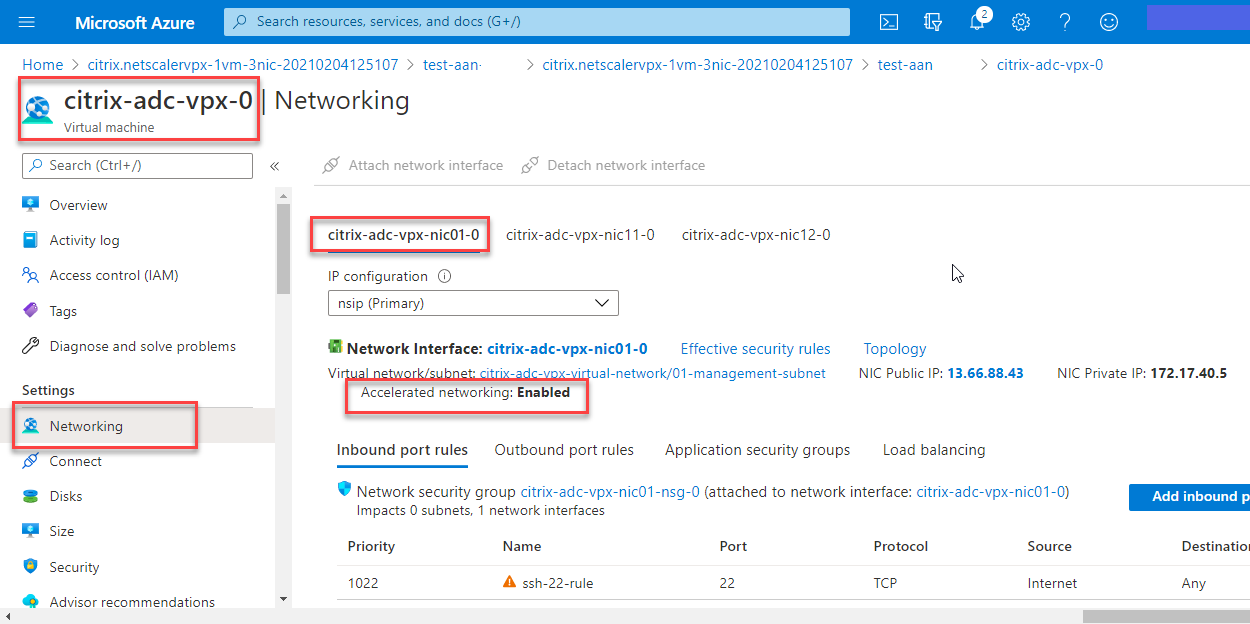

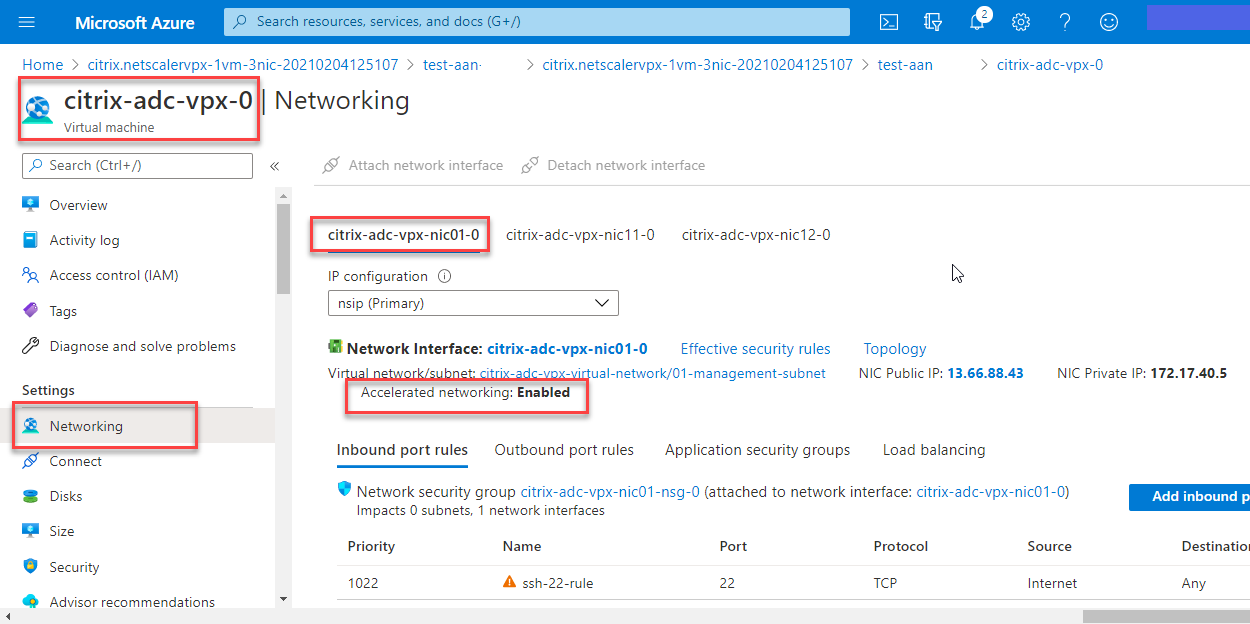

To verify the Accelerated Networking configurations, select Virtual machine > Networking. The Accelerated Networking status is displayed as Enabled or Disabled for each NIC.
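As a command-line alternative to the portal check, the following sketch lists every NIC in the deployment's resource group together with its accelerated networking setting. It assumes the Azure CLI is signed in to the correct subscription and that the NICs were created in the same resource group as the VM. The helper name `show_accel_status` is hypothetical.

```shell
#!/bin/sh
# Sketch (assumption): print each NIC in a resource group together with its
# enableAcceleratedNetworking property, as reported by the Azure CLI.
# show_accel_status is a hypothetical helper name.
set -eu

show_accel_status() {
  # $1: resource group created by the NetScaler deployment
  az network nic list --resource-group "$1" \
    --query "[].{NIC:name, AcceleratedNetworking:enableAcceleratedNetworking}" \
    --output table
}
```

For example, `show_accel_status rsgp1-aan` prints a table with one row per NIC and a True or False value in the AcceleratedNetworking column.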

Enable accelerated networking using Azure PowerShell

If you need to enable accelerated networking after creating the VM, you can do so using Azure PowerShell.

Note:

Make sure that you stop the VM before you enable accelerated networking using Azure PowerShell.

Perform the following steps to enable accelerated networking by using Azure PowerShell.

-

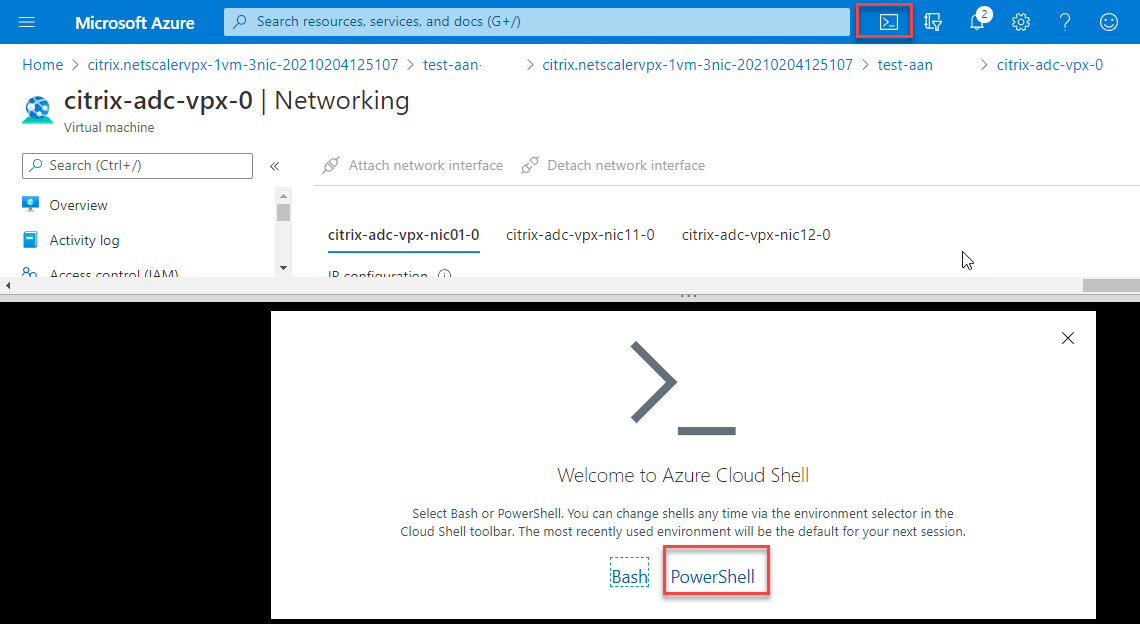

Navigate to the Azure portal and click the PowerShell icon in the top-right corner.

Note:

If you are in the Bash mode, change to the PowerShell mode.

-

At the command prompt, run the following Azure CLI command:

az network nic update --name <nic-name> --accelerated-networking [true | false] --resource-group <resourcegroup-name>

The accelerated networking parameter accepts either of the following values:

- True: Enables accelerated networking on the specified NIC.

- False: Disables accelerated networking on the specified NIC.

To enable accelerated networking on a specific NIC:

az network nic update --name citrix-adc-vpx-nic01-0 --accelerated-networking true --resource-group rsgp1-aan

To disable accelerated networking on a specific NIC:

az network nic update --name citrix-adc-vpx-nic01-0 --accelerated-networking false --resource-group rsgp1-aan

-

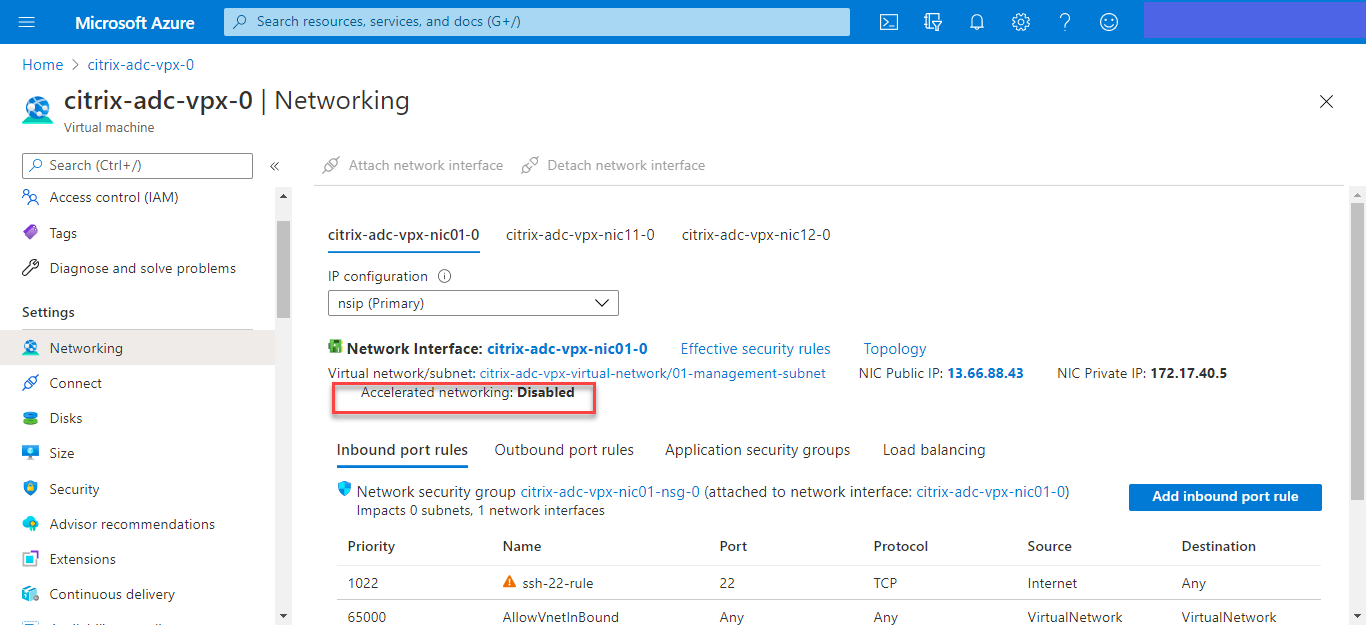

To verify the Accelerated Networking status after the deployment is complete, navigate to VM > Networking.

In the following example, you can see that Accelerated Networking is Enabled.

In the following example, you can see that Accelerated Networking is Disabled.
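Because the VM must be stopped before the NIC setting can change, the stop, update, start, and verify steps can be combined into one sketch. It targets a POSIX or Bash shell (for example, Cloud Shell in Bash mode or a local shell with the Azure CLI installed) rather than the PowerShell mode used in the steps above; the `az` commands themselves are the same in both. The helper name `set_accel_net` and the VM name `vpx1` are hypothetical placeholders, while the NIC and resource group names come from the earlier example.

```shell
#!/bin/sh
# Sketch (assumption): change accelerated networking on one NIC of a NetScaler
# VPX VM. set_accel_net is a hypothetical helper; replace the arguments with
# your own resource group, VM, and NIC names.
set -eu

set_accel_net() {
  rg="$1"; vm="$2"; nic="$3"; state="$4"   # state must be true or false
  case "$state" in
    true|false) ;;
    *) echo "state must be true or false" >&2; return 2 ;;
  esac
  # The VM must be stopped (deallocated) before the NIC setting is changed.
  az vm deallocate --resource-group "$rg" --name "$vm"
  az network nic update --resource-group "$rg" --name "$nic" \
    --accelerated-networking "$state"
  az vm start --resource-group "$rg" --name "$vm"
  # Print the setting Azure now reports for the NIC (true or false).
  az network nic show --resource-group "$rg" --name "$nic" \
    --query enableAcceleratedNetworking --output tsv
}
```

For example, `set_accel_net rsgp1-aan vpx1 citrix-adc-vpx-nic01-0 true`. With `set -e`, the script stops at the first failing `az` command instead of restarting the VM with an unknown NIC state. Deallocating a VM interrupts traffic and releases any dynamic public IP addresses, so plan a maintenance window.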

To verify accelerated networking on an interface by using FreeBSD Shell of NetScaler

You can log in to the FreeBSD shell of NetScaler and run the following commands to verify the accelerated networking status.

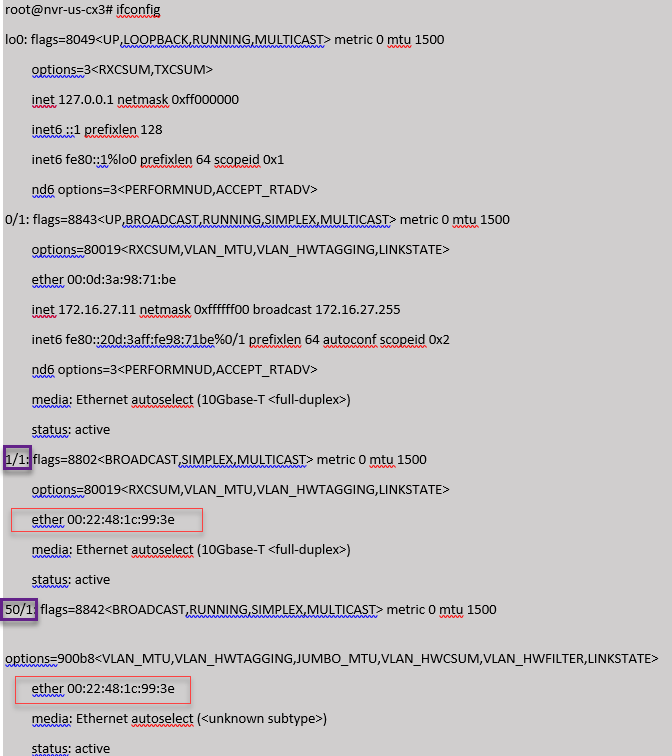

Example for ConnectX3 NIC:

The following example shows the “ifconfig” command output of the Mellanox ConnectX3 NIC. The “50/n” interfaces are the VF interfaces of the Mellanox ConnectX3 NICs, and 0/1 and 1/1 are the PV interfaces of the NetScaler VPX instance. The PV interface (1/1) and the CX3 VF interface (50/1) have the same MAC address (00:22:48:1c:99:3e), which indicates that the two interfaces are bundled together.

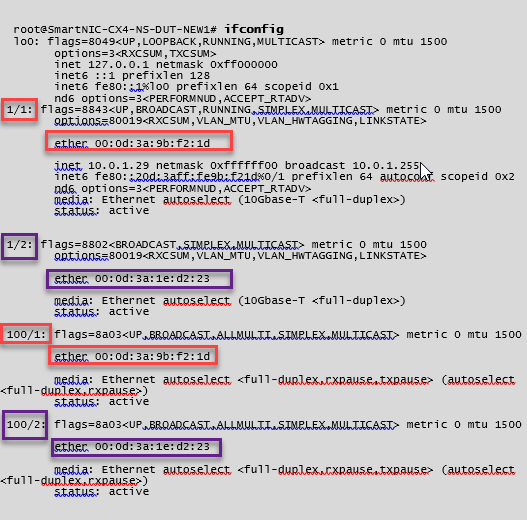

Example for ConnectX4 NIC:

The following example shows the “ifconfig” command output of the Mellanox ConnectX4 NIC. The “100/n” interfaces are the VF interfaces of the Mellanox ConnectX4 NICs, and 0/1, 1/1, and 1/2 are the PV interfaces of the NetScaler VPX instance. The PV interface (1/1) and the CX4 VF interface (100/1) have the same MAC address (00:0d:3a:9b:f2:1d), which indicates that the two interfaces are bundled together. Similarly, the PV interface (1/2) and the CX4 VF interface (100/2) share the MAC address 00:0d:3a:1e:d2:23.
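To spot bundled pairs without reading the full ifconfig output, the following sketch groups interfaces by MAC address and prints every MAC address shared by more than one interface. It assumes the usual FreeBSD ifconfig layout, in which each interface block starts in column 1 with a line such as `1/1: flags=...` and contains an indented `ether <MAC>` line; the layout on your build might differ. The helper name `bundled_pairs` is hypothetical.

```shell
#!/bin/sh
# Sketch (assumption): read ifconfig output on stdin and print one line,
# "MAC iface iface ...", for every MAC address used by two or more interfaces,
# such as a PV interface and the VF interface bundled with it.
# bundled_pairs is a hypothetical helper name.
bundled_pairs() {
  awk '
    {
      # Interface header lines start in column 1, e.g. "1/1: flags=8843<...>".
      first = substr($0, 1, 1)
      if (first != " " && first != "\t" && $1 ~ /:$/)
        iface = substr($1, 1, length($1) - 1)
    }
    # Indented "ether" lines carry the MAC address of the current interface.
    $1 == "ether" {
      count[$2]++
      macs[$2] = (count[$2] > 1) ? macs[$2] " " iface : iface
    }
    END { for (m in count) if (count[m] > 1) print m, macs[m] }
  ' | LC_ALL=C sort
}
```

After defining the function in a Bourne-style shell (start `sh` first if your current shell does not accept this function syntax), run `ifconfig | bundled_pairs`. Each output line lists a shared MAC address followed by the PV and VF interfaces that use it; a PV interface that does not appear has no bundled VF interface.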

To verify accelerated networking on an interface by using ADC CLI

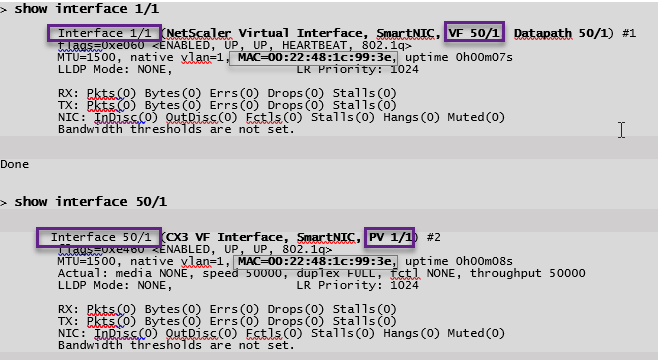

Example for ConnectX3 NIC:

The following show interface command output indicates that the PV interface 1/1 is bundled with virtual function 50/1, which is an SR-IOV VF NIC. The MAC addresses of the 1/1 and 50/1 NICs are the same. After accelerated networking is enabled, traffic for the 1/1 interface is sent through the data path of the 50/1 interface, which is a ConnectX3 interface. The “show interface” output of the PV interface (1/1) points to the VF (50/1), and the “show interface” output of the VF interface (50/1) points to the PV interface (1/1).

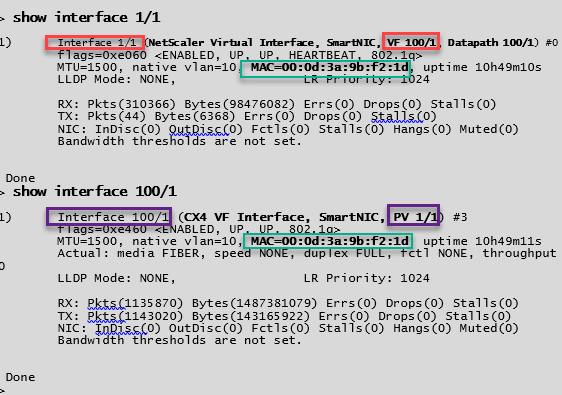

Example for ConnectX4 NIC:

The following show interface command output indicates that the PV interface 1/1 is bundled with virtual function 100/1, which is an SR-IOV VF NIC. The MAC addresses of the 1/1 and 100/1 NICs are the same. After accelerated networking is enabled, traffic for the 1/1 interface is sent through the data path of the 100/1 interface, which is a ConnectX4 interface. The “show interface” output of the PV interface (1/1) points to the VF (100/1), and the “show interface” output of the VF interface (100/1) points to the PV interface (1/1).

Points to note in NetScaler

- The PV interface is the primary interface for all necessary operations. Perform configurations on PV interfaces only.

- All the ‘set’ operations on a VF interface are blocked except the following:

- enable interface

- disable interface

- reset interface

- clear stats

Note:

Citrix® recommends that you do not perform any operations on the VF interface.

- You can verify the binding of the PV interface with the VF interface using the show interface command.

- From NetScaler release 13.1-33.x, a NetScaler VPX instance can seamlessly handle dynamic NIC removals and reattachment of the removed NICs in Azure accelerated networking. Azure can remove the SR-IOV VF NIC of accelerated networking during host maintenance activities. Whenever a NIC is removed from the Azure VM, the NetScaler VPX instance shows the interface status as “Link Down”, and traffic goes through the virtual (PV) interface only. After the removed NIC is reattached, the VPX instance uses the reattached SR-IOV VF NIC. This process is seamless and does not require any configuration.

Configure a VLAN to a PV interface

When a PV interface is bound to a VLAN, the associated accelerated VF interface is also bound to the same VLAN. In this example, the PV interface (1/1) is bound to VLAN 20, and the VF interface bundled with it (100/2 in the following show vlan output) is also bound to VLAN 20.

Example:

-

Create a VLAN.

add vlan 20

-

Bind a VLAN to the PV interface.

bind vlan 20 -ifnum 1/1

show vlan

1) VLAN ID: 1 Link-local IPv6 addr: fe80::20d:3aff:fe9b:f21d/64
   Interfaces : LO/1
2) VLAN ID: 10 VLAN Alias Name:
   Interfaces : 0/1 100/1
   IPs : 10.0.1.29 Mask: 255.255.255.0
3) VLAN ID: 20 VLAN Alias Name:
   Interfaces : 1/1 100/2

Note:

VLAN binding operation is not permitted on an accelerated VF interface.

bind vlan 1 -ifnum 100/1

ERROR: Operation not permitted

