QoS fairness (RED)

The QoS fairness feature improves the fairness of multiple virtual path flows by using QoS classes and Random Early Detection (RED). A virtual path can be assigned to one of 16 different classes. A class can be one of three basic types:

- Realtime classes serve traffic flows that demand prompt service up to a certain bandwidth limit. Low latency is preferred over aggregate throughput.

- Interactive classes have lower priority than realtime but have absolute priority over bulk traffic.

- Bulk classes get what is left over from realtime and interactive classes, because latency is less important for bulk traffic.

Users specify different bandwidth requirements for different classes, which enables the virtual path scheduler to arbitrate competing bandwidth requests from multiple classes of the same type. The scheduler uses the Hierarchical Fair Service Curve (HFSC) algorithm to achieve fairness among the classes.

HFSC services classes in first-in, first-out (FIFO) order. Before scheduling a packet, Citrix SD-WAN™ examines the amount of traffic pending for the packet's class. When excessive traffic is pending, the packet is dropped instead of being put into the queue (tail dropping).
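The tail-drop behavior described above can be sketched as follows. This is a minimal illustration, not the Citrix SD-WAN implementation: the `TailDropQueue` class, its byte-based `limit_bytes` threshold, and the packet sizes are all assumptions made for the example.

```python
from collections import deque


class TailDropQueue:
    """FIFO queue that discards arriving packets once a backlog limit is hit."""

    def __init__(self, limit_bytes):
        self.limit_bytes = limit_bytes  # hypothetical per-class backlog limit
        self.pending_bytes = 0          # traffic currently waiting in the class
        self.packets = deque()
        self.dropped = 0

    def enqueue(self, size_bytes):
        # Check the pending traffic first; drop the new packet if it does not fit.
        if self.pending_bytes + size_bytes > self.limit_bytes:
            self.dropped += 1
            return False
        self.packets.append(size_bytes)
        self.pending_bytes += size_bytes
        return True

    def dequeue(self):
        # Serve packets in first-in, first-out order.
        if not self.packets:
            return None
        size_bytes = self.packets.popleft()
        self.pending_bytes -= size_bytes
        return size_bytes


queue = TailDropQueue(limit_bytes=3000)
results = [queue.enqueue(1500) for _ in range(3)]
print(results, queue.dropped)
```

With a 3000-byte limit, the first two 1500-byte packets are queued and the third is dropped. The sender only learns about congestion at that point, after the queue is already full.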

Why does TCP cause queuing?

TCP cannot control how quickly the network can transmit data. To control bandwidth, TCP implements the concept of a bandwidth window, which is the amount of unacknowledged traffic that it allows in the network. It initially starts with a small window and enlarges that window each time an acknowledgment is received, which roughly doubles the window every round trip. This is called the slow start or exponential growth phase.
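As a rough illustration of the exponential growth phase, the sketch below tracks the window over successive round trips. It assumes an idealized sender: no packet loss, one acknowledgment per packet, and a window counted in packets. The function name and parameters are made up for the example.

```python
def slow_start_windows(initial_window, round_trips):
    """Return the window size (in packets) at the start of each round trip."""
    windows = []
    window = initial_window
    for _ in range(round_trips):
        windows.append(window)
        # Every packet in the window is acknowledged, and each acknowledgment
        # grows the window by one packet, so the window doubles per round trip.
        acknowledgments = window
        window += acknowledgments
    return windows


print(slow_start_windows(initial_window=2, round_trips=6))
# prints [2, 4, 8, 16, 32, 64]
```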

TCP identifies network congestion by detecting dropped packets. If the TCP stack sends a burst of packets that introduce a 250 ms delay, TCP does not detect congestion if none of the packets are discarded, so it continues to increase the size of the window. It might continue to do so until the wait time reaches 600–800 ms.
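To get a feel for how much queued data these wait times represent, the following sketch converts a backlog into a queuing delay. The 10 Mbps path rate and the backlog size are hypothetical values chosen for the example, not figures from the product.

```python
def queue_delay_ms(backlog_bytes, rate_bps):
    """Time needed to drain a backlog at a given link rate, in milliseconds."""
    return backlog_bytes * 8 / rate_bps * 1000


# Hypothetical 10 Mbps virtual path with 312,500 bytes waiting in the queue.
delay = queue_delay_ms(backlog_bytes=312_500, rate_bps=10_000_000)
print(delay)
```

Under these assumptions the backlog (about 208 packets of 1500 bytes) takes 250 ms to drain. Because none of those packets is discarded, TCP sees no congestion signal and keeps enlarging its window.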

When TCP is not in the slow start mode, it reduces the bandwidth by half when packet loss is detected, and otherwise increases the allowed bandwidth by roughly one packet per round trip. TCP therefore alternates between putting upward pressure on the bandwidth and backing off. Unfortunately, if the wait time reaches 800 ms by the time packet loss is detected, the bandwidth reduction causes a transmission delay.
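The alternation between upward pressure and backing off can be sketched with a simplified model. It assumes that the window grows by about one packet per round trip outside slow start, and that a loss is detected whenever the window exceeds a fixed `capacity` (a hypothetical stand-in for what the path and its queue can hold).

```python
def aimd_windows(start_window, capacity, round_trips):
    """Return the window (in packets) at each round trip under a simplified
    additive-increase, multiplicative-decrease model."""
    window = start_window
    history = []
    for _ in range(round_trips):
        history.append(window)
        if window > capacity:
            # Loss detected: cut the window in half (never below one packet).
            window = max(1, window // 2)
        else:
            # No loss: probe for more bandwidth, one packet per round trip.
            window += 1
    return history


print(aimd_windows(start_window=8, capacity=10, round_trips=8))
# prints [8, 9, 10, 11, 5, 6, 7, 8]
```

The window climbs until it overshoots the capacity, halves, and then climbs again.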

Impact on QoS fairness

When TCP transmission delay occurs, providing any kind of fairness guarantee within a virtual-path class is difficult. The virtual path scheduler must apply tail-drop behavior to avoid holding enormous amounts of traffic. The nature of TCP connections is such that a small number of traffic flows can fill the virtual path, making it difficult for a new TCP connection to achieve a fair share of the bandwidth. Sharing bandwidth fairly requires making sure that bandwidth is available for new packets to be transmitted.

Random Early Detection

Random Early Detection (RED) prevents traffic queues from filling up and causing tail-drop actions. It prevents needless queuing by the virtual path scheduler, without affecting the throughput that a TCP connection can achieve.
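The general idea can be sketched with the classic RED scheme: track a smoothed average of the queue depth and drop arriving packets with a probability that rises as that average grows. This page does not describe how Citrix SD-WAN implements RED internally, so the thresholds, the averaging weight, and all function names below are assumptions for illustration only. The sketch also omits refinements of the classic algorithm, such as spacing drops by the number of packets since the last drop.

```python
import random


def update_average(avg_queue, current_queue, weight):
    """Exponentially weighted moving average of the queue depth."""
    return (1 - weight) * avg_queue + weight * current_queue


def red_drop_probability(avg_queue, min_threshold, max_threshold, max_probability):
    """Drop probability for an arriving packet given the average queue depth."""
    if avg_queue < min_threshold:
        return 0.0  # short queue: never drop
    if avg_queue >= max_threshold:
        return 1.0  # long queue: always drop
    # In between, the probability rises linearly up to max_probability.
    span = max_threshold - min_threshold
    return max_probability * (avg_queue - min_threshold) / span


def should_drop(avg_queue, min_threshold, max_threshold, max_probability, rng=random):
    """Randomly decide whether to drop the arriving packet."""
    probability = red_drop_probability(
        avg_queue, min_threshold, max_threshold, max_probability
    )
    return rng.random() < probability


# Hypothetical thresholds, expressed in packets.
print(red_drop_probability(40, min_threshold=20, max_threshold=60, max_probability=0.1))
```

Because a few packets are dropped early and at random, senders back off before the queue fills, which keeps the wait time low and leaves room for new connections.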

How to use RED

- Start a TCP session to create the virtual path. Verify that, with RED enabled, the wait time on that class stays at around 50 ms in the steady state.

- Start a second TCP session and verify that both TCP sessions share the virtual path bandwidth evenly. Verify that the wait time on the class stays at the steady-state value.

- Verify that the Configuration Editor can be used to enable and disable RED and that it displays the correct value for the parameter.

- Verify that the View Configuration page in the SD-WAN GUI displays whether RED is enabled for a rule.

How to enable RED

-

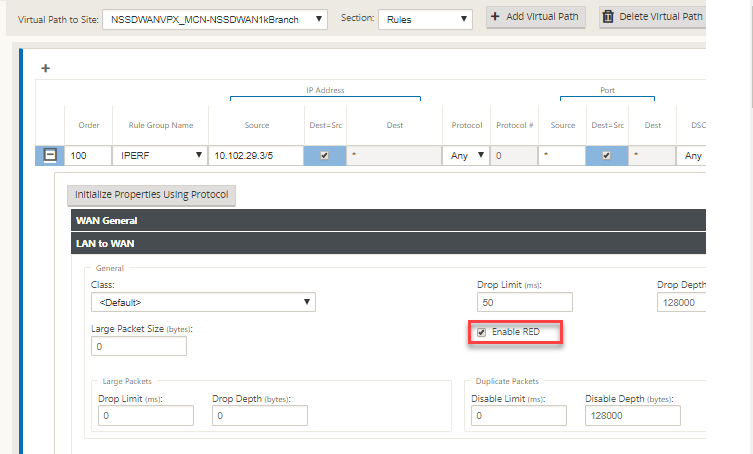

Navigate to Configuration editor > Connections > Virtual Paths > [Select Virtual Path] > Rules > Select Rule, for example; (VOIP).

-

Expand the LAN to WAN pane. Under LAN to WAN section, click the Enable RED checkbox to enable it for TCP based rules.
