Deploy the NetScaler Ingress Controller for NetScaler with admin partitions

NetScaler Ingress Controller automatically configures one or more NetScaler appliances based on the Ingress resource configuration. The ingress NetScaler appliance (MPX or VPX) can be partitioned into logical entities called admin partitions, where each partition can be configured and used as a separate NetScaler appliance. For more information, see Admin Partition. You can also deploy NetScaler Ingress Controller to configure NetScaler with admin partitions.

For NetScaler with admin partitions, you must deploy a separate instance of NetScaler Ingress Controller for each partition. Each partition must also be associated with a partition user that is specific to its NetScaler Ingress Controller instance.

Note:

NetScaler Metrics Exporter supports exporting metrics from the admin partitions of NetScaler.

Prerequisites

Ensure that:

- Admin partitions are configured on the NetScaler appliance. For instructions, see Configure admin partitions.

- A partition user is created specifically for the NetScaler Ingress Controller. NetScaler Ingress Controller configures the NetScaler using this partition user account. Ensure that you do not associate this partition user with other partitions in the NetScaler appliance.

Note:

For SSL-related use cases in the admin partition, ensure that you use NetScaler version 12.0-56.8 or later.

To deploy the NetScaler Ingress Controller for NetScaler with admin partitions

- Download the citrix-k8s-ingress-controller.yaml file using the following command:

  ```
  wget https://raw.githubusercontent.com/citrix/citrix-k8s-ingress-controller/master/deployment/baremetal/citrix-k8s-ingress-controller.yaml
  ```

- Edit the citrix-k8s-ingress-controller.yaml file and enter the values for the following environment variables:

  | Environment variable | Mandatory or optional | Description |
  | --- | --- | --- |
  | NS_IP | Mandatory | The IP address of the NetScaler appliance. For more details, see Prerequisites. |
  | NS_USER and NS_PASSWORD | Mandatory | The user name and password of the partition user that you have created for the NetScaler Ingress Controller. For more details, see Prerequisites. |
  | NS_VIP | Mandatory | NetScaler Ingress Controller uses the IP address provided in this environment variable to configure a virtual IP address on the NetScaler that receives the Ingress traffic. Note: NS_VIP acts as a fallback when the frontend-ip annotation is not provided in the Ingress YAML. Supported only for Ingress. |
  | NS_SNIPS | Optional | Specifies the SNIP addresses on the NetScaler appliance or the SNIP addresses on a specific admin partition on the NetScaler appliance. |
  | NS_ENABLE_MONITORING | Mandatory | Set the value to Yes to monitor NetScaler. Note: Ensure that you disable NetScaler monitoring for NetScaler with admin partitions by setting the value to No. |
  | EULA | Mandatory | The End User License Agreement. Specify the value as Yes. |
  | Kubernetes_url | Optional | The kube-apiserver URL that NetScaler Ingress Controller uses to register events. If the value is not specified, NetScaler Ingress Controller uses the internal kube-apiserver IP address. |
  | LOGLEVEL | Optional | The log level that controls the logs generated by NetScaler Ingress Controller. The default value is DEBUG. The supported values are CRITICAL, ERROR, WARNING, INFO, and DEBUG. For more information, see Log Levels. |
  | NS_PROTOCOL and NS_PORT | Optional | The protocol and port that NetScaler Ingress Controller uses to communicate with NetScaler. By default, NetScaler Ingress Controller uses HTTPS on port 443. You can also use HTTP on port 80. |
  | ingress-classes | Optional | If multiple ingress load balancers are used to load balance different Ingress resources, use this environment variable to make NetScaler Ingress Controller configure only the NetScaler associated with a specific ingress class. For information on Ingress classes, see Ingress class support. |

- After you update the environment variables, save the YAML file and deploy it using the following command:

  ```
  kubectl create -f citrix-k8s-ingress-controller.yaml
  ```

- Verify that the NetScaler Ingress Controller is deployed successfully using the following command:

  ```
  kubectl get pods --all-namespaces
  ```
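For orientation, the environment variables described in the table might look like the following inside the controller's container spec. This is a sketch under stated assumptions, not the verbatim content of the downloaded file: it assumes the partition user's credentials are read from a Kubernetes Secret named nslogin with the keys username and password, and every value in angle brackets is a placeholder.

```yaml
# Sketch only: env excerpt for a controller that manages ONE admin partition.
# Assumption: credentials come from a Secret named "nslogin" with the keys
# "username" and "password". Angle-bracket values are placeholders.
env:
  - name: "NS_IP"
    value: "<IP address of the NetScaler appliance>"
  - name: "NS_USER"               # partition user created for this controller only
    valueFrom:
      secretKeyRef:
        name: nslogin
        key: username
  - name: "NS_PASSWORD"
    valueFrom:
      secretKeyRef:
        name: nslogin
        key: password
  - name: "NS_VIP"                # fallback when no frontend-ip annotation is set
    value: "<VIP in the partition>"
  - name: "NS_ENABLE_MONITORING"  # must be No for NetScaler with admin partitions
    value: "No"
  - name: "EULA"                  # accept the End User License Agreement
    value: "yes"
```

Under the same assumption, the Secret could be created before deployment with `kubectl create secret generic nslogin --from-literal=username=<partition-user> --from-literal=password=<password>`.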

Use case: How to securely deliver multitenant microservice-based applications using NetScaler admin partitions

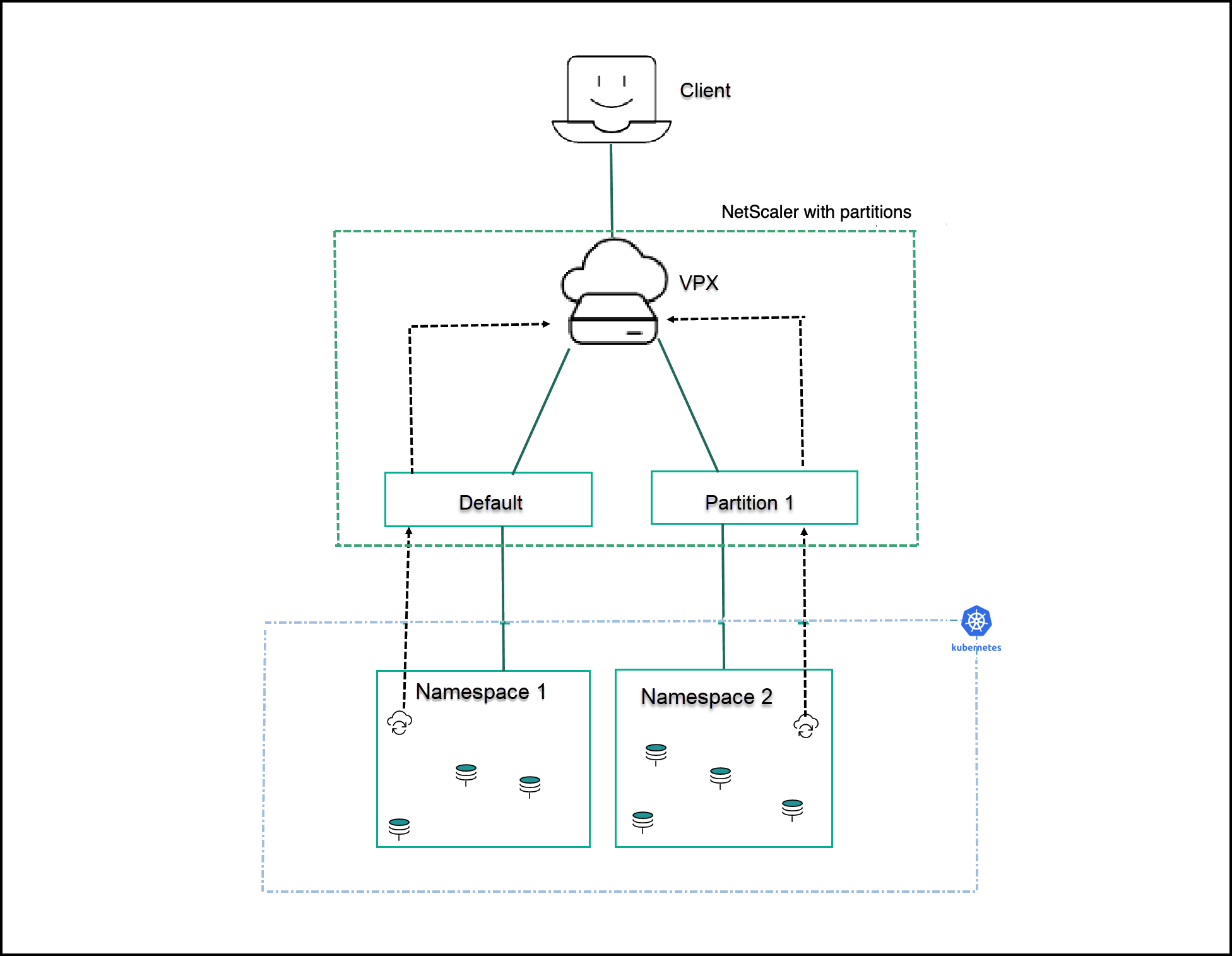

You can use NetScaler Ingress Controller with NetScaler admin partitions to isolate ingress traffic between different microservice-based applications. A NetScaler admin partition enables multitenancy at the software level in a single NetScaler instance. Each partition has its own control plane and network plane.

You can deploy one instance of NetScaler Ingress Controller in each namespace in a cluster.

For example, suppose you have two namespaces in a Kubernetes cluster and you want to isolate them from each other under two different administrators. You can use the admin partition feature to separate these two namespaces: create namespace 1 and namespace 2, and deploy a separate NetScaler Ingress Controller instance in each of them.

Each NetScaler Ingress Controller instance sends configuration instructions to its respective NetScaler partition using the system user account specified in its YAML manifest.

In this example, the apache and guestbook sample applications are deployed in two different namespaces (namespace 1 and namespace 2, respectively) in a Kubernetes cluster. The apache and guestbook application teams each want to manage their workloads independently and do not want to share resources. NetScaler admin partitions provide this multitenancy: in this example, two partitions (the default partition and p1) are used to manage the two application workloads separately.

The following prerequisites apply:

- Ensure that you have configured admin partitions on the NetScaler appliance. For instructions, see Configure admin partitions.

- Ensure that you create a partition user account specifically for the NetScaler Ingress Controller. NetScaler Ingress Controller configures the NetScaler using this partition user account. Ensure that you do not associate this partition user with other partitions in the NetScaler appliance.

Example

The following example scenario shows how to deploy different applications in different namespaces of a Kubernetes cluster and how to isolate their requests on the ADC by using admin partitions.

In this example, two sample applications are deployed in two different namespaces in a Kubernetes cluster. The default partition in NetScaler is used for the apache application, and the admin partition p1 is used for the guestbook application.

Create namespaces

Create two namespaces ns1 and ns2 using the following commands:

```
kubectl create namespace ns1
kubectl create namespace ns2
```
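If you prefer a declarative manifest to the two commands above, the same namespaces can be described in YAML. This is an equivalent sketch; the file name namespaces.yaml used below is hypothetical.

```yaml
# Declarative equivalent of "kubectl create namespace ns1" and "... ns2".
apiVersion: v1
kind: Namespace
metadata:
  name: ns1
---
apiVersion: v1
kind: Namespace
metadata:
  name: ns2
```

Apply it with `kubectl apply -f namespaces.yaml`. Because `kubectl apply` is idempotent, rerunning it does not fail if the namespaces already exist.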

Configurations in namespace ns1

- Deploy the apache application in ns1.

  ```yaml
  apiVersion: v1
  kind: Namespace
  metadata:
    name: ns1
  ---
  apiVersion: apps/v1
  kind: Deployment
  metadata:
    labels:
      app: apache-ns1
    name: apache-ns1
    namespace: ns1
  spec:
    replicas: 2
    selector:
      matchLabels:
        app: apache-ns1
    template:
      metadata:
        labels:
          app: apache-ns1
      spec:
        containers:
        - image: httpd
          name: httpd
  ---
  apiVersion: v1
  kind: Service
  metadata:
    creationTimestamp: null
    labels:
      app: apache-ns1
    name: apache-ns1
    namespace: ns1
  spec:
    ports:
    - port: 80
      protocol: TCP
      targetPort: 80
    selector:
      app: apache-ns1
  ```

- Deploy NetScaler Ingress Controller in ns1. You can use either the YAML file or the Helm chart to deploy NetScaler Ingress Controller. Ensure that you use the user credentials that are bound to the default partition.

  ```
  helm install cic-def-part-ns1 citrix/citrix-ingress-controller --set nsIP=<nsIP of ADC>,license.accept=yes,adcCredentialSecret=nslogin,ingressClass[0]=citrix-def-part-ns1 --namespace ns1
  ```

- Deploy the Ingress resource.

  ```yaml
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: ingress-apache-ns1
    namespace: ns1
    annotations:
      ingress.citrix.com/frontend-ip: "< ADC VIP IP >"
  spec:
    ingressClassName: "citrix-def-part-ns1"
    rules:
    - host: apache-ns1.com
      http:
        paths:
        - backend:
            service:
              name: apache-ns1
              port:
                number: 80
          pathType: Prefix
          path: /index.html
  ```

- NetScaler Ingress Controller in ns1 configures the ADC entities in the default partition.
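The helm install command for ns1 passes adcCredentialSecret=nslogin but does not create that Secret, so it must already exist in ns1 before you install the chart. A minimal sketch, assuming the chart reads the keys username and password; the values are placeholders for the user bound to the default partition.

```yaml
# Sketch: Secret referenced by adcCredentialSecret=nslogin in namespace ns1.
# Assumption: the chart expects the keys "username" and "password".
apiVersion: v1
kind: Secret
metadata:
  name: nslogin
  namespace: ns1
type: Opaque
stringData:                       # plain text; Kubernetes stores it base64-encoded
  username: "<default-partition-user>"
  password: "<password>"
```

An equivalent imperative form is `kubectl create secret generic nslogin --from-literal=username=<default-partition-user> --from-literal=password=<password> -n ns1`.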

Configurations in namespace ns2

- Deploy the guestbook application in ns2.

  ```yaml
  apiVersion: v1
  kind: Namespace
  metadata:
    name: ns2
  ---
  apiVersion: v1
  kind: Service
  metadata:
    name: redis-master
    namespace: ns2
    labels:
      app: redis
      tier: backend
      role: master
  spec:
    ports:
    - port: 6379
      targetPort: 6379
    selector:
      app: redis
      tier: backend
      role: master
  ---
  apiVersion: apps/v1 # for k8s versions before 1.9.0 use apps/v1beta2 and before 1.8.0 use extensions/v1beta1
  kind: Deployment
  metadata:
    name: redis-master
    namespace: ns2
  spec:
    selector:
      matchLabels:
        app: redis
        role: master
        tier: backend
    replicas: 1
    template:
      metadata:
        labels:
          app: redis
          role: master
          tier: backend
      spec:
        containers:
        - name: master
          image: k8s.gcr.io/redis:e2e  # or just image: redis
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
          ports:
          - containerPort: 6379
  ---
  apiVersion: v1
  kind: Service
  metadata:
    name: redis-slave
    namespace: ns2
    labels:
      app: redis
      tier: backend
      role: slave
  spec:
    ports:
    - port: 6379
    selector:
      app: redis
      tier: backend
      role: slave
  ---
  apiVersion: apps/v1 # for k8s versions before 1.9.0 use apps/v1beta2 and before 1.8.0 use extensions/v1beta1
  kind: Deployment
  metadata:
    name: redis-slave
    namespace: ns2
  spec:
    selector:
      matchLabels:
        app: redis
        role: slave
        tier: backend
    replicas: 2
    template:
      metadata:
        labels:
          app: redis
          role: slave
          tier: backend
      spec:
        containers:
        - name: slave
          image: gcr.io/google_samples/gb-redisslave:v1
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
          env:
          - name: GET_HOSTS_FROM
            value: dns
            # If your cluster config does not include a dns service, then to
            # instead access an environment variable to find the master
            # service's host, comment out the 'value: dns' line above, and
            # uncomment the line below:
            # value: env
          ports:
          - containerPort: 6379
  ---
  apiVersion: v1
  kind: Service
  metadata:
    name: frontend
    namespace: ns2
    labels:
      app: guestbook
      tier: frontend
  spec:
    # if your cluster supports it, uncomment the following to automatically create
    # an external load-balanced IP for the frontend service.
    # type: LoadBalancer
    ports:
    - port: 80
    selector:
      app: guestbook
      tier: frontend
  ---
  apiVersion: apps/v1 # for k8s versions before 1.9.0 use apps/v1beta2 and before 1.8.0 use extensions/v1beta1
  kind: Deployment
  metadata:
    name: frontend
    namespace: ns2
  spec:
    selector:
      matchLabels:
        app: guestbook
        tier: frontend
    replicas: 3
    template:
      metadata:
        labels:
          app: guestbook
          tier: frontend
      spec:
        containers:
        - name: php-redis
          image: gcr.io/google-samples/gb-frontend:v4
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
          env:
          - name: GET_HOSTS_FROM
            value: dns
            # If your cluster config does not include a dns service, then to
            # instead access environment variables to find service host
            # info, comment out the 'value: dns' line above, and uncomment the
            # line below:
            # value: env
          ports:
          - containerPort: 80
  ```

- Deploy NetScaler Ingress Controller in namespace ns2. Ensure that you use the user credentials that are bound to the partition p1.

  ```
  helm install cic-adm-part-p1 citrix/citrix-ingress-controller --set nsIP=<nsIP of ADC>,nsSNIPS='[<SNIPs in partition p1>]',license.accept=yes,adcCredentialSecret=admin-part-user-p1,ingressClass[0]=citrix-adm-part-ns2 --namespace ns2
  ```

- Deploy the Ingress resource for the guestbook application.

  ```yaml
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    annotations:
      ingress.citrix.com/frontend-ip: "<VIP in partition 1>"
    name: guestbook-ingress
    namespace: ns2
  spec:
    ingressClassName: citrix-adm-part-ns2
    rules:
    - host: www.guestbook.com
      http:
        paths:
        - backend:
            service:
              name: frontend
              port:
                number: 80
          path: /
          pathType: Prefix
  ```

- NetScaler Ingress Controller in ns2 configures the ADC entities in partition p1.
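The helm install command for ns2 references adcCredentialSecret=admin-part-user-p1, which must exist in ns2 before you install the chart. A minimal sketch, assuming the chart reads the keys username and password; the values are placeholders for the partition user that is bound only to p1 and to no other partition.

```yaml
# Sketch: Secret referenced by adcCredentialSecret=admin-part-user-p1 in ns2.
# Assumption: the chart expects the keys "username" and "password".
apiVersion: v1
kind: Secret
metadata:
  name: admin-part-user-p1
  namespace: ns2
type: Opaque
stringData:                       # plain text; Kubernetes stores it base64-encoded
  username: "<partition-p1-user>" # bound to partition p1 only
  password: "<password>"
```

Keeping this Secret in ns2 means only the controller instance for partition p1 can read these credentials, which preserves the per-tenant isolation described above.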