Policy-based routing for multiple Kubernetes clusters

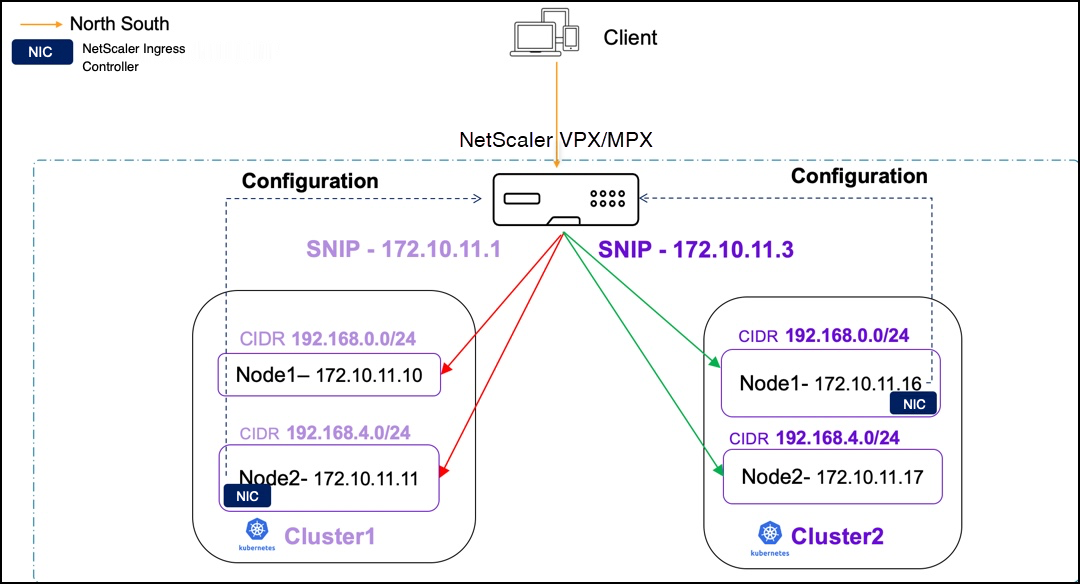

When a single NetScaler load balances multiple Kubernetes clusters, NetScaler Ingress Controller adds the pod CIDR networks to NetScaler as static routes. These routes establish network connectivity between the Kubernetes pods and NetScaler. However, when the pod CIDRs of different clusters overlap, the routes can conflict. NetScaler supports policy-based routing (PBR) to resolve the networking conflicts in such scenarios. In PBR, routing decisions are made based on the criteria that you specify. Typically, you specify a next hop to which NetScaler VPX or NetScaler MPX sends the selected packets.

In a multi-cluster Kubernetes environment, PBR is implemented by reserving a subnet IP address (SNIP) for each Kubernetes cluster or NetScaler Ingress Controller. Using a net profile, the SNIP is bound to all service groups created by the same NetScaler Ingress Controller. As a result, all traffic generated from service groups that belong to the same cluster uses the same SNIP as its source IP address.
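To make the idea concrete, the following Python sketch models how a rule that matches on the source SNIP can tell apart two clusters that reuse the same pod CIDR. It is an illustration only, not NetScaler's implementation. The `PbrRule` type, the `select_next_hop` function, and every address are hypothetical.

```python
import ipaddress
from typing import NamedTuple, Optional


class PbrRule(NamedTuple):
    src_ip: str    # SNIP reserved for one cluster (hypothetical value)
    dest_net: str  # pod CIDR reachable through next_hop
    next_hop: str  # Kubernetes node that hosts the pod CIDR


# Both clusters use the same pod CIDR, so a destination-only static
# route could not tell them apart. All addresses are illustrative.
RULES = [
    PbrRule("192.0.2.10", "10.244.1.0/24", "198.51.100.11"),  # cluster A
    PbrRule("192.0.2.20", "10.244.1.0/24", "203.0.113.21"),   # cluster B
]


def select_next_hop(src_ip: str, dest_ip: str) -> Optional[str]:
    """Return the next hop of the first rule matching source and destination."""
    dest = ipaddress.ip_address(dest_ip)
    for rule in RULES:
        if src_ip == rule.src_ip and dest in ipaddress.ip_network(rule.dest_net):
            return rule.next_hop
    return None


# Same pod IP, different source SNIP, so a different next hop is chosen.
print(select_next_hop("192.0.2.10", "10.244.1.5"))
print(select_next_hop("192.0.2.20", "10.244.1.5"))
```

Because the source address is part of the match, the overlapping destination range no longer causes ambiguity.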

In the following sample topology, PBR is configured for two Kubernetes clusters, which are load balanced using NetScaler MPX or NetScaler VPX.

Configure PBR using NetScaler Ingress Controller

To configure PBR, you need one or more SNIPs per Kubernetes cluster. You can provide the SNIP values using the `nsSNIPS` parameter in the NetScaler Ingress Controller (NSIC) Helm install command.

Use the following values.yml file in the helm install command to deploy NetScaler Ingress Controller and configure PBR.

    nsIP: <NS_IP>
    license:
      accept: yes
    adcCredentialSecret: <secret>
    nodeWatch: True
    clusterName: <name of cluster>
    nsSNIPS: '["1.2.3.4", "5.6.7.8"]'

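In the sample, the `nsSNIPS` value is a quoted string that contains a list of addresses. Before running the install, you may want to sanity-check that string. The sketch below assumes the value should parse as a JSON array of IP addresses, which is inferred from the sample and not from any statement about how NetScaler Ingress Controller reads it. The `parse_ns_snips` helper is hypothetical.

```python
import ipaddress
import json
from typing import List


def parse_ns_snips(raw: str) -> List[str]:
    """Parse an nsSNIPS-style string and return the validated addresses."""
    values = json.loads(raw)  # raises ValueError on malformed input
    if not isinstance(values, list) or not values:
        raise ValueError("nsSNIPS must be a non-empty list")
    snips = []
    for value in values:
        if not isinstance(value, str):
            raise ValueError("each SNIP must be a quoted string")
        # ip_address raises ValueError for anything that is not a valid IP.
        snips.append(str(ipaddress.ip_address(value)))
    return snips


print(parse_ns_snips('["1.2.3.4", "5.6.7.8"]'))
```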

Validate PBR configuration on NetScaler after deploying NetScaler Ingress Controller

This validation example uses a two-node Kubernetes cluster in which NetScaler Ingress Controller is deployed with a ConfigMap that provides two SNIPs.

You can verify that NetScaler Ingress Controller adds the following configurations to NetScaler:

- To verify whether the IPset of all NS_SNIPs is added, run the following command: `show ipset k8s-pbr_ipset`.

- A net profile is added with the `SrcIP` set to the `IPset`.

- To verify whether the service group added by NetScaler Ingress Controller contains the net profile set, run `show servicegroup`.

- Run the following command to check whether the PBR is added: `show pbr`.

  Here:

  - The number of PBRs is equivalent to (number of SNIPs) * (number of Kubernetes nodes). In this case, it adds four (2 * 2) PBRs.
  - The `srcIP` of the PBR is the `NS_SNIPS` provided to NetScaler Ingress Controller by ConfigMap. The `destIP` is the CNI overlay subnet range of the Kubernetes node.
  - `NextHop` is the IP address of the Kubernetes node.

- You can also use the logs of the NetScaler Ingress Controller to validate the configuration.
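When comparing the PBR listing against what you expect, it can help to enumerate the expected entries first. The following sketch builds one entry per (SNIP, node) pair, following the rule described above. The `expected_pbrs` helper, the node IP addresses, and the pod CIDRs are hypothetical; only the two SNIPs come from the sample values file.

```python
from itertools import product
from typing import Dict, List, NamedTuple


class ExpectedPbr(NamedTuple):
    src_ip: str    # one of the configured SNIPs
    dest_net: str  # CNI overlay subnet of a node
    next_hop: str  # IP address of that node


def expected_pbrs(snips: List[str], nodes: Dict[str, str]) -> List[ExpectedPbr]:
    """Build one expected PBR per (SNIP, node) pair.

    nodes maps a node IP address to that node's CNI overlay subnet.
    """
    return [
        ExpectedPbr(src_ip=snip, dest_net=pod_cidr, next_hop=node_ip)
        for snip, (node_ip, pod_cidr) in product(snips, nodes.items())
    ]


snips = ["1.2.3.4", "5.6.7.8"]  # from the nsSNIPS sample
nodes = {  # hypothetical node IPs and pod CIDRs
    "10.0.0.11": "10.244.1.0/24",
    "10.0.0.12": "10.244.2.0/24",
}
pbrs = expected_pbrs(snips, nodes)
print(len(pbrs))  # 2 SNIPs * 2 nodes = 4
for pbr in pbrs:
    print(pbr)
```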

Configure PBR using the NetScaler node controller

You can configure PBR using the NetScaler node controller for multiple Kubernetes clusters. When a single NetScaler load balances multiple Kubernetes clusters and NetScaler node controller provides the networking, the static routes that the node controller adds to forward packets to the IP address of the VXLAN tunnel interface might cause route conflicts. To support PBR, NetScaler node controller must work in conjunction with NetScaler Ingress Controller, which binds the net profile to the service group.

Perform the following steps to configure PBR using the NetScaler node controller:

- While starting NetScaler node controller, provide the `CLUSTER_NAME` as an environment variable. Specifying this variable indicates that it is a multi-cluster deployment, and the NetScaler node controller configures PBR instead of static routes.

  Example:

      helm install nsnc netscaler/netscaler-node-controller --set license.accept=yes,nsIP=<NSIP>,vtepIP=<NetScaler SNIP>,vxlan.id=<VXLAN ID>,vxlan.port=<VXLAN PORT>,network=<IP-address-range-for-VTEP-overlay>,adcCredentialSecret=<Secret-for-NetScaler-credentials>,cniType=<CNI-overlay-name>,clusterName=<cluster-name>

- While deploying NetScaler Ingress Controller, provide the `CLUSTER_NAME` as an environment variable. This value should be the same as the value provided in the node controller. Also enable PBR using the argument `nsncPbr=True` to configure PBR on NetScaler.

Notes:

- The value provided for `CLUSTER_NAME` in NetScaler node controller and NetScaler Ingress Controller deployment files should match when they are deployed in the same Kubernetes cluster.

- The `CLUSTER_NAME` is used while creating the net profile entity and binding it to service groups on NetScaler VPX or NetScaler MPX.

Validate PBR configuration on NetScaler after deploying the NetScaler node controller

This validation example uses a two-node Kubernetes cluster with NetScaler node controller and NetScaler Ingress Controller deployed.

You can verify that the following configurations are added to the NetScaler by NetScaler node controller:

- Run the following command: `show netprofile`. A net profile is added with the value of `srcIP` set to the SNIP added by node controller while creating the VXLAN tunnel network between NetScaler and Kubernetes nodes.

- Run the following command: `show servicegroup`. NetScaler Ingress Controller binds the net profile to the service groups it creates.

- Run the following command: `show pbr`. NetScaler node controller adds PBRs.

  Here:

  - The number of PBRs is equal to the number of Kubernetes nodes. In this case, it adds two PBRs.
  - The `srcIP` of the PBR is the SNIP added by NetScaler node controller in the tunnel network. The `destIP` is the CNI overlay subnet range of the Kubernetes node. The `NextHop` is the IP address of the Kubernetes node's VXLAN tunnel interface.
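With the node controller there is a single source SNIP, so the expected set is one PBR per node, and the next hop is the node's VXLAN tunnel interface and not the node IP address. A minimal sketch of that expectation follows. The `Node` type, the `expected_nsnc_pbrs` helper, and all addresses are hypothetical.

```python
from typing import Dict, List, NamedTuple


class Node(NamedTuple):
    pod_cidr: str  # CNI overlay subnet of the node
    vxlan_ip: str  # IP address of the node's VXLAN tunnel interface


def expected_nsnc_pbrs(snip: str, nodes: List[Node]) -> List[Dict[str, str]]:
    """Return one expected PBR per node, all sharing the same source SNIP."""
    return [
        {"srcIP": snip, "destIP": node.pod_cidr, "nextHop": node.vxlan_ip}
        for node in nodes
    ]


# Hypothetical two-node cluster and tunnel SNIP.
cluster_nodes = [
    Node(pod_cidr="10.244.1.0/24", vxlan_ip="172.16.1.1"),
    Node(pod_cidr="10.244.2.0/24", vxlan_ip="172.16.2.1"),
]
nsnc_pbrs = expected_nsnc_pbrs("172.16.0.10", cluster_nodes)
print(len(nsnc_pbrs))  # one PBR per node = 2
```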

Note:

NetScaler node controller adds PBRs instead of static routes. The rest of the configuration of the VXLAN and bridge table remains the same. For more information, see the NetScaler node controller configuration.