Multi-site application overview

Thinking about providing an optimal user experience for your business applications that are delivered in multiple locations? Doing so can help you to enhance customer satisfaction, brand perception, productivity, and revenue. With the multi-site application feature, you can configure, deliver, and manage applications across multiple cloud environments for high availability and reliability.

A multi-site application provides global load balancing, site failover, and web traffic management across multiple data centers, clouds, or content delivery networks (CDNs). It also plays a key role in business use cases such as disaster recovery, application performance, application availability, and regulatory compliance.

A multi-site application routes network traffic intelligently across an organization’s data centers and public cloud provider networks. To do so, it monitors the health, availability, and latency of each site, and it applies any policies that have been configured for regulatory requirements.

Benefits

A multi-site application provides the following benefits:

- Ensures multi-site resiliency and disaster recovery - Disaster recovery capability is critical to your business because downtime is costly. With a multi-site application, you have continuous monitoring of your data centers' availability, health, and responsiveness. A multi-site application redirects traffic to the closest or best-performing data center, or to healthy data centers if there's an outage.

- Improves application performance and availability - If web traffic isn't distributed appropriately across data centers, one site might become oversubscribed while another is underutilized. This can result in poor service for some users and a risk of service disruption because of overflow. The proximity of the user to the server also affects network latency, which makes site selection a key element of service quality. By providing intelligent web traffic management, a multi-site application balances the load more evenly across sites while routing content to each user from the nearest available server.

- Increases scalability and agility - A multi-site application addresses the problem of a limited number of sites facing exponential traffic growth by letting you serve that traffic from a greater number of sites. You can add, upgrade, and deprovision sites transparently.

- Reduces latency - A high volume of traffic to a website can significantly increase latency. A multi-site application distributes network traffic across several data centers so that no single data center is overloaded with requests. It finds the site with the fastest response time (that is, the best network conditions) for each client through distributed, crowd-sourced round trip time (RTT) measurements, so that users connect to the optimal site.

- Optimizes user experience - A multi-site application allows you to globally load balance all traffic, dynamically optimize the user experience, and lower service costs. It routes client requests to the nearest data center, which accelerates application response time and minimizes network latency because content is delivered from a data center closer to the requesting user.

- Meets regulatory and security requirements - A multi-site application enables you to service a global user base in a manner that complies with government regulations for highly regulated industries such as telecommunications, defense, and healthcare.

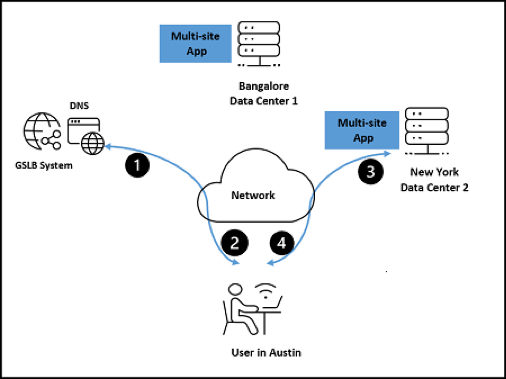

How a multi-site application works

A multi-site application routes traffic across multiple data centers and uses DNS infrastructure to connect the client to a data center. When a client sends a DNS request, the global server load balancing (GSLB) DNS server identifies the site that best meets the configured criteria. The criteria can be one or more of the following:

- Availability (health) of the data center.

- Response time of the data center.

- Geofencing rules that might limit certain data centers to specific geographic locations.

- The selected GSLB distribution algorithm.

After the connection is established, the traffic is routed directly between the client and the application.
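The selection criteria above can be pictured as a filter-then-choose pipeline. The following is a minimal sketch under stated assumptions, not the product's implementation: the `Site` fields, the `select_site` and `lowest_rtt` helpers, the RTT values, and the documentation-range IP addresses are all hypothetical, and `lowest_rtt` merely stands in for whichever algorithm is configured.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Optional


@dataclass
class Site:
    name: str
    ip: str
    healthy: bool            # latest result from the site's health monitor
    rtt_ms: float            # hypothetical RTT measured from the client's network
    allowed_countries: FrozenSet[str] = frozenset()  # hypothetical geofence; empty = any country


def select_site(sites: List[Site], client_country: str,
                algorithm: Callable[[List[Site]], Site]) -> Optional[Site]:
    """Apply the criteria in order: health, geofencing, then the configured algorithm."""
    # Availability: drop sites whose monitor reports DOWN.
    candidates = [s for s in sites if s.healthy]
    # Geofencing: keep only sites allowed to serve the client's country.
    candidates = [s for s in candidates
                  if not s.allowed_countries or client_country in s.allowed_countries]
    if not candidates:
        return None  # no eligible site for this client
    # Distribution algorithm: picks one of the remaining sites.
    return algorithm(candidates)


def lowest_rtt(candidates: List[Site]) -> Site:
    """Response-time criterion: prefer the site with the smallest RTT."""
    return min(candidates, key=lambda s: s.rtt_ms)


sites = [
    Site("bengaluru", "198.51.100.10", healthy=True, rtt_ms=240.0),
    Site("new-york", "203.0.113.20", healthy=True, rtt_ms=35.0),
]
chosen = select_site(sites, "US", lowest_rtt)
print(chosen.name, chosen.ip)  # new-york 203.0.113.20
```

Keeping the algorithm as a pluggable callable mirrors the idea that health and geofencing narrow the candidate set first, and the selected GSLB algorithm only decides among what remains.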

Example:

The following example shows how a multi-site application distributes traffic using the optimal round-trip time (optimal RTT) algorithm. For more information about supported algorithms, see Algorithms.

There are two sites or data centers, one in Bengaluru and another in New York.

- A user in Austin sends a DNS query to the DNS service hosted by the GSLB to get the IP address of the server hosting the multi-site application.

- The GSLB DNS server returns the IP address of the site that works best for the user according to the criteria. Because New York is much closer to Austin than Bengaluru is, the optimal RTT algorithm typically directs the user to the New York data center.

- The user connects to the New York data center.

- Traffic flows directly between the multi-site application and the user in Austin.

Deployment types

The following deployment types are supported for a multi-site application:

- Active-active - The multi-site application is deployed in multiple active sites or data centers. If a data center is unreachable, application instances running in the remaining data centers take over the user traffic. This deployment type is ideal when you need global distribution of traffic in a distributed environment, an optimized user experience, and reduced latency.

- Active-passive - The multi-site application is deployed in one active data center and one or more passive data centers. This deployment type is ideal for disaster recovery. The active data center serves client requests. Traffic is routed to a passive data center only when the active data center goes DOWN.

Algorithms

An algorithm controls how a multi-site application directs a client request across distributed sites or data centers. The multi-site application supports the following load balancing algorithms:

- Failover - The failover algorithm uses a simple routing logic in which a site is chosen based on its position in the priority order and its availability. The failover algorithm helps prevent disruption of access to applications delivered across multiple sites. To configure GSLB sites for high availability, select the failover algorithm and specify the priority for each site. This gives you the flexibility to shift traffic to a backup data center and fail over an entire site. You can create a failover chain that decides which site to select first, second, and so on.

- For example, add primary and standby sites. If the primary site goes DOWN, traffic is automatically diverted to a standby site. There can be multiple standby sites. Assign a priority of 1 to the primary site and increasing priorities of 2 and above to the standby sites. If the site with priority 1 is DOWN, requests are directed to the site with priority 2. If the sites with priorities 1 and 2 are both DOWN, traffic is directed to the site with priority 3, and so on.

- Round robin - The round robin algorithm distributes client requests across the sites or data centers sequentially, regardless of load. Select the round robin algorithm and assign a weight to each site. GSLB then performs weighted round robin distribution of incoming connections by skipping the lower-weighted sites at appropriate intervals. Weights are proportional: three sites weighted 2:1:1, 50:25:25, or 90:45:45 all produce the same distribution. You can globally assign weights for the prioritization and selection of each site.

- For example, suppose you have three sites selected for your multi-site application: site A, site B, and site C, with weights of 60, 50, and 10, respectively. The round robin algorithm converts these values into shares of the total weight (120): site A = 50%, site B = about 42%, and site C = about 8% (which adds up to 100%). This means that 50% of the time, user requests are routed to site A; 42% of the time to site B; and 8% of the time to site C. If all the sites are given the same weight, traffic is evenly distributed across them over time. If you have only one site, that site is used 100% of the time, regardless of its weight. Weights are only used for sites that are available, so unavailable sites cause the distribution to differ from the configured weights. For example, if site A is weighted 100 and site B is weighted 1, and site A is unavailable, all traffic is sent to site B.

- Optimal RTT - The optimal RTT algorithm measures network proximity. Select the optimal RTT algorithm and specify a penalty to choose the closest healthy data center from a latency perspective. When you specify a penalty for a site, you add extra latency to the RTT calculated by real user measurement (RUM). Penalty is a percentage value that can be applied to a site to modify its RTT, that is, to artificially increase its response time (in milliseconds). Increasing the RTT degrades the site's measured performance, so the likelihood of it being picked is lower.

- For example, a site might be expensive (hosted in a country where bandwidth or infrastructure cost is higher), and you want to reduce the likelihood of it being picked when an equivalent provider is close enough in terms of performance. So, you enter a penalty value that acts as a multiplier on the response time, lowering the probability of that site being picked. Assume that the RTT without penalty is 50 milliseconds for site A and 60 milliseconds for site B. Specify a penalty of two (2), that is, 200%, for site A and zero (0) for site B. The RTT for site A is calculated as follows:

- Site A RTT with penalty applied = RTT (in milliseconds) x (1 + Penalty) = 50 x (1 + 2) = 150 milliseconds

Thus, site A, which now has an RTT of 150 milliseconds, isn't selected over site B, which still has an RTT of 60 milliseconds.

- Static proximity - The static proximity algorithm sends client requests to the site that is geographically nearest to the user location. The algorithm looks up the built-in GeoIP database and determines the client location based on the IP address derived from the DNS query. After identifying the location, the algorithm checks whether the site is healthy and in the active state to process client requests. The healthy, active site that is geographically nearest to the user location responds to client requests.

- For example, consider a multi-site application having a healthy, active site in the United States of America and in Singapore. With static proximity, client requests coming from India are sent to the Singapore site as it is geographically nearer.
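The four algorithms can be sketched in a few small functions. This is a minimal sketch under stated assumptions: sites are plain tuples and dictionaries, all function names are hypothetical, the penalty is applied as RTT x (1 + penalty) exactly as in the worked example, and hard-coded approximate (latitude, longitude) pairs stand in for the built-in GeoIP database. The generator uses the smooth weighted round robin technique, a swapped-in technique that yields the configured proportions but is not necessarily how the product skips lower-weighted sites.

```python
import math
from itertools import islice


def failover(sites):
    """sites: list of (name, priority, healthy); the healthy site with the lowest priority number wins."""
    healthy = [s for s in sites if s[2]]
    return min(healthy, key=lambda s: s[1])[0] if healthy else None


def weight_shares(weights):
    """Percentage of traffic each available site receives, given {site: weight}."""
    total = sum(weights.values())
    return {site: 100 * w / total for site, w in weights.items()}


def weighted_round_robin(weights):
    """Smooth weighted round robin (a stand-in technique): yields sites in proportion to weight."""
    total = sum(weights.values())
    current = {site: 0 for site in weights}
    while True:
        for site, w in weights.items():
            current[site] += w
        best = max(current, key=current.get)  # site furthest "behind" its share
        current[best] -= total
        yield best


def penalized_rtt(rtt_ms, penalty):
    """RTT x (1 + penalty), as in the worked example (a penalty of 2 means 200%)."""
    return rtt_ms * (1 + penalty)


def optimal_rtt(measurements):
    """measurements: {site: (rtt_ms, penalty)}; the lowest penalized RTT wins."""
    return min(measurements, key=lambda site: penalized_rtt(*measurements[site]))


def distance_km(a, b):
    """Haversine great-circle distance between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * math.asin(math.sqrt(h))


def static_proximity(client_latlon, site_latlons):
    """Nearest site among those passed in (pass only healthy, active sites)."""
    return min(site_latlons, key=lambda site: distance_km(client_latlon, site_latlons[site]))


# Usage with the values from the examples in this section (coordinates are approximate).
print(failover([("primary", 1, False), ("standby-1", 2, False), ("standby-2", 3, True)]))  # standby-2
print({s: round(p) for s, p in weight_shares({"A": 60, "B": 50, "C": 10}).items()})  # {'A': 50, 'B': 42, 'C': 8}
first_cycle = list(islice(weighted_round_robin({"A": 60, "B": 50, "C": 10}), 120))
print(optimal_rtt({"A": (50, 2), "B": (60, 0)}))  # B
print(static_proximity((28.6, 77.2), {"us": (38.9, -77.0), "singapore": (1.35, 103.8)}))  # singapore
```

Smooth weighted round robin interleaves picks instead of sending long bursts to the heaviest site, which keeps short-term distribution close to the configured weights.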

Monitors (Site health check)

Monitors determine if a site is healthy by sending a health probe to a site. If the site responds, the monitor marks the state of the site as UP. If the site doesn’t respond to the designated number of probes within the specified time, the monitor marks the site as DOWN. No requests are forwarded to this site until its state changes to UP.
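The UP/DOWN behavior described above can be sketched as a small state machine. This is a minimal sketch, assuming that a site starts UP, that a probe which times out counts as not responding, and that a single successful response marks the site UP again; `SiteMonitor` and `failures_to_down` are hypothetical names, and the threshold of 3 is only an example of the "designated number of probes".

```python
from dataclasses import dataclass


@dataclass
class SiteMonitor:
    """Sketch of a site monitor's UP/DOWN logic; not the product's implementation."""
    failures_to_down: int = 3       # hypothetical "designated number of probes"
    state: str = "UP"
    consecutive_failures: int = 0

    def record_probe(self, responded: bool) -> str:
        """Feed one probe result; a probe that times out counts as not responding."""
        if responded:
            self.consecutive_failures = 0
            self.state = "UP"
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failures_to_down:
                self.state = "DOWN"
        return self.state

    def accepts_traffic(self) -> bool:
        # No requests are forwarded to a DOWN site until it is UP again.
        return self.state == "UP"


monitor = SiteMonitor(failures_to_down=3)
print([monitor.record_probe(ok) for ok in (True, False, False, False, True)])
# ['UP', 'UP', 'UP', 'DOWN', 'UP']
```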

You can configure an HTTP, HTTPS, or TCP monitor, specify a port for the health check, and, for HTTP or HTTPS health probes, add a URL path used to determine whether the site is healthy. By default, health probes are sent to http(s)://hostname/path. To override the health probe URL, enter a custom FQDN and path, such as hostname1/path/test; the probes are then sent to http://hostname1/path/test or https://hostname1/path/test.

Note:

The custom FQDN only overrides the URL related attributes (HTTPS server name indicator and HTTP(S) host header) and not the target IP of the health probe.
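The note above separates what a custom FQDN changes (the URL, HTTP(S) Host header, and HTTPS server name indication) from what it does not change (the IP address the probe connects to). The following minimal sketch shows that split; `ProbeTarget`, `build_probe`, and its parameters are hypothetical names, and the URL is shown without the port, as in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProbeTarget:
    connect_ip: str              # where the probe's TCP connection goes: always the site itself
    port: int
    url: str                     # shown as in the text, http(s)://host/path (port omitted)
    host_header: Optional[str]   # HTTP(S) Host header; None for TCP monitors
    sni: Optional[str]           # TLS server name indication; only for HTTPS monitors


def build_probe(site_ip: str, default_host: str, port: int, monitor_type: str,
                path: str = "/", custom_fqdn: Optional[str] = None) -> ProbeTarget:
    """Sketch of how a custom FQDN changes a probe (parameter names are hypothetical)."""
    if monitor_type == "tcp":
        return ProbeTarget(site_ip, port, "", None, None)  # TCP: connection check only
    host = custom_fqdn or default_host   # custom FQDN overrides URL-related attributes only
    path = "/" + path.lstrip("/")
    scheme = monitor_type                # "http" or "https"
    return ProbeTarget(
        connect_ip=site_ip,              # never replaced by the custom FQDN
        port=port,
        url=f"{scheme}://{host}{path}",
        host_header=host,
        sni=host if scheme == "https" else None,
    )


probe = build_probe("203.0.113.20", "app.example.com", 443, "https", "path/test",
                    custom_fqdn="hostname1")
print(probe.url, probe.connect_ip)  # https://hostname1/path/test 203.0.113.20
```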

Stickiness

Some applications require stickiness between a client and a data center. All requests in a long-lived transaction from a client must be sent to the same data center; otherwise, the application session may be broken, with a negative impact on the client.

Stickiness is accomplished by enabling site persistence. With stickiness enabled, a series of client requests for the multi-site application is sent to the same back-end site instead of being load balanced, as long as that site remains healthy. Clients therefore remain sticky to a back-end site even when network conditions change (optimal RTT), a higher-priority site becomes healthy again (failover), or the round robin rotation would select a different site.

For example, an e-commerce website with a shopping cart needs to maintain the state of the connection to track the transaction. With stickiness enabled, all subsequent DNS requests from the client resolve to the same IP address of the selected data center.

If a sticky session points to a data center that is DOWN, the configured GSLB algorithm is used to select a new data center.
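Site persistence can be sketched as a lookup table consulted before the load balancing algorithm. This is a minimal sketch under assumptions: `StickySelector` is a hypothetical class, the client key (for example, a source IP address) is an assumption about how clients are identified, and the `choose` callable stands in for the configured GSLB algorithm.

```python
from typing import Callable, Dict, Set


class StickySelector:
    """Site persistence sketch: keep each client on its site while that site is healthy."""

    def __init__(self, choose: Callable[[Set[str]], str]) -> None:
        self.choose = choose                 # stands in for the configured GSLB algorithm
        self.bindings: Dict[str, str] = {}   # hypothetical client key (e.g. source IP) -> site

    def resolve(self, client: str, healthy_sites: Set[str]) -> str:
        site = self.bindings.get(client)
        if site in healthy_sites:
            return site                      # sticky: skip load balancing entirely
        # No binding yet, or the bound site is DOWN: fall back to the algorithm.
        site = self.choose(healthy_sites)
        self.bindings[client] = site
        return site


# Hypothetical algorithm that always prefers the alphabetically first site.
selector = StickySelector(lambda sites: min(sites))
print(selector.resolve("198.51.100.7", {"new-york"}))               # new-york
print(selector.resolve("198.51.100.7", {"bengaluru", "new-york"}))  # new-york (sticky)
print(selector.resolve("198.51.100.7", {"bengaluru"}))              # bengaluru (re-selected)
```

The second call shows the point of persistence: the algorithm alone would now pick `bengaluru`, but the client stays on its healthy site.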

DNS

Domain Name System (DNS) translates human-readable domain names to machine-readable IP addresses, and vice versa.

The response from a DNS server typically contains the IP address of the requested domain. If the IP address of the requested domain is unknown, the response indicates that.

Authoritative DNS integration

For a multi-site application, you can host your DNS records in a multi-site application authoritative DNS zone. This allows you to manage both static records and multi-site application records through Citrix Cloud, without relying on an external DNS registrar.

DNS time to live

The DNS time to live (TTL) indicates how long clients cache the DNS response for the multi-site application. The default TTL is 20 seconds, and we recommend keeping the default value. Lowering the TTL increases the number of DNS queries, leading to added cost and reduced performance. Increasing the TTL makes clients cache the DNS response for longer, so they might keep connecting to a data center that became unhealthy during that time.
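The TTL trade-off can be made concrete with simple arithmetic. This is a rough sketch, assuming a client that stays active and honors the TTL exactly (real resolvers and clients may cache differently); `queries_per_hour` is a hypothetical helper, and the TTL values other than the 20-second default are only examples.

```python
def queries_per_hour(ttl_seconds: int) -> float:
    """Upper bound on DNS re-resolutions per hour for one continuously active client."""
    # An active client re-resolves at most once per TTL, when its cached answer expires.
    return 3600 / ttl_seconds


for ttl in (5, 20, 300):
    # The TTL is also roughly how long a client may keep a cached answer that points
    # at a data center that has since become unhealthy.
    print(f"TTL {ttl:>3} s: up to {queries_per_hour(ttl):.0f} queries/hour per client, "
          f"cached answer may be up to {ttl} s stale")
```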

DNS fallback endpoint

You can add an endpoint that acts as a backup endpoint and responds to DNS queries when all sites associated with a multi-site application are in DOWN state. If the DNS fallback endpoint is left blank, an empty DNS response is sent back to such DNS queries.

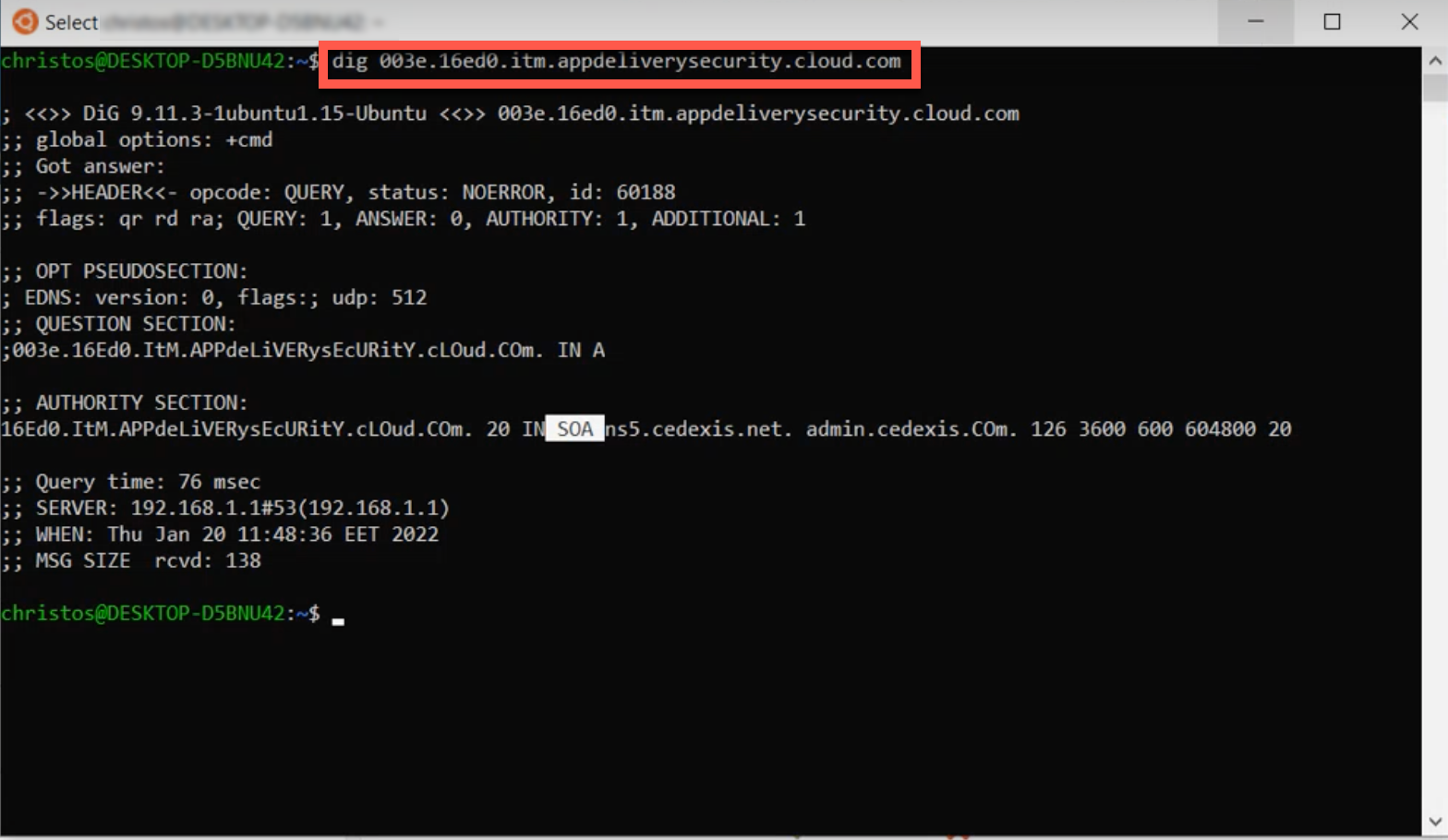

Empty DNS responses

When all sites associated with a multi-site application are in the DOWN state and no DNS fallback endpoint is configured, the application sends empty DNS responses to clients trying to connect to the server. These responses contain no IP address records, although the response code indicates success. Sending empty DNS responses prevents clients from reconnecting to a multi-site application whose sites are all DOWN.

The application checks the status of the sites every 20 seconds. As soon as any site is back in the UP state, the responses again contain the IP addresses of the requested domain.
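The three possible outcomes (a normal answer, the fallback endpoint, or an empty but successful response) can be sketched as follows. This is a minimal sketch under assumptions: `build_answer` is a hypothetical function, the fallback endpoint is modeled as an IP address, the first healthy IP stands in for the configured algorithm's choice, and "NOERROR" is the standard DNS name for a successful response code.

```python
from typing import List, Optional, Tuple


def build_answer(healthy_site_ips: List[str],
                 fallback_endpoint: Optional[str]) -> Tuple[str, List[str]]:
    """Return (response code, answer records) for a query to the multi-site application."""
    if healthy_site_ips:
        # Normal case; the first IP stands in for the configured algorithm's choice.
        return "NOERROR", healthy_site_ips[:1]
    if fallback_endpoint:
        # All sites DOWN: the fallback endpoint answers instead.
        return "NOERROR", [fallback_endpoint]
    # All sites DOWN and no fallback: a successful response with no address records.
    return "NOERROR", []


print(build_answer(["203.0.113.20"], None))  # ('NOERROR', ['203.0.113.20'])
print(build_answer([], "192.0.2.50"))        # ('NOERROR', ['192.0.2.50'])
print(build_answer([], None))                # ('NOERROR', [])
```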

To check if a multi-site application is sending an empty DNS response, run the following command at the command prompt:

dig <FQDN of the multi-site application>

In the output you can see that the DNS response does not contain any IP address record.

Example:

dig 003e.16ed0.itms.appdeliverysecurity.com

Maintenance mode

Sites require periodic maintenance. The maintenance mode feature enables you to plan for a scheduled maintenance or anticipate downtime for performing an upgrade, testing network connectivity, diagnosing an underlying hardware issue, and so on. Once your scheduled maintenance is completed, you can disable the maintenance mode.

Note:

For sites in maintenance mode, the multi-site application is marked as under maintenance in the dashboard.

Geofencing

Regulatory compliance differs from country to country. Consider this aspect when delivering applications across multiple geographical locations and when data flows across borders. Many organizations face mandates regarding the geographic location of data storage and processing. For example, the General Data Protection Regulation (GDPR) dictates that EU-based users must be served by local servers for certain application requirements.

The multi-site application feature helps you to adhere to regulatory compliance. With the geofencing feature, a multi-site application can be configured to use specific data centers to serve users in specific regions, simplifying compliance with this rule. Based on the countries configured in the Geo IP database, the requests are forwarded to the servers that host content customized for the regions.
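A geofencing rule can be sketched as a mapping from client country to the sites allowed to serve it. This is a minimal sketch under assumptions: the site names, the `GEOFENCE` table, and `eligible_sites` are hypothetical, the client's country is assumed to come from a GeoIP lookup, and countries without a rule may use any site.

```python
from typing import Dict, FrozenSet, List, Set

# Hypothetical geofencing policy: ISO country code -> sites allowed to serve it.
EU_SITES: FrozenSet[str] = frozenset({"frankfurt", "amsterdam"})
GEOFENCE: Dict[str, FrozenSet[str]] = {"DE": EU_SITES, "FR": EU_SITES, "IT": EU_SITES}
ALL_SITES: FrozenSet[str] = frozenset({"frankfurt", "amsterdam", "new-york", "singapore"})


def eligible_sites(country: str, healthy: Set[str]) -> List[str]:
    """Healthy sites allowed to serve a client from `country` (from a GeoIP lookup)."""
    allowed = GEOFENCE.get(country, ALL_SITES)  # countries without a rule may use any site
    # A geofenced client is never spilled over to an out-of-region site, even if
    # every in-region site is DOWN; the result is then an empty list.
    return sorted(allowed & healthy)


print(eligible_sites("DE", set(ALL_SITES)))  # ['amsterdam', 'frankfurt']
print(eligible_sites("US", set(ALL_SITES)))  # ['amsterdam', 'frankfurt', 'new-york', 'singapore']
print(eligible_sites("FR", {"new-york"}))    # []
```

Refusing to spill geofenced traffic to other regions is a design choice in this sketch that favors compliance over availability.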