Use case 7: Configure load balancing in DSR mode by using IP Over IP

You can configure a NetScaler appliance to use direct server return (DSR) mode across Layer 3 networks by using IP tunneling, also called IP over IP configuration. As with standard load balancing configurations for DSR mode, this allows servers to respond to clients directly instead of using a return path through the NetScaler appliance. This improves response time and throughput. As with standard DSR mode, the NetScaler appliance monitors the servers and performs health checks on the application ports.

With IP over IP configuration, the NetScaler appliance and the servers do not need to be on the same Layer 2 subnet. Instead, the NetScaler appliance encapsulates the packets before sending them to the destination server. After the destination server receives the packets, it decapsulates the packets, and then sends its responses directly to the client. This is often referred to as L3DSR.
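Because encapsulation wraps each packet in an additional IPv4 header, the tunneled packet is larger than the original. The following is a rough sketch for estimating the largest inner packet that fits a given path MTU. It assumes a plain 20-byte outer IPv4 header without options; the helper name `ipip_inner_mtu` is hypothetical and not part of any NetScaler or Linux tooling.

```shell
#!/bin/sh
# Hypothetical helper: estimate the largest inner packet that fits into an
# IP over IP tunnel without fragmentation.
# Assumption: the outer IPv4 header carries no options, so it is 20 bytes.
ipip_inner_mtu() {
    path_mtu=$1
    outer_header=20
    echo $((path_mtu - outer_header))
}

# Example: a standard 1500-byte Ethernet path
ipip_inner_mtu 1500   # prints 1480
```

If large responses or requests stall in an L3DSR setup, comparing this estimate with the MTU configured on the tunnel path is one possible starting point for troubleshooting.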

To configure L3DSR mode on your NetScaler appliance:

- Create a load balancing virtual server. Set the mode to IPTUNNEL and enable sessionless tracking.

- Create services. Create a service for each back-end application and bind the services to the virtual server.

- Configure for decapsulation. Configure either a NetScaler appliance or a back-end server to act as a decapsulator.

Note:

When you use a NetScaler appliance as the decapsulator, the setup consists of an IP tunnel between the two ADC appliances, with the back-end ADC performing L2DSR to the real servers.

Configure a load balancing virtual server

Configure a virtual server to handle requests to your applications. Assign the service type that matches the service, or use a type of ANY for multiple services. Set the forwarding method to IPTUNNEL and enable the virtual server to operate in sessionless mode. Configure any load balancing method that you want to use.

To create and configure a load balancing virtual server for IP over IP DSR by using the command line interface

At the command prompt, type the following commands to configure a load balancing virtual server for IP over IP DSR and verify the configuration:

add lb vserver <name> <serviceType> <IPAddress> <port> -lbMethod <method> -m <ipTunnelTag> -sessionless [ENABLED | DISABLED]

show lb vserver <name>

<!--NeedCopy-->

Example:

In the following example, the load balancing method is set to SOURCEIPHASH and sessionless load balancing is enabled.

add lb vserver Vserver-LB-1 ANY 1.1.1.80 * -lbMethod SourceIPHash -m IPTUNNEL -sessionless ENABLED

<!--NeedCopy-->

To create and configure a load balancing virtual server for IP over IP DSR by using the GUI

- Navigate to Traffic Management > Load Balancing > Virtual Servers.

- Create a virtual server, and specify Redirection Mode as IP Tunnel Based.

Configure services for IP over IP DSR

After creating your load balancing virtual server, configure one service for each of your applications. The service handles traffic from the NetScaler appliance to those applications, and allows the NetScaler appliance to monitor the health of each application.

Configure the services to use USIP mode and, for tunnel-based monitoring, bind a monitor that has the IP tunnel option enabled to each service.

To create and configure a service for IP over IP DSR by using the command line interface

At the command prompt, type the following commands to create a service and optionally, create a monitor and bind it to the service:

add service <serviceName> <serverName> <serviceType> <port> -usip <usip>

add monitor <monitorName> <monitorType> -destip <ip> -iptunnel <iptunnel>

bind service <serviceName> -monitorName <monitorName>

<!--NeedCopy-->

Example:

In the following example, a PING monitor with the IP tunnel option enabled is created and bound to a service that uses USIP mode.

add monitor mon_DSR PING -destip 1.1.1.80 -iptunnel yes

add service svc_DSR01 2.2.2.100 ANY * -usip yes

bind service svc_DSR01 -monitorName mon_DSR

<!--NeedCopy-->

An alternative approach that simplifies routing on both the server and the ADC appliance is to set up both the ADC and the server to use an IP address from the same subnet. Doing so ensures that any traffic destined for a tunnel endpoint is sent over the tunnel. The following example uses 10.0.1.0/30.

Note:

The purpose of the monitor is to ensure that the tunnel is active by reaching the loopback of each server through the IP tunnel. If the service is not up, verify that the outer IP routing between the ADC and the server works. Also verify that the inner IP addresses are reachable through the IP tunnel. Depending on the chosen implementation, you might need to add routes on the server or a PBR on the ADC.

Example:

add ns ip 10.0.1.2 255.255.255.252 -vServer DISABLED

add netProfile netProfile_DSR -srcIP 10.0.1.2

add lb monitor mon_DSR PING -LRTM DISABLED -destIP 1.1.1.80 -ipTunnel YES -netProfile netProfile_DSR

<!--NeedCopy-->

To configure a monitor by using the GUI

- Navigate to Traffic Management > Load Balancing > Monitors.

- Create a monitor, and select IP Tunnel.

To create and configure a service for IP over IP DSR by using the GUI

- Navigate to Traffic Management > Load Balancing > Services.

- Create a service and, on the Settings tab, select Use Source IP Address.

To bind a service to a load balancing virtual server by using the command line interface

At the command prompt type the following command:

bind lb vserver <name> <serviceName>

<!--NeedCopy-->

Example:

bind lb vserver Vserver-LB-1 Service-DSR-1

<!--NeedCopy-->

To bind a service to a load balancing virtual server by using the GUI

- Navigate to Traffic Management > Load Balancing > Virtual Servers.

- Open a virtual server, and click in the Services section to bind a service to the virtual server.

Using the client IP address in the outer header of tunnel packets

The NetScaler appliance can use the client source IP address as the source IP address in the outer header of tunnel packets that are sent in direct server return mode using IP tunneling. This feature is supported for both the IPv4 and IPv6 tunneling modes of DSR. To enable it, set the use client source IP address parameter for IPv4 or IPv6. The setting applies globally to all DSR configurations that use IP tunneling.

To use the client source IP address as the source IP address for IPv4 tunnels by using the CLI

At the command prompt, type:

set iptunnelparam -useclientsourceip [YES | NO]

show iptunnelparam

To use the client source IP address as the source IP address for IPv4 tunnels by using the GUI

- Navigate to System > Network.

- On the Settings tab, click IPv4 Tunnel Global Settings.

- On the Configure IPv4 Tunnel Global Parameters page, select the Use Client Source IP check box.

- Click OK.

To use the client source IP address as the source IP address for IPv6 tunnels by using the CLI

At the command prompt, type:

set ip6tunnelparam -useclientsourceip [YES | NO]

show ip6tunnelparam

To use the client source IP address as the source IP address for IPv6 tunnels by using the GUI

- Navigate to System > Network.

- On the Settings tab, click IPv6 Tunnel Global Settings.

- On the Configure IPv6 Tunnel Global Parameters page, select the Use Client Source IP check box.

- Click OK.

Decapsulation configuration

You can configure either a NetScaler appliance or a back-end server as a decapsulator.

NetScaler decapsulation

When a NetScaler appliance is used as a decapsulator, an IP tunnel must be created on the NetScaler appliance. For more details, see Configuring IP Tunnels.

The NetScaler decapsulation setup consists of the following two virtual servers:

- The first virtual server receives the encapsulated packet and removes the outer IP encapsulation.

- The second virtual server has the IP address of the original service on the front-end ADC and uses MAC translation to forward the packet towards the back end by using the MAC address of the bound services. This setup is typically known as L2DSR. Make sure that ARP is disabled on this virtual server.

Example setup:

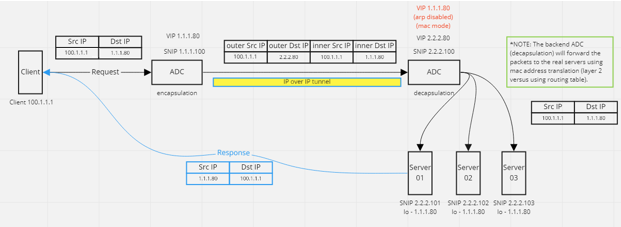

The following illustration shows a decapsulation setup using the ADC appliances.

The complete configuration required for the setup is as follows.

Front-end ADC configuration:

add service svc_DSR01 2.2.2.80 ANY * -usip YES -useproxyport NO

add lb vserver vip_DSR_ENCAP ANY 1.1.1.80 * -lbMethod SOURCEIPHASH -m IPTUNNEL -sessionless ENABLED

bind lb vserver vip_DSR_ENCAP svc_DSR01

<!--NeedCopy-->

Back-end ADC configuration:

add ipTunnel DSR-IPIP 1.1.1.100 255.255.255.255 *

add netProfile netProf_DSR_MBF_noIP -MBF ENABLED

add lb monitor mon_DSR_MAC PING -netProfile netProf_DSR_MBF_noIP

add service svc_DSR01_01 2.2.2.101 ANY * -usip YES -useproxyport NO

add service svc_DSR01_02 2.2.2.102 ANY * -usip YES -useproxyport NO

add service svc_DSR01_03 2.2.2.103 ANY * -usip YES -useproxyport NO

add lb vserver vs_DSR_DECAP ANY 2.2.2.80 * -lbMethod SOURCEIPHASH -m IPTUNNEL -sessionless ENABLED -netProfile netProf_DSR_MBF_noIP

add ns ip 1.1.1.80 255.255.255.255 -type VIP -arp DISABLED -snmp DISABLED

add lb vserver vs_DSR_Relay ANY 1.1.1.80 * -lbMethod SOURCEIPHASH -m MAC -sessionless ENABLED

bind lb vserver vs_DSR_DECAP svc_DSR01_01

bind lb vserver vs_DSR_DECAP svc_DSR01_02

bind lb vserver vs_DSR_DECAP svc_DSR01_03

bind lb vserver vs_DSR_Relay svc_DSR01_01

bind lb vserver vs_DSR_Relay svc_DSR01_02

bind lb vserver vs_DSR_Relay svc_DSR01_03

bind service svc_DSR01_01 -monitorName mon_DSR_MAC

bind service svc_DSR01_02 -monitorName mon_DSR_MAC

bind service svc_DSR01_03 -monitorName mon_DSR_MAC

<!--NeedCopy-->

The following example shows a test setup using Ubuntu and Red Hat servers running apache2. Run these commands on each back-end server.

sudo ip addr add 1.1.1.80/32 dev lo

sudo sysctl net.ipv4.conf.all.arp_ignore=1

sudo sysctl net.ipv4.conf.all.arp_announce=2

sudo sysctl net.ipv4.conf.eth4.rp_filter=2  # eth4 is the interface that has the external IP with the route towards the ADC

sudo sysctl net.ipv4.conf.all.forwarding=1

sudo ip link set dev lo arp on

<!--NeedCopy-->

Back-end server decapsulation

When you use the back-end servers as decapsulators, the back-end configuration varies depending on the server OS type. You can configure a back-end server as a decapsulator by following these steps:

- Configure a loopback interface with the service IP address.

- Create a tunnel interface.

- Add a route through the tunnel interface.

- Configure interface settings as required for traffic.

Note:

Windows OS servers cannot do IP tunneling natively, so the commands are provided as examples for Linux-based systems. Third-party plug-ins are available for Windows OS servers, but they are outside the scope of this example.

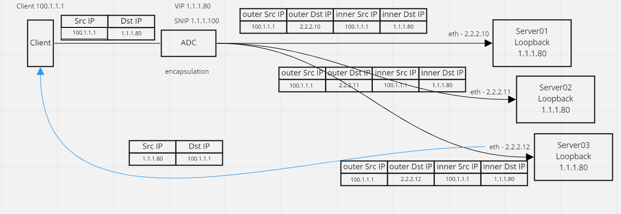

The following illustration shows a decapsulation setup using the back-end servers.

Example configuration:

In this example, 1.1.1.80 is the NetScaler virtual IP (VIP) address and 2.2.2.10-2.2.2.12 are the back-end server IP addresses. The VIP address is configured in the loopback interface, and a route is added through the tunnel interface. The monitors use the server IP, and tunnel the monitor packets over the IP tunnel using the tunnel endpoints.

The complete configuration required for the setup is as follows.

Front-end ADC configuration:

The following configuration creates a monitor that uses the tunnel endpoint as its source and sends pings over the tunnel to the service IP address.

add ns ip 10.0.1.2 255.255.255.252 -vServer DISABLED

add netProfile netProfile_DSR -srcIP 10.0.1.2

add lb monitor mon_DSR PING -LRTM DISABLED -destIP 1.1.1.80 -ipTunnel YES -netProfile netProfile_DSR

<!--NeedCopy-->

The following configuration creates a VIP for the service that uses the original source IP address and forwards traffic over the IP tunnel to the back-end servers.

add service svc_DSR01 2.2.2.10 ANY * -usip YES -useproxyport NO

bind service svc_DSR01 -monitorName mon_DSR

add service svc_DSR02 2.2.2.11 ANY * -usip YES -useproxyport NO

bind service svc_DSR02 -monitorName mon_DSR

add service svc_DSR03 2.2.2.12 ANY * -usip YES -useproxyport NO

bind service svc_DSR03 -monitorName mon_DSR

add lb vserver vip_DSR_ENCAP ANY 1.1.1.80 * -lbMethod SOURCEIPHASH -m IPTUNNEL -sessionless ENABLED

bind lb vserver vip_DSR_ENCAP svc_DSR01

bind lb vserver vip_DSR_ENCAP svc_DSR02

bind lb vserver vip_DSR_ENCAP svc_DSR03

<!--NeedCopy-->
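The service and bind commands above repeat once per back-end server. As a sketch only, a small shell helper can print that sequence for any list of servers so that it can be reviewed and pasted into the ADC command prompt. The helper name `gen_frontend_config` is hypothetical; the service naming scheme and the command options are taken from the example above, and the monitor is assumed to exist already.

```shell
#!/bin/sh
# Hypothetical helper: print the front-end ADC commands for a list of
# back-end servers. Usage:
#   gen_frontend_config <vserver> <vip> <monitor> <server-ip>...
# The monitor named in <monitor> is assumed to have been created beforehand.
gen_frontend_config() {
    vserver=$1
    vip=$2
    monitor=$3
    shift 3
    # The virtual server is created first so that services can be bound to it.
    printf 'add lb vserver %s ANY %s * -lbMethod SOURCEIPHASH -m IPTUNNEL -sessionless ENABLED\n' "$vserver" "$vip"
    i=1
    for server_ip in "$@"; do
        svc=$(printf 'svc_DSR%02d' "$i")
        printf 'add service %s %s ANY * -usip YES -useproxyport NO\n' "$svc" "$server_ip"
        printf 'bind service %s -monitorName %s\n' "$svc" "$monitor"
        printf 'bind lb vserver %s %s\n' "$vserver" "$svc"
        i=$((i + 1))
    done
}

# Prints the commands for the three servers used in the example.
gen_frontend_config vip_DSR_ENCAP 1.1.1.80 mon_DSR 2.2.2.10 2.2.2.11 2.2.2.12
```

The helper only prints text and does not connect to the appliance, so the output can be checked before it is applied.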

Back-end server configuration of each server:

The following commands are required for the back-end server to receive the IPIP packet, remove the outer encapsulation, then respond from the loopback to the original client IP. Doing so ensures the IP addresses in the packet received by the client match the IP addresses in the original request.

sudo modprobe ipip

sudo ip addr add 1.1.1.80/32 dev lo

nmcli connection add type ip-tunnel ip-tunnel.mode ipip con-name tun0 ifname tun0 remote 198.51.100.5 local 203.0.113.10

nmcli connection modify tun0 ipv4.addresses '10.0.1.1/30'

nmcli connection up tun0

sudo sysctl net.ipv4.conf.all.arp_ignore=1

sudo sysctl net.ipv4.conf.all.arp_announce=2

sudo sysctl net.ipv4.conf.tun0.rp_filter=2

sudo sysctl net.ipv4.conf.all.forwarding=1

sudo ip link set dev lo arp off

<!--NeedCopy-->
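The `sysctl` commands above change only the running kernel, so the values are lost when the server restarts. The following is a minimal sketch for persisting them, assuming a distribution that reads `/etc/sysctl.d/*.conf` at boot. The helper name `dsr_sysctl_conf` and the file name `90-l3dsr.conf` are illustrative, not part of the NetScaler documentation.

```shell
#!/bin/sh
# Hypothetical helper: print the kernel settings from the example above in
# sysctl.d format. The first argument is the tunnel interface (default tun0).
dsr_sysctl_conf() {
    tun_if=${1:-tun0}
    cat <<EOF
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
net.ipv4.conf.${tun_if}.rp_filter = 2
net.ipv4.conf.all.forwarding = 1
EOF
}

# Preview the file content. To install it, redirect the output as root:
#   dsr_sysctl_conf tun0 > /etc/sysctl.d/90-l3dsr.conf && sysctl --system
dsr_sysctl_conf tun0
```

The per-interface `rp_filter` key can be applied only while the tunnel interface exists, so on systems where `tun0` comes up after the sysctl files are processed, that value might need to be set again once the tunnel is active.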
