
Kubernetes Ingress solution

This topic provides an overview of the Kubernetes Ingress solution provided by NetScaler® and explains the benefits.

What is Kubernetes Ingress?

When you run an application inside a Kubernetes cluster, you need to give external users a way to reach it from outside the cluster. Kubernetes provides an object called Ingress for this purpose, which lets you expose multiple services through a single stable IP address. A Kubernetes Ingress object is always associated with one or more services and acts as a single entry point for external users to access services running inside the cluster.

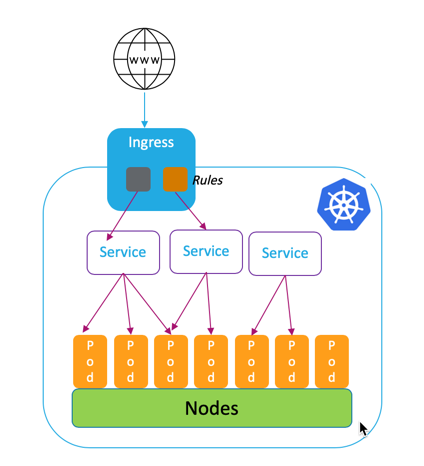

The following diagram explains how Kubernetes Ingress works.

Kubernetes Ingress implementation consists of the following components:

- Ingress resource. An Ingress resource allows you to define rules for accessing the applications from outside the cluster.

- Ingress controller. An Ingress controller is an application deployed inside the cluster that interprets the rules defined in the Ingress resource. The Ingress controller converts the Ingress rules into configuration instructions for a load balancing application integrated with the cluster. The load balancer can be a software application running inside your Kubernetes cluster or a hardware appliance running outside the cluster.

- Ingress device. An Ingress device is a load balancing application, such as NetScaler CPX, VPX, or MPX, that performs load balancing according to the configuration instructions provided by the Ingress controller.
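As a rough illustration of how these three components relate, the following Python sketch models an Ingress resource as a dictionary, a controller step that flattens its rules into route entries, and a device step that picks a backend for a request. The dictionary follows the general shape of a `networking.k8s.io/v1` Ingress manifest, but the host name, service names, and the helper functions `ingress_to_routes`, `prefix_matches`, and `pick_backend` are assumptions made for this example. They are not part of Kubernetes or of any NetScaler API.

```python
# Hypothetical Ingress resource, written as the Python equivalent of a
# networking.k8s.io/v1 manifest. Host and service names are made up.
INGRESS = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "shop-ingress"},
    "spec": {
        "rules": [
            {
                "host": "shop.example.com",
                "http": {
                    "paths": [
                        {
                            "path": "/cart",
                            "pathType": "Prefix",
                            "backend": {"service": {"name": "cart-svc", "port": {"number": 8080}}},
                        },
                        {
                            "path": "/",
                            "pathType": "Prefix",
                            "backend": {"service": {"name": "web-svc", "port": {"number": 80}}},
                        },
                    ]
                },
            }
        ]
    },
}


def ingress_to_routes(ingress):
    """Controller step (simplified): flatten Ingress rules into route entries."""
    routes = []
    for rule in ingress["spec"]["rules"]:
        for entry in rule["http"]["paths"]:
            service = entry["backend"]["service"]
            routes.append(
                {
                    "host": rule["host"],
                    "path": entry["path"],
                    "service": service["name"],
                    "port": service["port"]["number"],
                }
            )
    return routes


def prefix_matches(prefix, path):
    """Match whole path segments, so /cart matches /cart/items but not /cartoon."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def pick_backend(routes, host, path):
    """Device step (simplified): choose the longest matching prefix for a request."""
    matches = [r for r in routes if r["host"] == host and prefix_matches(r["path"], path)]
    if not matches:
        return None
    best = max(matches, key=lambda r: len(r["path"]))
    return f"{best['service']}:{best['port']}"


if __name__ == "__main__":
    routes = ingress_to_routes(INGRESS)
    print(pick_backend(routes, "shop.example.com", "/cart/items"))  # cart-svc:8080
    print(pick_backend(routes, "shop.example.com", "/cartoon"))     # web-svc:80
```

A real Ingress controller also watches the cluster for changes and handles TLS, other path types, and default backends. The sketch only shows the rule-to-configuration translation described above.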

What is the Kubernetes Ingress solution from NetScaler?

In this solution, NetScaler provides an implementation of the Kubernetes Ingress controller to manage and route traffic into your Kubernetes cluster using NetScaler ADCs (NetScaler CPX, VPX, or MPX). The NetScaler Ingress Controller integrates NetScaler ADCs with your Kubernetes environment and configures NetScaler CPX, VPX, or MPX according to the Ingress rules.

Standard Kubernetes Ingress solutions provide load balancing only at layer 7 (HTTP or HTTPS traffic). Sometimes, you need to expose legacy applications that rely on TCP or UDP, and you need a way to load balance those applications. The NetScaler Ingress Controller solution supports TCP, TCP-SSL, and UDP traffic in addition to the standard HTTP or HTTPS Ingress. It also works across multiple clouds and on-premises data centers.

NetScaler provides enterprise-grade traffic management policies, such as rewrite and responder policies, for efficiently load balancing traffic at layer 7. Standard Kubernetes Ingress lacks such policies. With the Kubernetes Ingress solution from NetScaler, you can apply rewrite and responder policies to application traffic in a Kubernetes environment by using the custom resource definitions (CRDs) provided by NetScaler.

The Kubernetes Ingress solution from NetScaler also supports automated canary deployment for your CI/CD application pipeline. In this solution, NetScaler is integrated with the Spinnaker platform and acts as a source of accurate metrics for analyzing the canary deployment using Kayenta. After analyzing the metrics, Kayenta generates an aggregate score for the canary and decides whether to promote or fail the canary version. You can also regulate traffic distribution to the canary version using the NetScaler policy infrastructure.
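The promote-or-fail decision can be pictured with a small Python sketch. This is a simplified stand-in, not Kayenta's actual scoring algorithm: the function `judge_canary`, the metric names, and the 75-point pass threshold are all assumptions chosen for illustration. The score here is simply the percentage of metrics judged healthy.

```python
def judge_canary(metric_passed, pass_threshold=75.0):
    """Return (score, decision) for a canary.

    metric_passed maps a metric name to True (canary looks healthy on this
    metric) or False. The score is the percentage of healthy metrics, which
    is a deliberate simplification of how a canary judge aggregates results.
    """
    if not metric_passed:
        raise ValueError("at least one metric is required")
    healthy = sum(1 for ok in metric_passed.values() if ok)
    score = 100.0 * healthy / len(metric_passed)
    decision = "promote" if score >= pass_threshold else "fail"
    return score, decision


if __name__ == "__main__":
    # Hypothetical metric classifications for one canary run.
    results = {"latency": True, "error_rate": True, "cpu": True, "memory": False}
    print(judge_canary(results))  # (75.0, 'promote')
```

In a real pipeline the threshold and the metric set come from the canary configuration, and the metrics themselves come from the traffic statistics that NetScaler exposes.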

The following table summarizes the benefits offered by the Ingress solution from NetScaler over Kubernetes Ingress.

| Features | Kubernetes Ingress | Ingress Solution from NetScaler |
|---|---|---|
| HTTP and HTTPS support | Yes | Yes |
| URL routing | Yes | Yes |
| TLS | Yes | Yes |
| Load balancing | Yes | Yes |
| TCP, TCP-SSL | No | Yes |
| UDP | No | Yes |
| HTTP/2 | Yes | Yes |
| Automated canary deployment support with CI/CD tools | No | Yes |
| Support for applying NetScaler rewrite and responder policies | No | Yes |
| Authentication (Open Authorization (OAuth), mutual TLS (mTLS)) | No | Yes |
| Support for applying NetScaler Rate Limiting policies | No | Yes |

Deployment options for Kubernetes Ingress solution

The Kubernetes Ingress solution from NetScaler provides a flexible architecture that you can choose based on how you want to manage your NetScaler ADCs and Kubernetes environment.

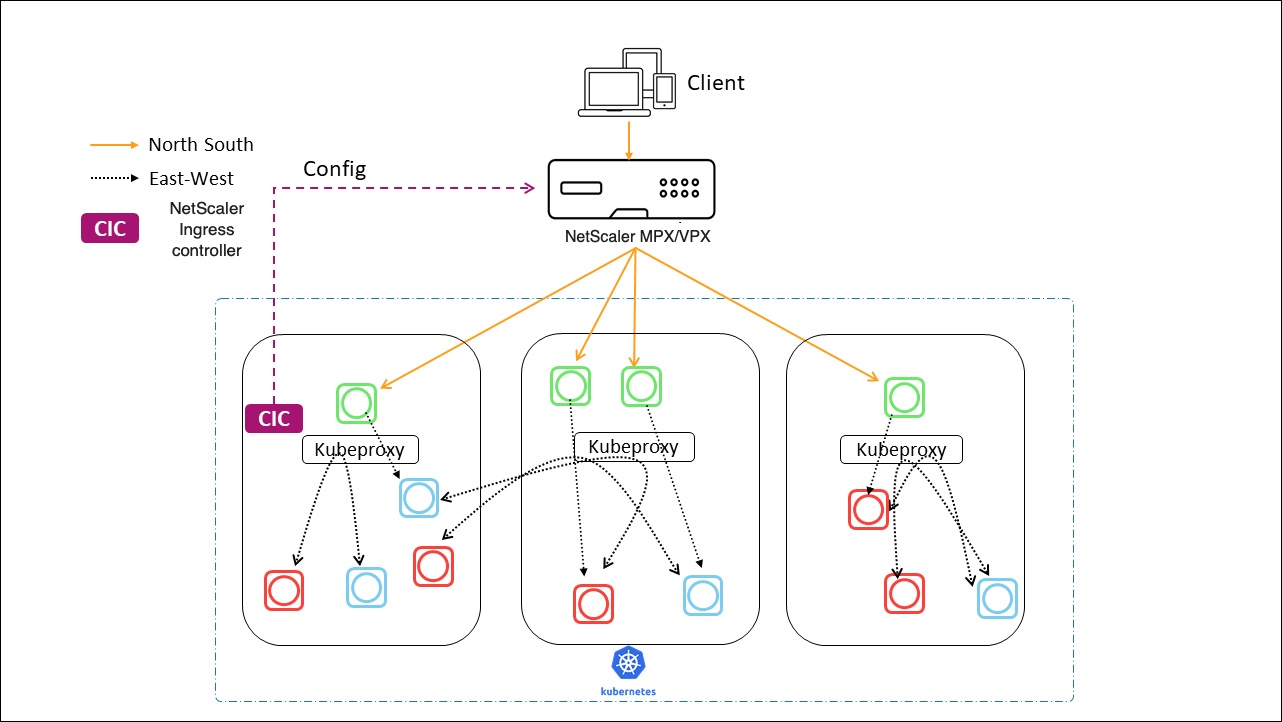

Unified Ingress (single-tier)

In a unified Ingress (single-tier) architecture, a NetScaler MPX or VPX device deployed outside the Kubernetes cluster is integrated with the Kubernetes environment using the NetScaler Ingress Controller. The NetScaler Ingress Controller is deployed as a pod in the Kubernetes cluster and automates the configuration of NetScaler ADCs based on changes to the microservices or the Ingress resources. The NetScaler device performs functions like load balancing, TLS termination, and HTTP or TCP protocol optimizations on inbound traffic and then routes the traffic to the correct microservice within a Kubernetes cluster. This architecture suits best in scenarios where the same team manages the Kubernetes platform and other networking infrastructure including application delivery controllers (ADCs).

The following diagram shows a deployment using the unified Ingress architecture.

A unified Ingress solution provides the following key benefits:

- Provides a way to extend the capabilities of your existing NetScaler infrastructure to the Kubernetes environment

- Enables you to apply traffic management policies for inbound traffic

- Provides a simplified architecture suitable for network-savvy DevOps teams

- Supports multitenancy
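The automation described for the unified architecture, where the controller updates the ADC whenever microservices or Ingress resources change, can be sketched as a reconcile step that compares the desired state from the cluster with the state currently configured on the device. The function `reconcile` and the sample service bindings below are hypothetical and only illustrate the idea. They do not reflect how the NetScaler Ingress Controller is implemented.

```python
def reconcile(current, desired):
    """Compute the changes needed to move the device from current to desired.

    Both arguments are sets of (service_name, "ip:port") bindings. The return
    value is a pair of sorted lists: bindings to add and bindings to remove.
    """
    to_add = sorted(desired - current)
    to_remove = sorted(current - desired)
    return to_add, to_remove


if __name__ == "__main__":
    # State assumed to be configured on the ADC right now (made-up addresses).
    on_device = {("cart-svc", "10.0.0.5:8080"), ("cart-svc", "10.0.0.6:8080")}
    # State derived from the cluster after one pod was replaced.
    in_cluster = {("cart-svc", "10.0.0.6:8080"), ("cart-svc", "10.0.0.9:8080")}

    add, remove = reconcile(on_device, in_cluster)
    print(add)     # [('cart-svc', '10.0.0.9:8080')]
    print(remove)  # [('cart-svc', '10.0.0.5:8080')]
```

Applying only the difference, instead of rewriting the whole configuration, keeps unaffected services undisturbed when a single pod changes.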

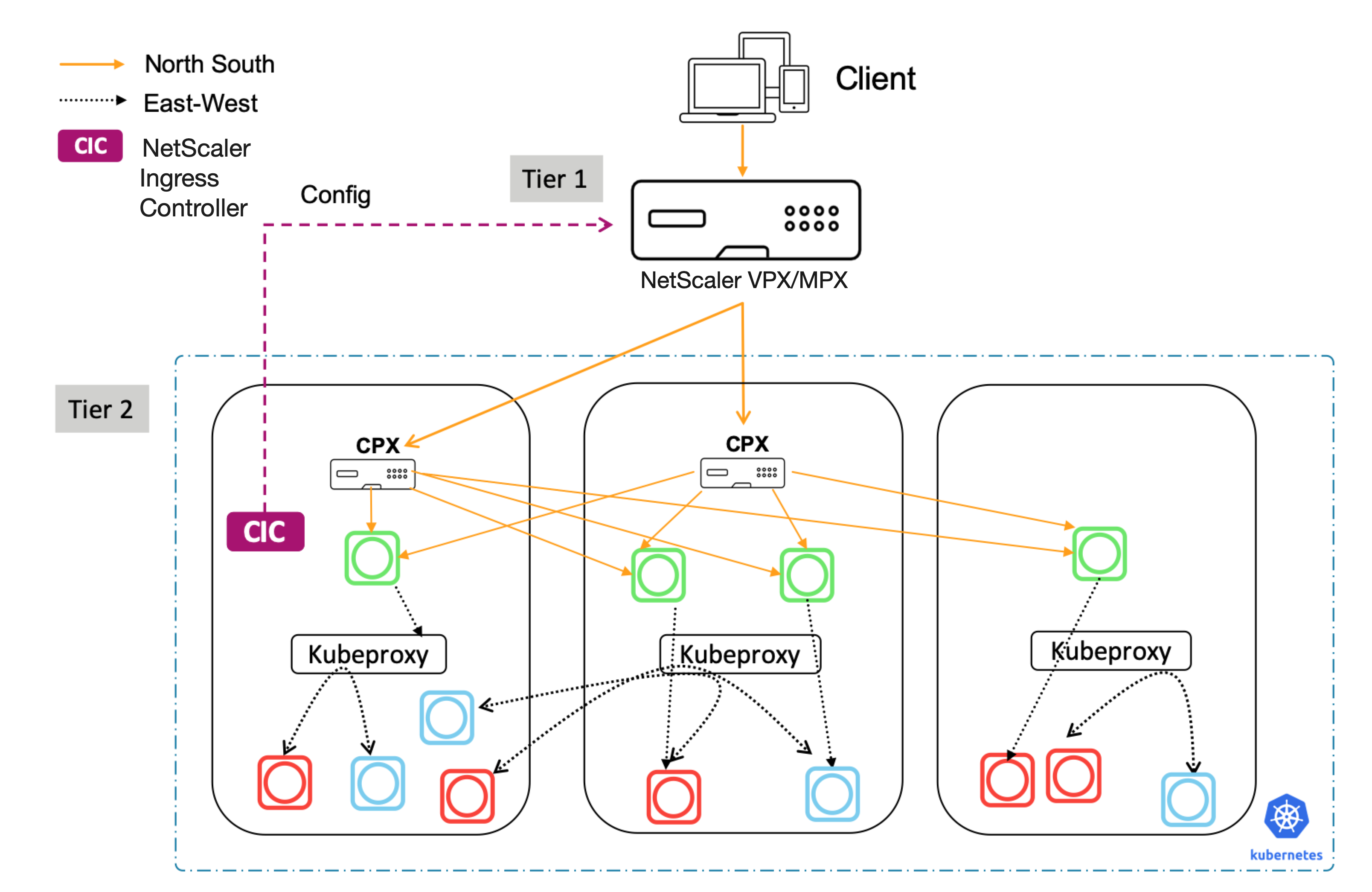

Dual-tier Ingress

In a dual-tier architecture, NetScaler (MPX or VPX) deployed outside the Kubernetes cluster acts at tier 1 and load balances North-South traffic to NetScaler CPXs running inside the cluster. NetScaler CPX acts at tier 2 and performs load balancing for microservices inside the Kubernetes cluster.

In scenarios where separate teams manage the Kubernetes platform and the network infrastructure, the dual-tier architecture is most suitable.

Networking teams use tier 1 NetScaler for use cases such as GSLB, TLS termination on the hardware platform, and TCP load balancing. Kubernetes platform teams can use tier 2 NetScaler (CPX) for Layer 7 (HTTP/HTTPS) load balancing, mutual TLS, and observability or monitoring of microservices. The tier 2 NetScaler (CPX) can have a different software release version than the tier 1 NetScaler to accommodate newly available capabilities.

The following diagram shows a deployment with dual-tier architecture.

A dual-tier Ingress provides the following key benefits:

- Ensures high velocity of application development for developers or platform teams

- Enables you to apply developer-driven traffic management policies to microservices inside the Kubernetes cluster

- Enables cloud scale and multitenancy
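The two-stage traffic flow of the dual-tier architecture can be sketched in Python as follows. The classes `Tier1Adc` and `Tier2Cpx`, the round-robin choice at tier 1, and the service names are assumptions made for this example. A real deployment can use any load balancing method and a richer routing policy at either tier.

```python
from itertools import cycle


class Tier2Cpx:
    """Stands in for a NetScaler CPX inside the cluster (layer 7 routing)."""

    def __init__(self, name, path_to_service):
        self.name = name
        self.path_to_service = path_to_service

    def route(self, path):
        # Pick the longest configured prefix that the request path starts with.
        # This is a plain string-prefix match, kept simple for illustration.
        matches = [p for p in self.path_to_service if path.startswith(p)]
        if not matches:
            return None
        return self.path_to_service[max(matches, key=len)]


class Tier1Adc:
    """Stands in for the external MPX or VPX; round-robin is an assumption."""

    def __init__(self, cpx_instances):
        self._rotation = cycle(cpx_instances)

    def forward(self, path):
        # Tier 1 only chooses a CPX; tier 2 chooses the microservice.
        cpx = next(self._rotation)
        return cpx.name, cpx.route(path)


if __name__ == "__main__":
    table = {"/": "web-svc", "/api": "api-svc"}  # hypothetical services
    tier1 = Tier1Adc([Tier2Cpx("cpx-1", table), Tier2Cpx("cpx-2", table)])
    print(tier1.forward("/api/users"))   # ('cpx-1', 'api-svc')
    print(tier1.forward("/index.html"))  # ('cpx-2', 'web-svc')
```

Keeping the microservice routing table only in tier 2 mirrors the split of responsibilities described above: the networking team owns tier 1, and the platform team can change tier 2 routing without touching it.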

For more information, see the NetScaler Ingress Controller documentation.

Getting started

To get started with the Kubernetes Ingress solution from NetScaler, you can try out the following examples:
